VMware PEX is on this week and we’re thrilled to be a part of it. We had a very exciting announcement go out about the unveiling of an all-flash VSAN solution, and wanted to share some additional information on configuration and testing results.

As you likely already know, VSAN 1.0 supports a hybrid storage environment in which the cache layer must use flash (SSDs) while the capacity layer uses magnetic media (HDDs). In previous blogs, I discussed configuring an all-flash setup in VSAN 1.0 by marking the SSDs as HDDs. However, this is not officially supported.

With the upcoming release of vSphere 6.0/VSAN 6.0, VMware will support an all-flash VSAN configuration. And this is absolutely great news for VSAN users. It not only allows users to “pick and utilize” different SSDs (and you can take a look at our broad portfolio of SSDs to get a sense of the breadth of choices you have) but it also gives us at SanDisk® the opportunity to showcase the real benefit of flash drives.

In this blog I’m happy to share with you some of our early testing, configurations and results.

All-Flash VSAN Testing Configuration

There are many workloads that are good candidates for an all-flash VSAN, and VDI is certainly a prominent one. For this series of tests we decided to begin with business application workloads. Documentation of additional workloads will soon follow, and I’ll share those results on our blog as well.

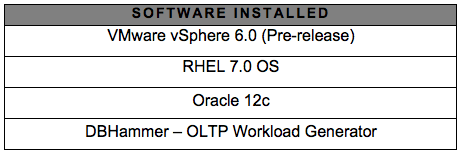

We have built a 4-node all-flash VSAN with a pre-release build of vSphere 6.0. The following table shows the hardware and virtual machine (VM) configuration of our test bed:

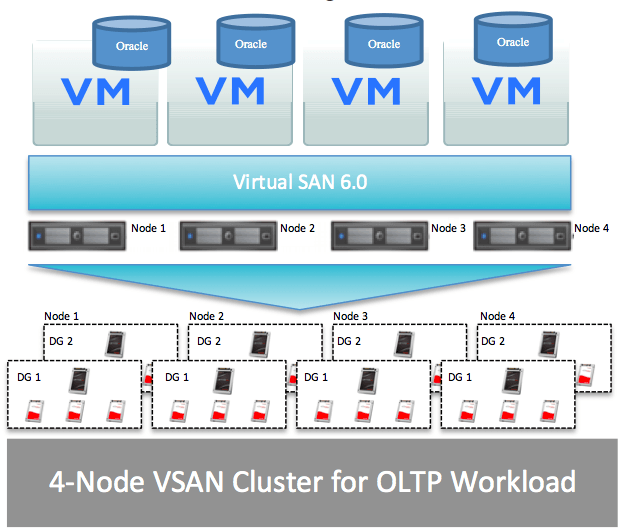

Figure 1.4 shows the VSAN architecture used for this testing:

As you can see in the above picture, 4 VMs are running in a 4-node VSAN cluster. Oracle 12c is installed in each VM, the Oracle data disks are configured using ASM, and the HammerDB OLTP workload generator is used to run a TPC-C-like workload, which simulates operations similar to purchase orders in e-commerce applications.

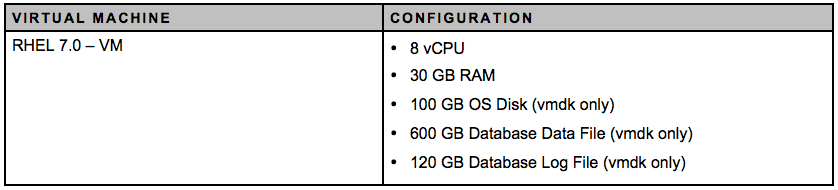

Each node is configured with 2 Disk Groups (DG) using SanDisk Lightning Ascend Gen II 12G SAS SSDs and CloudSpeed SATA SSDs as shown below:

All-Flash VSAN Testing Results

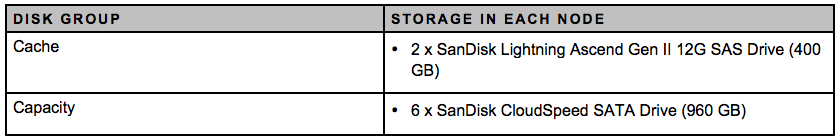

We decided to run these tests for 1 hour and to measure the Transactions per Minute (TPM) delivered by each VM. The VMs are configured so that the tests start at the same time on all of them and run for an hour. The TPM values are collected from the HammerDB log file generated at the end of the test.
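As a rough illustration of collecting TPM from per-VM log files, here is a minimal Python sketch. It is not the script we used for this testing. The result-line pattern is an assumption about how HammerDB reports its result and may differ between versions, the function names are hypothetical, and the sample lines are made up for illustration, not measured values.

```python
import re

# Assumed result-line format; adjust the pattern to match your HammerDB version.
RESULT_RE = re.compile(r"System achieved (\d+) .*?TPM at (\d+) NOPM")


def parse_tpm(log_text):
    """Return the TPM from the last matching result line, or None if absent."""
    matches = RESULT_RE.findall(log_text)
    if not matches:
        return None
    tpm, _nopm = matches[-1]
    return int(tpm)


def total_tpm(logs_by_vm):
    """Sum per-VM TPM; VMs whose log has no result line are skipped."""
    per_vm = {vm: parse_tpm(text) for vm, text in logs_by_vm.items()}
    total = sum(v for v in per_vm.values() if v is not None)
    return total, per_vm


# Made-up log lines for illustration only; these are not test results.
sample_logs = {
    "vm1": "Vuser 1:TEST RESULT : System achieved 1000 Oracle TPM at 300 NOPM",
    "vm2": "Vuser 1:TEST RESULT : System achieved 2000 Oracle TPM at 600 NOPM",
}

total, per_vm = total_tpm(sample_logs)
print(total, per_vm)
```

In practice you would read each VM's log file from disk and pass its contents in place of the sample strings.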

The following image shows the steady state HammerDB TPM during the test run:

As you can see, the 4 VMs together generated more than 2 million TPM. The TPM values stay at a consistent level throughout the test run, with no drops or spikes. Note that HammerDB's reported TPM includes the ramp-up and ramp-down periods of the test, so the reported average is somewhat lower than the steady-state TPM.
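To make the effect of ramp-up and ramp-down on the average concrete, here is a small Python sketch that compares a whole-run average with an average over the steady-state window only. The per-minute samples and the ramp lengths are made up for illustration and are not taken from our test; the function names are hypothetical.

```python
def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)


def steady_state_mean(samples, ramp_up, ramp_down):
    """Average after dropping ramp_up leading and ramp_down trailing samples."""
    end = len(samples) - ramp_down
    window = samples[ramp_up:end]
    if not window:
        raise ValueError("ramp periods cover the whole run")
    return mean(window)


# Made-up per-minute TPM samples: two ramp-up and two ramp-down minutes.
samples = [100, 300, 500, 500, 500, 500, 300, 100]

overall = mean(samples)                    # includes the ramp periods
steady = steady_state_mean(samples, 2, 2)  # steady-state window only
print(overall, steady)
```

With these illustrative numbers the whole-run average comes out lower than the steady-state average, which is the same effect described above for the HammerDB averages.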

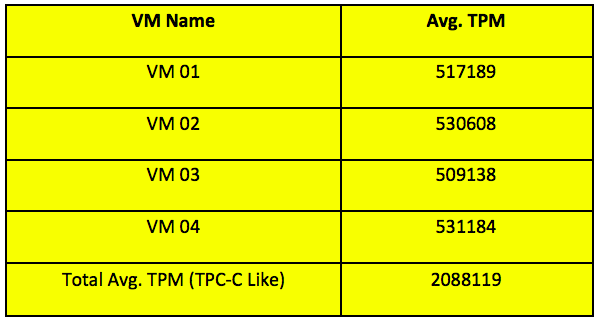

The table below shows the HammerDB average TPM after the completion of the 1-hour test:

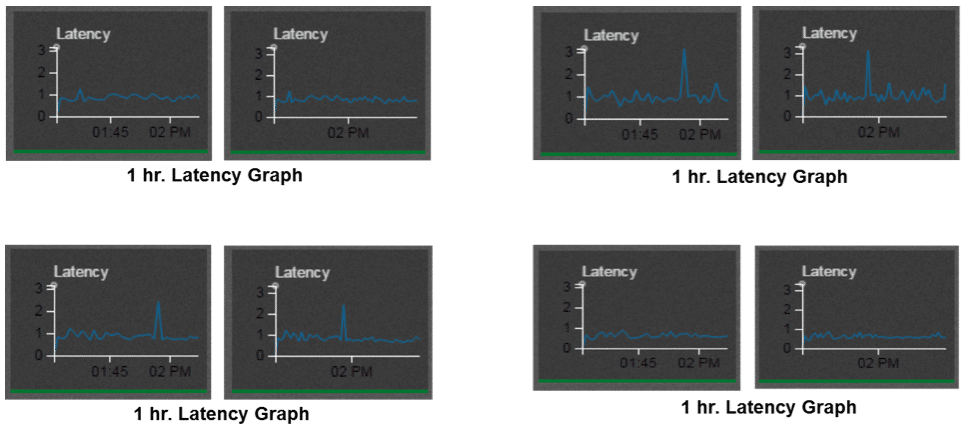

To further examine performance, we measured the VSAN disk latency during the test run and captured the results using VSAN Observer:

As you can see, the latency stays below 1 millisecond, with just one spike during the 1-hour run. This sub-millisecond latency shows how the VSAN all-flash configuration can deliver consistent performance to maintain the desired application SLA.

Conclusion

All-flash VSAN is the beginning of a new hyper-converged storage era. Our testing results reach up to 2 million TPM, and there is still room for further optimization, such as scaling up or scaling out with more VMs in the 4-node cluster to drive more TPM. The vSphere hosts' CPU and memory and the all-flash VSAN configuration can accommodate even more VMs in this environment.

We are currently in the process of optimizing this new environment, and I hope to share the next set of results with you soon on this blog.

If you have any questions, you can reach me at biswapati.bhattacharjee@sandiskoneblog.wpengine.com, or join the conversation on Twitter with @SanDiskDataCtr.

Acknowledgement

I would like to thank Rawlinson Rivera, Sr. Technical Marketing Architect, and Rakesh Radhakrishnan Nair, Sr. Product Manager, both from VMware, for educating me and sharing valuable information while we built the pre-release all-flash VSAN.