6 Ways to Optimize the Cost of Your Big Data Platform

Western Digital has been on its Big Data Platform journey for six years. We have learned a tremendous amount, particularly about the investments we need to make in technologies and tools and capabilities, and their cost. Here are a few key learnings from JuneAn Lanigan, the global head of enterprise data management.

How Western Digital Built an Efficient Data Infrastructure

Hi, my name is JuneAn Lanigan and I’m the global head of enterprise data management at Western Digital. I’m really excited to be here today to tell you about our journey of building out our big data platform and ecosystem over the past six years. We’ve definitely learned a lot; we placed a lot of bets; we’ve forged into new territories. Where we are today is that we have a best-in-class big data platform that enables analytics across all of our product engineering, product development, manufacturing, and product quality analytics teams.

As we built this platform, one of our focus areas has been futureproofing – how do we ensure that we’re building a platform that not only satisfies the business needs of today, but also anticipates the needs of the future? We’ve done a lot of work with the business to dream with them about where they’re going to go, and to help inform us on how to build an architecture that supports that. The other thing we really wanted to focus on is cost: the investments that we need to make, and how we optimize those investments as we futureproof to make sure that we can scale.

Today, I’d like to just highlight some of the areas of costs that have been our major focus.

1. Third-Party vs. In-House Expertise

One of the first areas of focus for us was making a decision on where we were going to host this platform – was it going to be on the cloud, was it going to be on-premise, was it going to be a hybrid of that? The reality was that our internal experience was on-premise data warehouses, not big data platforms, and so we opted, at that point, to leverage external expertise. [As a result,] we actually built our platform on a hosted environment where we just brought the data because we knew the data, but we didn’t know the rest of the technology requirements.

The other [area of focus for us was] that we hired third-party data science consultants, as well, because [as mentioned] we knew our data, but we didn’t know how to bridge it together into a connected view across all of our manufacturing sites. So, that was very intentional, and I have to say that intention at every stage [is very important] – just because you make a decision in the beginning doesn’t mean you have to stay there.


What we learned is that we had built up our own expertise on this platform. We knew our data, we knew the way the engineers needed to use it, so we made a decision, at a point in time, to bring it into our own virtual private cloud and rebuild those platforms – the data pipelines – around the way we had seen them actually being used, and that was very cost-effective for us.

2. Data Ownership and Hosting

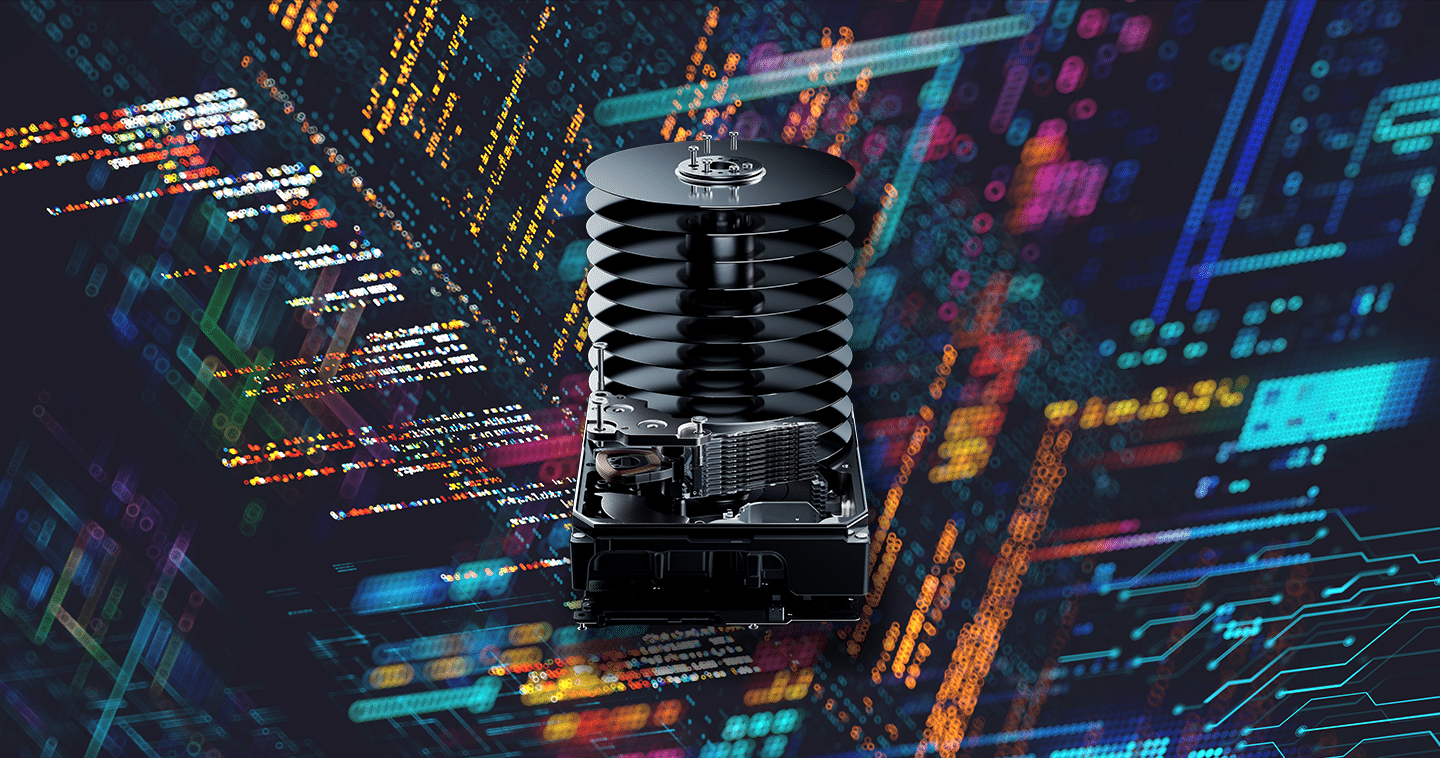

Another focus area for us was the cost of data ownership. The way I think about that is when you implement your data into a cloud environment, number one it’s expensive to store all your data in that environment, but what we found is that it’s also very expensive to get your data out of that environment. So, we partnered with our Data Center Systems group and implemented ActiveScale™, which is an S3 object store, in our Las Vegas data center.

So, we actually started to build out that hybrid environment where all the data comes from our factories into our Las Vegas data center on ActiveScale, and we bring to the cloud only the data that we actually need. We were able to convert 10 years of data from tape onto ActiveScale. We have about five petabytes of data on our ActiveScale system, and we only bring 18 to 24 months of data onto the cloud because that’s all that the engineers need for analysis. We also built in capabilities where they can ask for more data on demand. [That] really saved us about $600,000 a year, just by keeping all the data that we want to have in our own environment [while] only sending the data we need into the cloud.
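The split described above, a full golden copy on-premises with only a recent window in the cloud, can be sketched as a simple retention rule. This is a minimal illustration, not the actual Western Digital pipeline: the catalog entries, the function names `split_by_retention` and `request_on_demand`, and the 30-day month approximation are all assumptions made for the example.

```python
from datetime import date, timedelta

# Hypothetical catalog of (object key, production date) pairs.
# The keys and dates are illustrative only.
CATALOG = [
    ("factory-a/2012/lot-001.csv", date(2012, 3, 1)),
    ("factory-a/2018/lot-774.csv", date(2018, 1, 15)),
    ("factory-b/2019/lot-912.csv", date(2019, 6, 30)),
]

def split_by_retention(catalog, today, cloud_months=24):
    """Split the golden-copy catalog into keys to replicate to the cloud
    and keys that stay only in the on-premises object store."""
    # Assumption: a month is approximated as 30 days.
    cutoff = today - timedelta(days=30 * cloud_months)
    to_cloud = [key for key, produced in catalog if produced >= cutoff]
    on_prem_only = [key for key, produced in catalog if produced < cutoff]
    return to_cloud, on_prem_only

def request_on_demand(key, on_prem_only):
    """Model an engineer asking for older data: it can be copied to the
    cloud on request only if it exists in the on-premises golden copy."""
    return key in on_prem_only

to_cloud, on_prem_only = split_by_retention(CATALOG, today=date(2019, 9, 1))
```

With a 24-month window, only the 2018 and 2019 objects are selected for the cloud, while the 2012 object stays on-premises and remains available on request.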

The other thing about that is that when you have what we call “that golden copy” – all of our data in one environment – then we’re not really beholden to any service provider. We could go to a different cloud if we felt it was necessary or appropriate in [the] futureproofing of our platform. If we wanted to come completely on-premises, we could do that, as well. That’s another thing to consider when you’re thinking about costs.

3. Data Quality

Another point to consider is the cost of data quality. Data quality is definitely in the eye of the beholder, and so we constantly want to look at the dimensions of quality, right – accuracy, completeness, timeliness, etc.
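Two of those dimensions, completeness and timeliness, are straightforward to express as ratios over a batch of records. The sketch below is one possible way to score them and is not taken from the talk: the record fields (`serial`, `yield_pct`, `received_at`) and both function names are hypothetical. Accuracy is left out because it needs a trusted reference to compare against.

```python
from datetime import datetime, timedelta

def completeness(records, required_fields):
    """Fraction of records in which every required field is present and non-empty."""
    if not records:
        return 0.0
    complete = sum(
        all(r.get(field) not in (None, "") for field in required_fields)
        for r in records
    )
    return complete / len(records)

def timeliness(records, now, max_age):
    """Fraction of records received no longer than max_age before now."""
    if not records:
        return 0.0
    fresh = sum((now - r["received_at"]) <= max_age for r in records)
    return fresh / len(records)

# Hypothetical sample batch: one record lacks a yield value, one is stale.
NOW = datetime(2019, 9, 1, 12, 0)
RECORDS = [
    {"serial": "A1", "yield_pct": 98.2, "received_at": NOW - timedelta(hours=1)},
    {"serial": "A2", "yield_pct": None, "received_at": NOW - timedelta(hours=2)},
    {"serial": "A3", "yield_pct": 97.5, "received_at": NOW - timedelta(days=3)},
    {"serial": "A4", "yield_pct": 99.0, "received_at": NOW - timedelta(hours=5)},
]

completeness_score = completeness(RECORDS, ["serial", "yield_pct"])
timeliness_score = timeliness(RECORDS, NOW, max_age=timedelta(days=1))
```

Scores like these can then be compared against whatever threshold the users of the data agree on.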

What we found is that, because of the way we had architected our system, we were bringing the data directly from the factories through the network, through buffer servers, to the cloud, and whenever there was a hiccup in the network, we would get corrupted data. [As a result,] it was expensive, but we constantly had to – what we called – repair that data in order to provide it to our users at the level of quality that they expected. We were really only meeting about 85% of our threshold. We were not giving them the level of quality that they wanted and therefore, sometimes, they would walk away because they didn’t trust the data. We had to solve this problem, and again what we did was implement ActiveScale in our Las Vegas data center, and instead of bringing that data through the buffer servers, we brought it direct. We streamlined it directly from the factory into ActiveScale. Then, from there, we could bring, again, just the data we need up into the cloud.
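The talk does not say how corrupted transfers were detected. One common approach, shown here purely as an assumption, is to compute a checksum at the source and compare it after the transfer, so that a truncated or altered file is caught before it reaches users. The payload and the function names `sha256_hex` and `verify_transfer` are hypothetical.

```python
import hashlib

def sha256_hex(payload: bytes) -> str:
    """Return the SHA-256 digest of the payload as a hex string."""
    return hashlib.sha256(payload).hexdigest()

def verify_transfer(received: bytes, expected_digest: str) -> bool:
    """True if the received bytes match the digest computed at the source."""
    return sha256_hex(received) == expected_digest

# Hypothetical factory record and the digest recorded before sending it.
original = b"lot=774,yield=98.2\n"
digest_at_source = sha256_hex(original)

# Simulate a network hiccup that truncates the payload in transit.
truncated = original[:-4]

intact_ok = verify_transfer(original, digest_at_source)
truncated_ok = verify_transfer(truncated, digest_at_source)
```

A failed check would mark the object for re-transfer from the golden copy rather than for manual repair.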

The other thing I have to say around cost of data quality is that we are required to store 10 years of data and all of that data was on tape backup. We used Western Digital G-Technology® shuttle technology to also convert that data and bring it from the factories into our ActiveScale. So, now we truly have a “golden copy” of all of the data and we know it’s at the quality that is trusted and usable by anyone who needs access.

And, lastly, I’d just like to wrap up by thinking about three other areas of cost optimization.

4. New Technologies

One is around technology and placing your bets. One thing that we did is we decided to build out an engineering team for our Big Data Platform. [They really were the] innovators around how we optimize on the features of the cloud, as well as the features of all of the software investments that we had made. So, I think understanding the business and the way they need to use these tools, and having that opportunity to really explore and investigate new technologies, that was key.

5. Software Agreements

The second is that, you know, because we were so distributed, we had lots of analytical tools with lots of licenses across many, many countries, negotiated independently, and being able to – now with an enterprise platform – negotiate enterprise agreements for that software, that was key.

6. Scaling Internal Knowledge

And then the last, I would just say, is building up our own expertise. Being able to transition from external consultants into really building out the expertise within our own team was definitely cost-effective, and it’s scalable for us.

Big Data Platform — Lessons Learned

To wrap up, as we’ve been on this journey for six years, we have learned a tremendous amount. We’ve learned about the investments we need to make in the technologies and tools and capabilities, and we’ve learned about the costs of those investments, as well.

We’re constantly looking to the future – how do we scale our environment? And, as we look at scaling, we look at new and emerging technologies, obviously, but we’re also always looking back to say, how do we do it better? How do we optimize the investments that we’ve made and get more and more out of them? So, that’s a definite part of cost within your platform: always be thinking about cost optimization.

How Data is Changing the Face of IT

Join JuneAn Lanigan in a live video discussion with experts in data and learn what you can do to leverage the latest and greatest data management solutions. Save your seat for the Sept. 12 PanelCast hosted by ActualTech Media.