5 Tips for Building a More Efficient Data Center

For years, storage has been viewed as the important but slightly boring cousin of processing power and applications. The performance and value that enterprise NAND devices have brought to the market have changed that drastically. We now have technology that alleviates storage bottlenecks that have plagued the industry for years. In fact, the industry has created so much opportunity for change in the data center that education on what’s possible hasn’t caught up with the improvements we can now provide.

Because the storage landscape is changing so dramatically, we can use NAND to do much more than simply speed up the storage subsystem. We are past simply making storage faster. We can alter the data center infrastructure by removing age-old components such as hard disk drives, creating a data center that takes up a fraction of the space previous generations needed. We can provide solutions with fewer points of failure, or boost the efficiency of existing data centers that in the past would have had to be retired from service or retooled. In other words, if you ever wanted an opportunity to participate in an industry-changing event, you have your chance now!

Let’s talk about 5 things we can do to build a more efficient data center using NAND.

1. Understand Your Workload

One of the biggest mistakes you can make is to build a solution based on wrong assumptions. The most common version of this mistake is building in excessive margin because the workload was misjudged.


In a design built around HDDs, we may add more spindles than needed to cover incorrectly estimated IOPS requirements, wasting purchased capacity. With SSDs, make sure you understand the balance between performance and write endurance. I once helped a customer characterize their workload to decide between a device rated for 10 drive fills per day and one rated for 3 fills per day. The customer actually needed a 0.5 fill-per-day device. Actual workloads may surprise you: by choosing the lower-endurance device, the customer ended up with a solution that cost less than half of what had been planned.
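The endurance math behind that decision is simple enough to sketch. The Python below is a minimal illustration, assuming you have measured the host's daily write volume (for example, from OS or drive statistics). The function names are hypothetical, and the default endurance ratings are just the three from the story above, not a product catalog.

```python
def required_fills_per_day(daily_writes_gb: float, usable_capacity_gb: float) -> float:
    """Drive fills per day that the measured workload actually consumes."""
    if usable_capacity_gb <= 0:
        raise ValueError("usable_capacity_gb must be positive")
    return daily_writes_gb / usable_capacity_gb


def lowest_sufficient_rating(needed: float, ratings=(0.5, 3.0, 10.0)) -> float:
    """Return the smallest endurance rating (fills/day) that still covers the need."""
    for rating in sorted(ratings):
        if rating >= needed:
            return rating
    raise ValueError(f"no rating covers {needed:.2f} fills per day")


# Hypothetical measurement: 700 GB written per day to a 1,600 GB drive.
need = required_fills_per_day(700, 1_600)
print(f"{need:.2f} fills/day -> a {lowest_sufficient_rating(need)} fills/day device is enough")
```

The point is that the rating comes from measured writes rather than a guess; any headroom you add for growth should be sized from that same data.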

2. Understand the “Access Density” Needed and Which Solutions Can Provide It

Access density is IOPS per GB. This metric can mean the difference between short stroking an HDD, using an all-flash array, or using direct-attached flash in the server. It can also affect the type of protection a solution uses (e.g., RAID 10 versus RAID 5) or the type of SSD chosen.
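To make the metric concrete, the sketch below compares a workload's access density with a device's. It is a minimal illustration under simple assumptions: the helper names are hypothetical, and the device figures are invented placeholders for "HDD-like" and "SSD-like" drives rather than specifications of real products.

```python
def access_density(iops: float, capacity_gb: float) -> float:
    """Access density: IOPS per GB."""
    if capacity_gb <= 0:
        raise ValueError("capacity_gb must be positive")
    return iops / capacity_gb


def sizing_driver(workload_iops: float, workload_gb: float,
                  device_iops: float, device_gb: float) -> str:
    """Report which requirement decides how many devices you need.

    If a device's own access density meets the workload's, buying enough
    devices for capacity also covers IOPS ("capacity-bound"). Otherwise extra
    devices must be bought for IOPS and their capacity goes unused
    ("iops-bound"), the situation that leads to short stroking HDDs.
    """
    need = access_density(workload_iops, workload_gb)
    have = access_density(device_iops, device_gb)
    return "capacity-bound" if have >= need else "iops-bound"


# Hypothetical workload: 20,000 IOPS against 4,000 GB (5 IOPS/GB).
print(sizing_driver(20_000, 4_000, device_iops=200, device_gb=4_000))     # HDD-like figures
print(sizing_driver(20_000, 4_000, device_iops=90_000, device_gb=1_920))  # SSD-like figures
```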

Access density can therefore steer the user toward one product over another, as well as shape the data protection mechanisms put in place. Although the all-flash array has the single largest performance number, it isn’t necessarily the right choice for fast applications, because that performance is shared across a large capacity. The ratio of controllers to capacity, together with the parallelism that comes with it, is the most important access density consideration when deciding what to build and why.
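The controller-to-capacity point can be sketched with one formula: delivered IOPS are capped by the controllers or the media, whichever is smaller, and then divided by total capacity. This is a deliberately simplified model (it ignores caching, network limits, and mixed I/O sizes); the function name and every figure in the example are hypothetical.

```python
def system_access_density(controllers: int, iops_per_controller: float,
                          drives: int, iops_per_drive: float,
                          gb_per_drive: float) -> float:
    """IOPS per GB a storage system can deliver.

    Delivered IOPS are limited by whichever is smaller: what the controllers
    can push or what the drives can serve. Capacity grows with every drive,
    so adding drives behind a fixed set of controllers dilutes access density.
    """
    delivered = min(controllers * iops_per_controller, drives * iops_per_drive)
    return delivered / (drives * gb_per_drive)


# Hypothetical all-flash array: 2 controllers in front of 100 x 3,840 GB SSDs.
afa = system_access_density(2, 300_000, 100, 90_000, 3_840)
# Hypothetical server with 4 direct-attached SSDs, each with its own controller.
das = system_access_density(4, 100_000, 4, 100_000, 1_600)
print(f"array: {afa:.2f} IOPS/GB, direct-attached: {das:.2f} IOPS/GB")
```

With these invented numbers the array delivers more total IOPS (600,000 versus 400,000), yet each GB it holds gets far fewer of them, which is the sharing effect described above.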

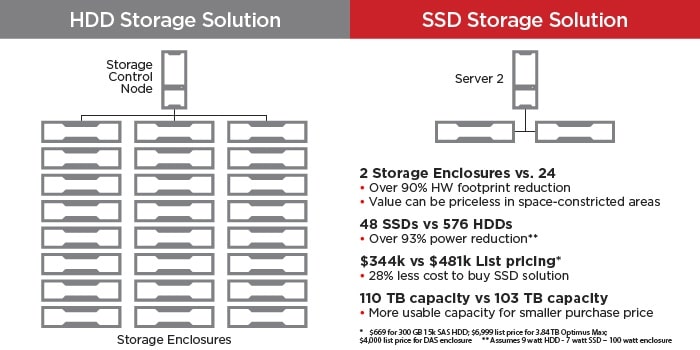

3. Hardware Consolidation is Your Friend!

Low-cost, high-capacity NAND devices allow SSDs to help consolidate data center hardware. Comparing the cost per GB of individual devices is too simplistic. Shrinking the hardware footprint allows enclosures and other support infrastructure to be removed, which changes the “solution cost per GB” far more than device comparisons suggest. Keep tips 1 and 2 in mind and know your workload here: results from this type of consolidation will vary with the ratio of large-block streaming to small-block random access in your data. For example, consider building a 100+ TB dataset with small-block access using SSDs and HDDs:
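A minimal sketch of a solution-level comparison follows: size each option for both capacity and IOPS, then add the enclosures needed to house the drives. The `DriveOption` type and `solution_cost` helper are hypothetical, and all prices and performance figures are made up for illustration; other infrastructure (power, rack space, switches) is left out.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class DriveOption:
    name: str
    capacity_gb: float
    iops: float
    drive_price: float
    drives_per_enclosure: int
    enclosure_price: float


def solution_cost(opt: DriveOption, need_gb: float, need_iops: float) -> dict:
    """Size a solution for both capacity and IOPS, then cost it including enclosures."""
    drives = max(math.ceil(need_gb / opt.capacity_gb),
                 math.ceil(need_iops / opt.iops))
    enclosures = math.ceil(drives / opt.drives_per_enclosure)
    total = drives * opt.drive_price + enclosures * opt.enclosure_price
    return {"drives": drives, "enclosures": enclosures,
            "total": total, "cost_per_gb": total / need_gb}


# All prices and performance figures below are invented for illustration.
hdd = DriveOption("HDD", 4_000, 200, 300, 24, 5_000)
ssd = DriveOption("SSD", 3_840, 90_000, 2_500, 24, 5_000)
for opt in (hdd, ssd):
    print(opt.name, solution_cost(opt, need_gb=100_000, need_iops=50_000))
```

With these inputs the HDD option is sized by IOPS rather than capacity, so it needs ten times the drives its capacity alone would require, plus the enclosures to hold them.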

4. Don’t Get Lost In the Components of the Data Center

Think of the data center as a Norton’s or Thevenin’s equivalent circuit! It doesn’t matter what components you use as long as you get the proper responses to the given inputs. This allows for a complete reimagining of the data center. Use components that allow for simpler solutions.
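In the spirit of the equivalent-circuit analogy, a design can be judged purely by its external responses. The sketch below is one hypothetical way to express that: filter candidate designs by whether they meet the required capacity, IOPS, and throughput, then prefer the one with the fewest components as a rough proxy for simplicity. The `Design` type, the component-count proxy, and the example figures are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Design:
    name: str
    capacity_gb: float
    iops: float
    throughput_mb_s: float
    component_count: int  # drives, enclosures, controllers, switches, ...


def simplest_equivalent(designs: Sequence[Design], need_gb: float,
                        need_iops: float, need_mb_s: float) -> Optional[Design]:
    """Treat each design as a black box: keep those whose external responses
    meet the requirements, then prefer the one with the fewest components."""
    ok = [d for d in designs
          if d.capacity_gb >= need_gb and d.iops >= need_iops
          and d.throughput_mb_s >= need_mb_s]
    return min(ok, key=lambda d: d.component_count) if ok else None


# Hypothetical candidate designs.
designs = [
    Design("HDD DAS", 1_000_000, 50_000, 3_000, 261),
    Design("SSD DAS", 103_680, 2_430_000, 10_000, 29),
]
best = simplest_equivalent(designs, need_gb=100_000, need_iops=50_000, need_mb_s=2_000)
print(best.name if best else "no design meets the requirements")
```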

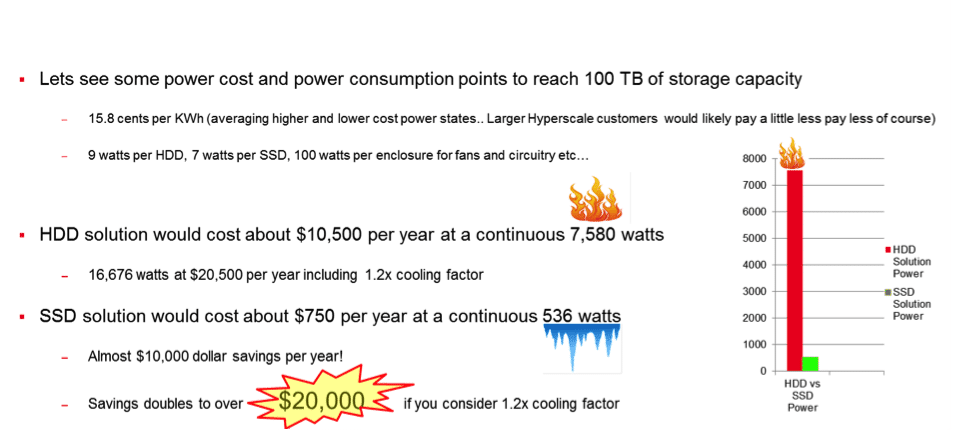

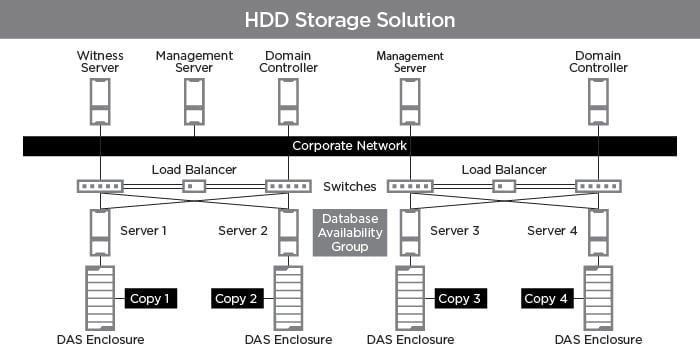

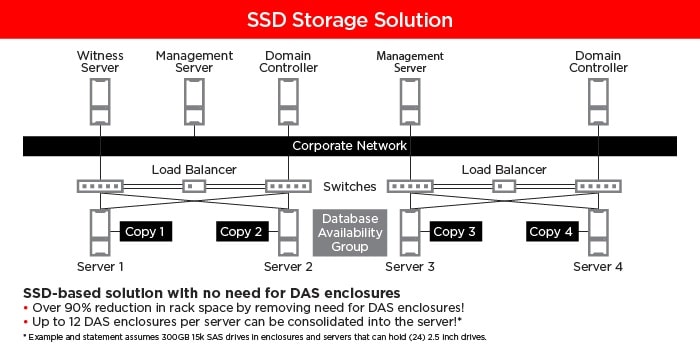

Let’s look at this example:

Clearly, the SSD solution provides the needed capacity and performance at a similar price, while also delivering large operating cost savings!

This example reduces DAS enclosures by replacing HDDs with high capacity SSDs for a better, simpler solution!
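Operating cost is where consolidation keeps paying off. One rough way to compare designs is energy cost: drive and enclosure power draw, multiplied by hours per year and electricity price. The sketch below assumes constant power draw and uses an optional PUE (power usage effectiveness) multiplier for cooling overhead; the helper names, wattages, and prices are invented, and other operating costs such as rack space and maintenance are left out.

```python
HOURS_PER_YEAR = 24 * 365


def solution_watts(drives: int, watts_per_drive: float,
                   enclosures: int, watts_per_enclosure: float) -> float:
    """Steady-state power draw of the storage hardware."""
    return drives * watts_per_drive + enclosures * watts_per_enclosure


def annual_energy_cost(watts: float, price_per_kwh: float, pue: float = 1.0) -> float:
    """Yearly electricity cost for a constant load.

    pue scales the IT load to include cooling and power-distribution
    overhead; the default of 1.0 ignores that overhead.
    """
    return watts / 1000 * HOURS_PER_YEAR * price_per_kwh * pue


# Hypothetical wattages and electricity price, for illustration only.
hdd_w = solution_watts(250, 8, 11, 150)
ssd_w = solution_watts(27, 10, 2, 150)
for name, w in (("HDD", hdd_w), ("SSD", ssd_w)):
    print(f"{name}: {w:.0f} W, ${annual_energy_cost(w, 0.10, pue=1.8):,.0f}/year")
```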

5. Question Everything

Don’t simply build “the same as last time”. Question everything. Cost, capacity, level of protection, and performance all affect what you build. HDD solutions typically use RAID 10 because HDDs don’t provide enough raw performance to absorb RAID 5 parity overhead. The performance margin of SSDs may allow the user to move to a more capacity-efficient RAID 5 solution.
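The RAID 10 versus RAID 5 trade-off can be sketched with the classic write-penalty model: each host write costs 2 back-end I/Os on RAID 10 (two mirror copies) and 4 on RAID 5 (read data, read parity, write data, write parity). This is a simplified model that ignores controller write caching and full-stripe writes; the helper names and drive figures are hypothetical.

```python
WRITE_PENALTY = {"raid10": 2, "raid5": 4}  # back-end I/Os per host write


def usable_gb(level: str, drives: int, gb_per_drive: float) -> float:
    """Usable capacity after mirroring (RAID 10) or one drive of parity (RAID 5)."""
    if level == "raid10":
        if drives < 4 or drives % 2:
            raise ValueError("RAID 10 needs an even number of drives, at least 4")
        return drives // 2 * gb_per_drive
    if level == "raid5":
        if drives < 3:
            raise ValueError("RAID 5 needs at least 3 drives")
        return (drives - 1) * gb_per_drive
    raise ValueError(f"unknown level {level!r}")


def host_iops(level: str, raw_iops: float, write_fraction: float) -> float:
    """Host-visible IOPS from raw back-end IOPS under the write-penalty model."""
    if not 0.0 <= write_fraction <= 1.0:
        raise ValueError("write_fraction must be between 0 and 1")
    penalty = WRITE_PENALTY[level]
    return raw_iops / ((1 - write_fraction) + write_fraction * penalty)


# Hypothetical 8-drive group, 30% writes: HDD-like vs SSD-like raw IOPS.
for level in ("raid10", "raid5"):
    print(level, usable_gb(level, 8, 3_840), "GB usable,",
          round(host_iops(level, 8 * 200, 0.3)), "host IOPS on HDDs,",
          round(host_iops(level, 8 * 90_000, 0.3)), "on SSDs")
```

RAID 5 gives more usable capacity from the same drives but fewer host IOPS; with SSD-class raw IOPS, the lower figure may still exceed what the workload needs.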

An application may have no need for SSD-level performance in normal operation but can benefit greatly from it during failure scenarios. SSDs have performance margin to spare and may be able to handle rebuild operations and the application’s needs simultaneously. Users can now reach such high capacity points per server that they may be able to remove the more costly construct of a SAN altogether. Once again, know your workload before deciding.
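Two quick checks capture the failure-scenario argument: how long a rebuild takes at a sustained rate, and whether the device has enough headroom to serve the application and the rebuild at the same time. The sketch below is a lower-bound estimate under simple assumptions (decimal units, a constant rebuild rate); the helper names and all rates are hypothetical, and real rebuilds compete with foreground I/O, which is exactly what the headroom check is about.

```python
def rebuild_hours(drive_gb: float, rebuild_mb_per_s: float) -> float:
    """Lower-bound rebuild time: rewrite one drive's capacity at a sustained rate."""
    if rebuild_mb_per_s <= 0:
        raise ValueError("rebuild_mb_per_s must be positive")
    return drive_gb * 1000 / rebuild_mb_per_s / 3600


def can_rebuild_without_impact(device_iops: float, app_iops: float,
                               rebuild_iops: float) -> bool:
    """True if the device can serve the application and the rebuild at once."""
    return app_iops + rebuild_iops <= device_iops


# Hypothetical rates: an HDD rebuilding at 100 MB/s, an SSD at 1,000 MB/s.
print(f"HDD: {rebuild_hours(4_000, 100):.1f} h, SSD: {rebuild_hours(3_840, 1_000):.1f} h")
print(can_rebuild_without_impact(device_iops=90_000, app_iops=20_000, rebuild_iops=30_000))
```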

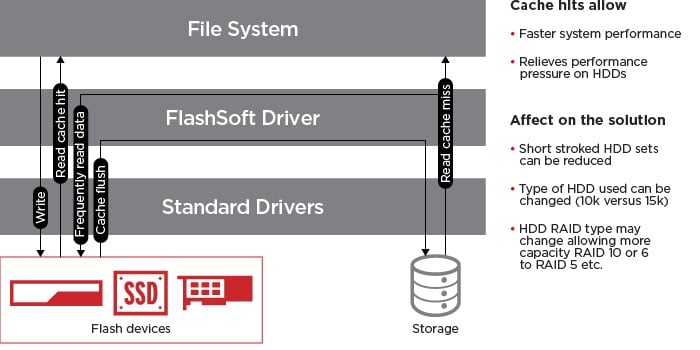

When replacing a SAN, another question to ask is why you are replacing it. If the reason is a performance limitation, adding a flash component may extend its life.

Older storage arrays can stay in service, and new arrays can be built with lower-cost drives boosted by SSDs used as a cache or tier, either inside the SAN or inside the attached server.
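Whether a flash cache or tier can extend an array's life comes down to two numbers: the fraction of I/O the flash absorbs and the latency of each layer. The sketch below uses the standard weighted-average model for reads only; the hit ratio and latencies are assumptions you would replace with measurements, the helper names are hypothetical, and write caching behaves differently.

```python
def effective_latency_ms(hit_ratio: float, cache_ms: float, backend_ms: float) -> float:
    """Average read latency when a fraction of reads is served from an SSD cache."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return hit_ratio * cache_ms + (1 - hit_ratio) * backend_ms


def backend_read_iops(total_read_iops: float, hit_ratio: float) -> float:
    """Read IOPS that still reach the older array (only the cache misses)."""
    return total_read_iops * (1 - hit_ratio)


# Hypothetical figures: 0.1 ms SSD cache, 8 ms HDD-based array, 90% hit ratio.
print(f"{effective_latency_ms(0.9, 0.1, 8.0):.2f} ms average, "
      f"{backend_read_iops(10_000, 0.9):.0f} read IOPS left for the array")
```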

Ready to learn more? Have some questions? Register for our free webinar:

5 Tips for Building a More Efficient Data Center

Oct 21 2015 10:00 a.m. PDT

