Flash and Containers? A Look at Docker Technology

The industry is buzzing about containers! I thought it was time to take a closer look at them and share how I see flash improving storage performance and contributing to containers’ success.

The first technology I looked at was Docker. What is Docker? “Docker allows you to package an application with all of its dependencies into a standardized unit for software development.” (Read the full introduction on docker.com/whatisdocker)

As I see it, Docker is all about containers that share the kernel of the base operating system and carve out a small runtime environment for a particular application. Unlike virtual machines, where each application runs on its own guest operating system, multiple containers share a single operating system and run different applications on top of it. Each container packages only the libraries and binaries its application needs rather than a full OS. It’s important to understand that these containers are not an OS but just a runtime environment that helps run the application.

Containers vs. Virtual Machines

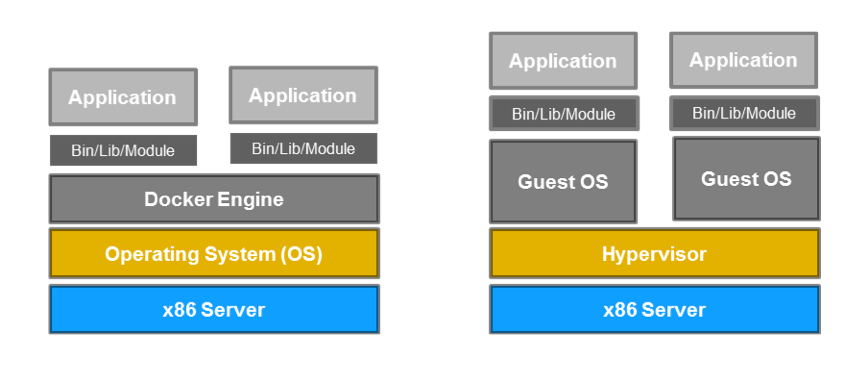

At a high level, here is how a Docker environment looks in comparison to a virtual machine:

Wait! Does this mean a technology such as Docker needs to run on bare metal on a single server? Not necessarily. It could run in a hypervisor environment and scale out to many servers. For simplicity, I used this diagram to show how the application is stacked in either case.

At a very high level, they look the same. The key difference is the footprint, though I would add ease of management as well. Containers are many times smaller than a full guest OS and, for that matter, smaller than the JeOS (Just Enough Operating System) images used for appliances.

Though the containers all run on one shared host operating system, they have properties such as encapsulation and isolation that a traditional VM carries. Such properties and capabilities are enabled by the Docker Engine. These features, alongside a smaller footprint, make containers both interesting and promising from the perspective of enterprise application deployment.

Data Storage and Enterprise Application Needs

From my understanding, data written inside a container's own writable layer is lost by default once the container is deleted. With Docker volumes, however, the data lives outside the container's life cycle, which means that even though the container is deleted, the data persists and can be reused later or shared with other containers. This makes containers more promising and ready for real use cases.

Additionally, I see containers’ storage requirements as similar to those of VDI. Don’t misquote me here: I understand the I/O demand of Docker workloads could be far different from what VDI requires, but the two could also be similar in some use cases. Let me try to explain why I see these as similar workload types.

Docker containers are more like the linked clone images of VDI. As we increase the number of desktops in VDI, each new linked clone is created from the replica or master image. Docker container behavior is the same in that regard. Each time a new container is spawned, it needs the pieces of the image specific to its application in order to create its own small runtime environment.
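To make the linked clone comparison a bit more concrete, here is a toy Python model. It is only a sketch: `BASE_IMAGE`, `spawn_container`, and the file paths are names I made up for illustration, and a plain dictionary stands in for a real image. The idea it shows is that every container reads from one shared, read-only base and keeps only its own changes in a private layer.

```python
from collections import ChainMap

# Hypothetical read-only base image: path -> content, shared by every container.
BASE_IMAGE = {"/bin/sh": "shell binary", "/lib/libc.so": "C library"}


def spawn_container():
    """Return a container view: a private writable layer over the shared base."""
    writable_layer = {}
    # Reads fall through to BASE_IMAGE; writes land only in writable_layer.
    return ChainMap(writable_layer, BASE_IMAGE)


web = spawn_container()
db = spawn_container()

web["/app/config"] = "web settings"        # stored only in web's own layer
db["/lib/libc.so"] = "patched C library"   # shadows the base copy for db only

print(web["/lib/libc.so"])   # served from the shared base
print(db["/lib/libc.so"])    # served from db's private layer
print(len(web.maps[0]), len(db.maps[0]), len(BASE_IMAGE))  # size of each layer
```

Each container stores only its delta, while every read it has not overridden goes back to the same base. That shared base is where the read pressure concentrates as the number of containers grows.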

What Does It Mean From A Storage Perspective?

Very high read IOPS are required as we spawn more and more containers. This is much like the boot storm scenario in VDI. I am drawing this analogy so that I can compare the behavior of this technology to an existing, well-understood one.

Flash is a key enabler for solving boot storm bottlenecks in VDI. If you’re not familiar with how flash can benefit there, take a moment to read this blog. By the same reasoning, I expect the provisioning of containers to fall into the same category. Each time a container is created, it reads from the master image to get the pieces of code required to create the runtime environment for that application. As you create more and more containers simultaneously, the master image has to serve all the reads generated by those containers. Hence the VDI boot storm analogy.
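A quick back-of-the-envelope calculation shows how this adds up. The Python sketch below is my own illustration, not a measurement: the function name `provisioning_read_iops`, the 2,000 reads per container, and the 10-second window are all assumed values, and the model ignores caching entirely.

```python
def provisioning_read_iops(containers, reads_per_container, window_seconds):
    """Aggregate read IOPS the master image must serve during a spawn burst."""
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    return containers * reads_per_container / window_seconds


# All figures below are hypothetical, chosen only to show the scaling.
READS_PER_CONTAINER = 2_000   # assumed reads needed to build one runtime
WINDOW_SECONDS = 10           # assumed time budget for the whole burst

for count in (10, 100, 1_000):
    iops = provisioning_read_iops(count, READS_PER_CONTAINER, WINDOW_SECONDS)
    print(f"{count:>5} containers -> {iops:,.0f} read IOPS")
```

The demand grows linearly with the number of containers spawned in the same window, which is exactly the shape of a boot storm.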

Applications Running inside Containers

Another place I see flash as an enabler is steady state, when a particular workload is running inside a container. Each container will have its own application and its own I/O needs. Because the storage layer sees the combined requests of all those applications, the resulting pattern is largely random I/O. As you know, flash storage shines when it comes to random I/O, so I see flash as the answer here, too.
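One way to picture why the storage layer ends up seeing random I/O is the small Python simulation below. It is a sketch under assumptions of my own: eight containers, each reading 100 blocks sequentially from its own region, with requests arriving at shared storage in round-robin order. The helper names `sequential_fraction`, `container_stream`, and `blend` are hypothetical.

```python
def sequential_fraction(requests):
    """Share of requests that hit the block right after the previous one."""
    if len(requests) < 2:
        return 1.0
    hits = sum(1 for prev, cur in zip(requests, requests[1:]) if cur == prev + 1)
    return hits / (len(requests) - 1)


def container_stream(start_block, length):
    """One container reading its own region sequentially (hypothetical layout)."""
    return list(range(start_block, start_block + length))


def blend(streams):
    """Interleave streams round-robin, as shared storage might see them."""
    return [block for group in zip(*streams) for block in group]


# Hypothetical setup: 8 containers, each reading 100 blocks from its own region.
streams = [container_stream(i * 10_000, 100) for i in range(8)]

print(sequential_fraction(streams[0]))      # one container on its own
print(sequential_fraction(blend(streams)))  # all eight mixed together
```

Even when each container behaves perfectly sequentially on its own, the mixed stream that reaches the storage layer in this model has no sequential runs left. Real workloads will sit somewhere between the two extremes.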

Patching Containers

Finally, going back to my VDI analogy, each of these containers will require updates during its life cycle. This closely resembles a VDI desktop recompose, which generates an intense mix of reads and writes. I still need to learn more about container patching, but my guess is that flash would be the answer here as well.

Moving Forward

Users care about their applications, and in the era of cloud more and more applications will be created and deployed in containers. Be it a Docker container or any other container technology, such as those from Microsoft or VMware, or other micro/mini servers, the storage behavior would be more or less the same. Without flash storage, successful provisioning and steady-state use of containers at scale seems doubtful.

Furthermore, these deployments will not be as simple as I have described above. The scale-out and distributed nature of applications and storage will demand more capable storage moving forward. Software Defined Storage (SDS) is the next generation of storage, and as I often mention on this blog, flash is at the heart of SDS.

What are your experiences with containers and storage demands? Let me know in the comments below.