NVMe-oF™: Will Fabrics Change SSDs?

The proliferation of NVMe™ devices in the data center has catalyzed the adoption of NVMe over Fabrics (NVMe-oF™). Yet as NVMe-oF matures, it may require us to go back and rethink how we architect the integration of fabrics and SSDs.

NVMe — Current State of Affairs

As NVMe penetration across public and private cloud infrastructure increases, it is helping data center architectures shift from scale-up systems to commodity scale-out systems, opening up new paradigms of distributed computing. As NVMe storage specifications and features evolve further, they put a spotlight on system efficiency, scalability, and utilization challenges. This has opened the door for a newer technology: NVMe over Fabrics, or NVMe-oF. Here’s why, and what it could mean for underlying architectures.

Current System Architecture Challenges

Data creation and consumption are constantly increasing in the world around us. The challenges brought by the growth of data, together with the growing complexity of applications, make where, when, and how data is stored and processed critically important.

Traditional IT infrastructure is mostly static and sized for extreme workload situations, not necessarily for day-to-day workloads. A good design therefore provisions hardware for future growth. However, this usually means growing the entire infrastructure: when more compute power is needed and added, for example, storage capacity, network bandwidth, and memory often grow with it, whether they are needed or not.

These systems, built with the long-standing scale-up approach, often remain underutilized: provisioned hardware is not fully in use, and some systems sit idle much of the time.
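
The coupled growth described above can be made concrete with a little arithmetic. The minimal Python sketch below assumes a hypothetical server building block (32 cores, 256 GB of memory, 16 TB of storage) and a hypothetical storage-heavy workload; the figures are illustrative only and do not describe any real product. Because every node adds all three resources at once, meeting the storage demand forces the purchase of compute and memory that then sits mostly idle.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    cores: int
    memory_gb: int
    storage_tb: int


# Hypothetical building block: every node adds all three resources at once.
NODE = Resources(cores=32, memory_gb=256, storage_tb=16)


def nodes_needed(demand: Resources, node: Resources = NODE) -> int:
    """Coupled scaling: add whole nodes until every resource demand is met."""
    return max(
        math.ceil(demand.cores / node.cores),
        math.ceil(demand.memory_gb / node.memory_gb),
        math.ceil(demand.storage_tb / node.storage_tb),
    )


def utilization(demand: Resources, node: Resources = NODE) -> dict:
    """Fraction of each provisioned resource that the demand actually uses."""
    n = nodes_needed(demand, node)
    return {
        "nodes": n,
        "cores": demand.cores / (n * node.cores),
        "memory": demand.memory_gb / (n * node.memory_gb),
        "storage": demand.storage_tb / (n * node.storage_tb),
    }


# Hypothetical storage-heavy workload: modest compute, lots of capacity.
demand = Resources(cores=64, memory_gb=512, storage_tb=160)
print(utilization(demand))
# {'nodes': 10, 'cores': 0.2, 'memory': 0.2, 'storage': 1.0}
```

In this made-up scenario, storage drives the node count to ten, leaving 80% of the provisioned cores and memory unused. That stranded capacity is exactly what independent scaling aims to avoid.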

Now You’re in, Now You’re Out — How Architectures Evolve

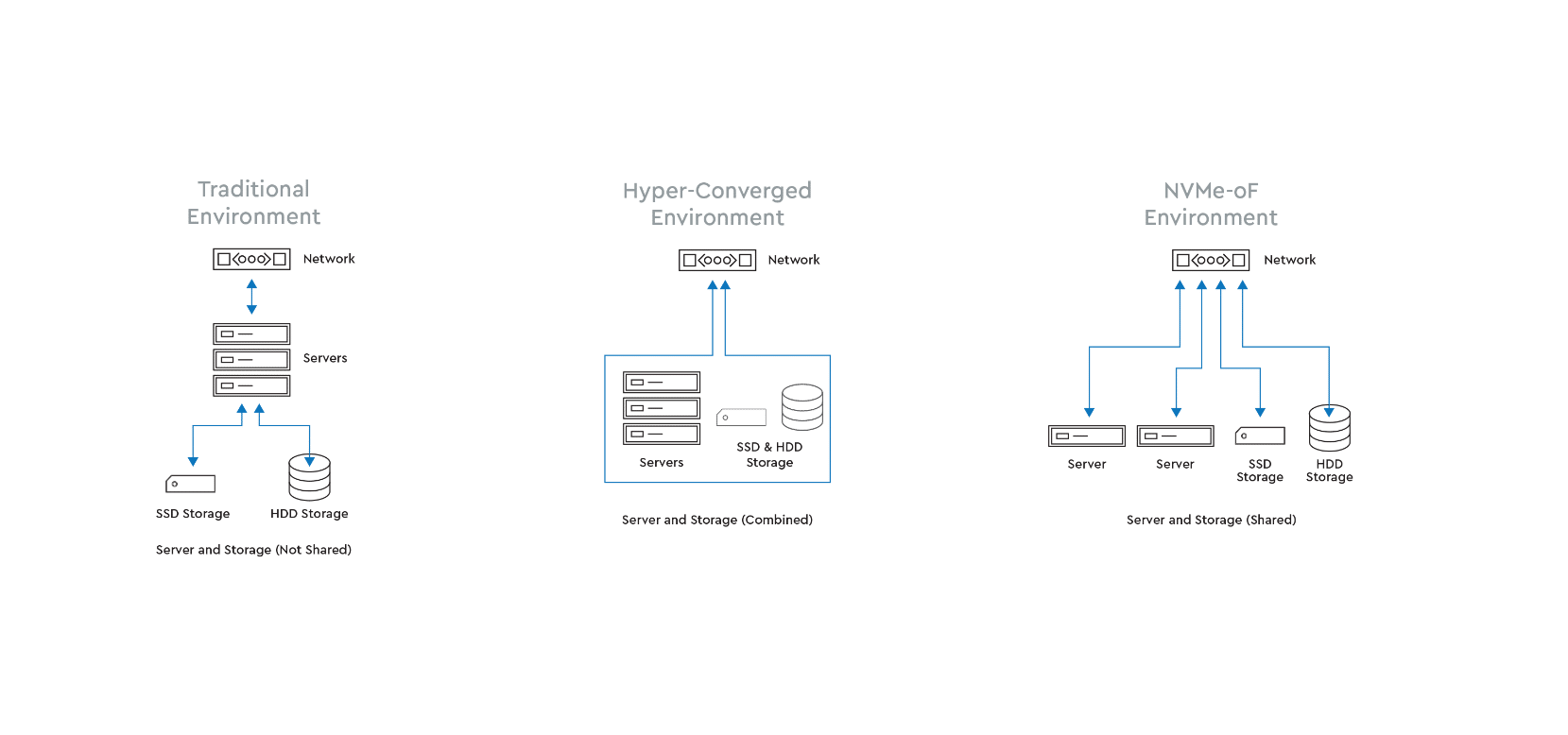

Today, scale-out systems scale compute, memory, and storage simultaneously; that is, they are all added together. Virtualized systems allow elastic pooling and partitioning of resources, while hyper-converged infrastructure (HCI) platforms have gone the extra mile and converted shared-nothing DAS systems into shared-everything architectures (Figure #1). While all of this has increased flexibility and elasticity, it still does not address independent scaling of resources.

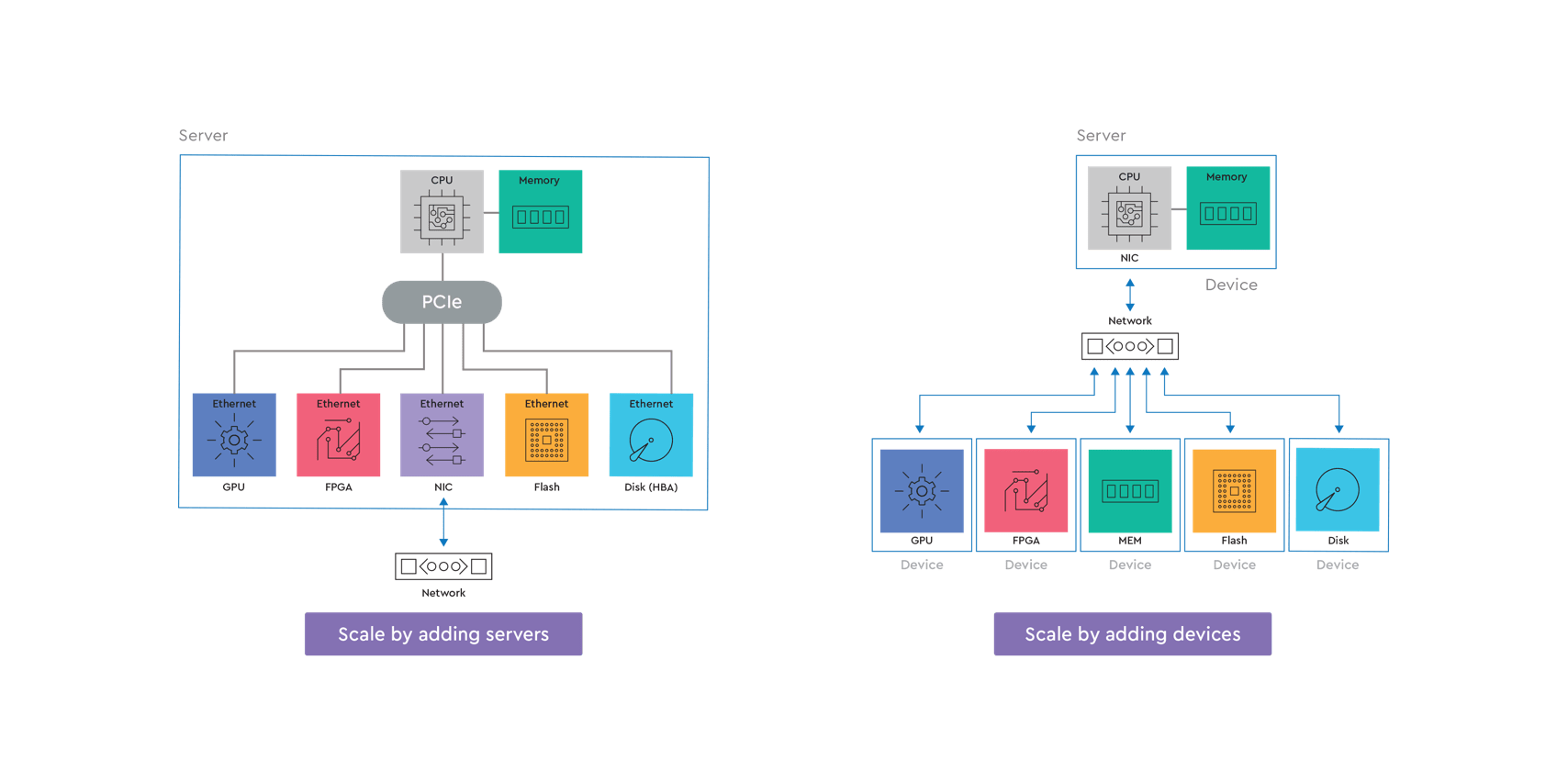

As we look toward building more efficient architectures to handle data growth and complexity, we need more agile and scalable data infrastructure. Much like hyperscale data centers, future cloud and enterprise data centers will need independent scaling of three important elements: compute, memory, and storage.

Why do Fabrics Matter?

To enable independent scaling, systems need to separate compute, memory, and storage and connect them over a network. However, to preserve performance, this network needs to be lean, without large overheads such as extra network hops or protocol latency.
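
Why leanness matters can be seen with a simple latency budget. The Python sketch below is a minimal model, assuming that end-to-end latency is just the SSD's own latency plus a fixed cost per network hop plus a protocol overhead; all parameter names and numbers are hypothetical and are not measurements of any real fabric or device. It reports how much of the total latency the fabric itself contributes.

```python
def fabric_share(device_us: float, hop_us: float, hops: int, protocol_us: float) -> float:
    """Return the fraction of end-to-end latency added by the fabric.

    device_us: latency of the SSD itself (hypothetical figure)
    hop_us: latency added per network hop (hypothetical figure)
    hops: number of switch/network hops on the path
    protocol_us: protocol processing overhead (hypothetical figure)
    """
    fabric_us = hops * hop_us + protocol_us
    return fabric_us / (device_us + fabric_us)


# Illustrative numbers only; they are not measurements of any real system.
lean = fabric_share(device_us=90.0, hop_us=2.0, hops=2, protocol_us=6.0)
heavy = fabric_share(device_us=90.0, hop_us=2.0, hops=5, protocol_us=30.0)
print(f"lean fabric: {lean:.0%}, heavy fabric: {heavy:.0%}")
# lean fabric: 10%, heavy fabric: 31%
```

Under these assumed figures, adding hops and protocol work triples the fabric's share of total latency, eroding the DAS-like performance that disaggregation is supposed to preserve.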

The concept of fabrics is not new in the world of storage systems. We have SCSI over Ethernet (iSCSI) and SCSI over Fibre Channel, which gained popularity because of their higher speed options and lossless connections. With NVMe-oF, however, new disaggregated systems can decouple compute from storage while delivering DAS-like performance and shared data access with massive parallelism. Fabric-connected disaggregated systems can also enhance system composability: the ability to quickly reconfigure and deploy hardware resources through software orchestration whenever needed.
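
To illustrate composability, the Python sketch below models disaggregated compute, memory, and storage as separate shared pools from which software carves out logical systems and later returns the resources. It is a toy model under stated assumptions: the `Pool` and `Composer` classes, the resource names, and all capacities are hypothetical and do not correspond to any real orchestration API. It shows two properties the paragraph above relies on: each resource type can grow on its own, and a composed system can be reconfigured or released without touching physical servers.

```python
from dataclasses import dataclass, field


@dataclass
class Pool:
    """A disaggregated pool of one resource type (hypothetical model)."""
    name: str
    capacity: int
    allocated: int = 0

    def reserve(self, amount: int) -> None:
        if amount > self.capacity - self.allocated:
            raise RuntimeError(f"{self.name} pool exhausted")
        self.allocated += amount

    def release(self, amount: int) -> None:
        self.allocated -= amount


@dataclass
class Composer:
    """Toy orchestrator that carves logical systems out of shared pools."""
    pools: dict
    systems: dict = field(default_factory=dict)

    def compose(self, system_id: str, **request: int) -> None:
        unknown = set(request) - set(self.pools)
        if unknown:
            raise KeyError(f"unknown resource types: {sorted(unknown)}")
        reserved = []
        try:
            for kind, amount in request.items():
                self.pools[kind].reserve(amount)
                reserved.append((kind, amount))
        except RuntimeError:
            # All-or-nothing: roll back partial reservations on failure.
            for kind, amount in reserved:
                self.pools[kind].release(amount)
            raise
        self.systems[system_id] = request

    def decompose(self, system_id: str) -> None:
        """Return a system's resources to the pools for reuse."""
        for kind, amount in self.systems.pop(system_id).items():
            self.pools[kind].release(amount)


composer = Composer(pools={
    "cores": Pool("cores", 256),
    "memory_gb": Pool("memory_gb", 2048),
    "storage_tb": Pool("storage_tb", 400),
})
composer.compose("analytics", cores=16, memory_gb=128, storage_tb=160)
composer.compose("web", cores=64, memory_gb=256, storage_tb=2)
# Storage scales on its own: grow the shared pool without adding any cores.
composer.pools["storage_tb"].capacity += 100
print({k: (p.allocated, p.capacity) for k, p in composer.pools.items()})
```

The all-or-nothing reservation in `compose` is one reasonable design choice for such a sketch: a request that cannot be fully satisfied leaves the pools exactly as they were, so a failed deployment never strands partially allocated resources.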

NVMe + Fabrics – The Next Step in the NVMe-oF Evolution

As systems aim to deliver both high performance and independently scalable, elastic, and flexible disaggregated architectures, many hardware and network configurations are possible.

In such systems, NVMe serves as the protocol both between the storage controller and the flash media, and between the host and the storage controller across the fabric. The question is how best to implement this.

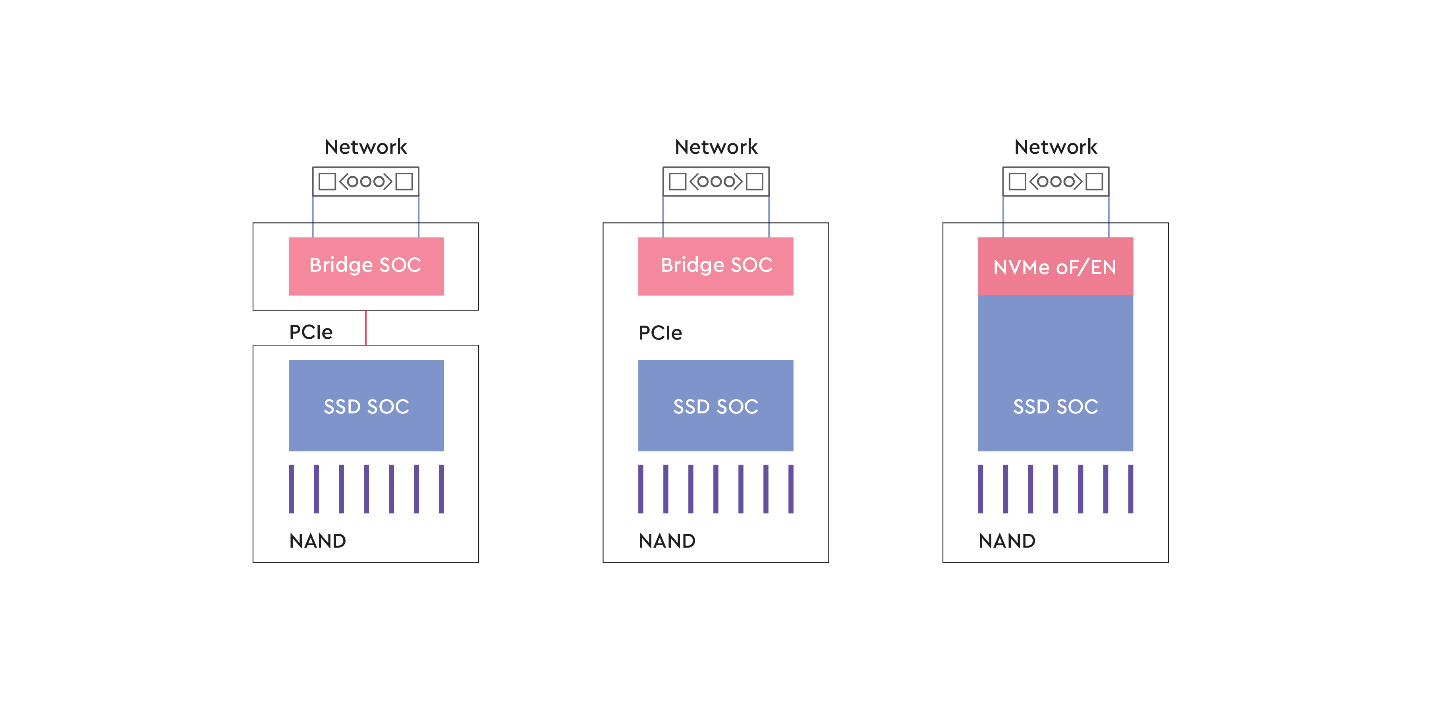

One way is to add fabrics connectivity to the storage devices themselves (Figure #3). NVMe SSDs connected directly to fabrics such as PCIe, Ethernet, or InfiniBand can support low-latency protocols and open up a world of new disaggregated systems. However, this raises interesting technology questions:

- Which fabrics and protocols to choose?

- What does the external integration of fabrics and SSDs look like?

- Will we see the rise of native fabrics-embedded SSD controllers?

As we look at different controller options, we need to consider the role of SSDs and examine both capacities and the underlying storage media. For example, should fabrics-enabled SSDs be introduced? If so, will they require extra processing capabilities, such as protocol conversion and flow control, in the fabric or within the SSD controllers? What does this mean for host software, data protection, and storage management?

As we explore these questions, we should also look for new architectural efficiencies, such as offloading some compute tasks from the system CPU to other available cores. How we integrate fabrics and cores with storage devices may open up a new paradigm of computing over NVMe-oF.

How Can You Enable NVMe-oF?

In my next blog, I will discuss how and when to enable native fabrics controllers, and how to design an independently scalable, elastic, and flexible disaggregated composable infrastructure.

Helpful resources:

- Blog: 5 Reasons it’s Time to Consider NVMe-oF Today

- Case study: How Scientists Use NVMe-oF to Accelerate Research

- Ecosystem: The Open Composable Compatibility Lab

- Stream on demand: The Next Data Fabric: NVMe-oF™