How a New Approach to Storage Server Cooling Can Save You Money

It’s the life sign of the data center. You hear it as soon as you walk in: the (extremely loud) sound of fans sucking cooling air into the equipment and blowing it back out. Cooling may not be the most glamorous subject in IT, but it is critical to data center design, operational cost and hardware reliability. What if there were a way to improve storage enclosure and storage server cooling, to reduce the energy consumption… and to reduce all that noise?

Rethinking Storage Server Cooling

As I shared in my previous post, we have been looking at storage enclosure and storage server design in a new way. The breadth of the Western Digital storage portfolio and our level of vertical integration (from the raw silicon and heads and disks, through the storage device and all the way to enclosures and systems) gives us unique insight into how every level of the system and components can be tuned to improve our products overall.

“We came up with innovation that approaches storage server cooling design very differently”

We could see our customers struggling with the challenges and the associated costs of cooling. Applying this approach to the relatively unglamorous subject of storage enclosure design allowed us to come up with real innovation that approaches cooling design very differently. This new design, called ArcticFlow™, improves cooling and reliability, reduces energy consumption and delivers real cost savings. Let’s take a look at how it works.

Hot and Cold Aisle – How Storage Server Cooling Works Today

Data centers typically alternate cold and hot aisles. Cool air flows from the cold aisle through the racks to cool the equipment. The warmed air then exits into the hot aisle, where it is ducted away for chilling.

The most efficient data centers use very dense racks to improve the workload-to-rack ratio. Packing more equipment into the enclosures has challenges, especially for cooling. Cramming more devices into a box makes it harder to get sufficient cooling air over them to maintain ideal operating temperatures.

For example, a deeper enclosure (front to back) allows extra rows of drives to be added. But while the air drawn in from the cold aisle effectively cools the front few rows, it is already warm by the time it reaches the back rows. With this type of design the fans have to run harder (and louder). There may even be a need for additional fans, or blowers, on the front to push more air in. And the drives at the back still get hot.

Introducing ArcticFlow

Our Silicon to Systems Design approach helped us think differently about this problem. Here’s how one of our storage platforms puts that thinking into practice:

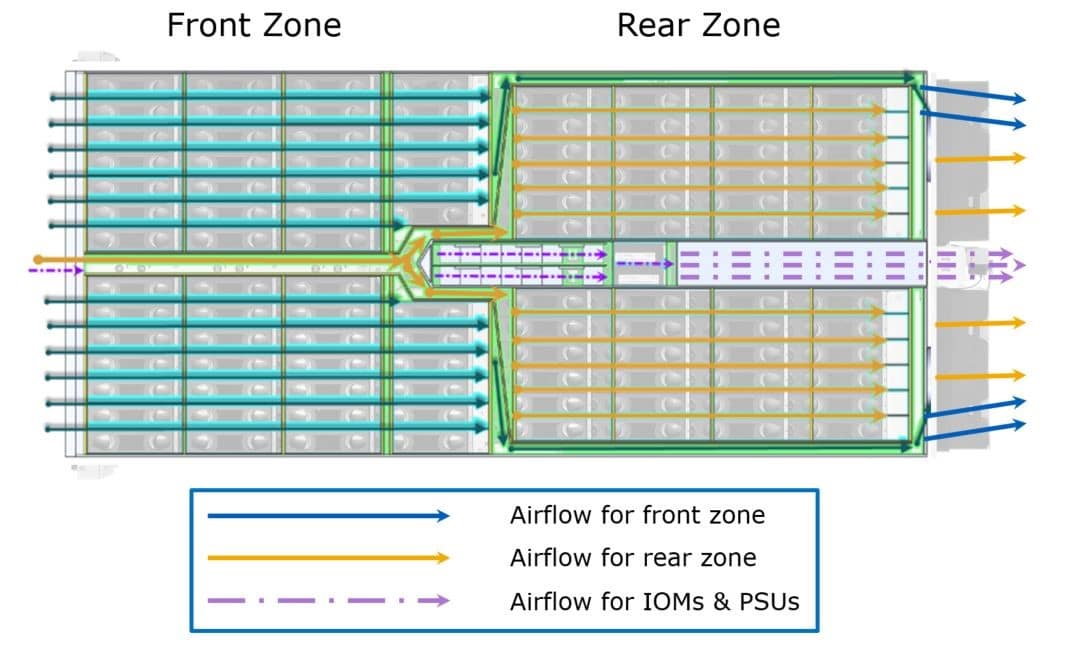

The Ultrastar® Data102 Hybrid Storage Platform. This platform has eight rows of drives, which we divided into two zones of four rows each. As in a traditional design, cool air is drawn into the front zone from the cold aisle. But instead of being drawn over the rear zone, that air is ducted around the sides of the enclosure and exhausted to the hot aisle at the rear.

As you’ll see in the image above, a cool air tunnel brings cold air directly into the center of the enclosure to cool the rear zone. We actually give up a few drives’ worth of space to create this, but it’s well worth it for the benefits.

Changing the Cost and Reliability Equation

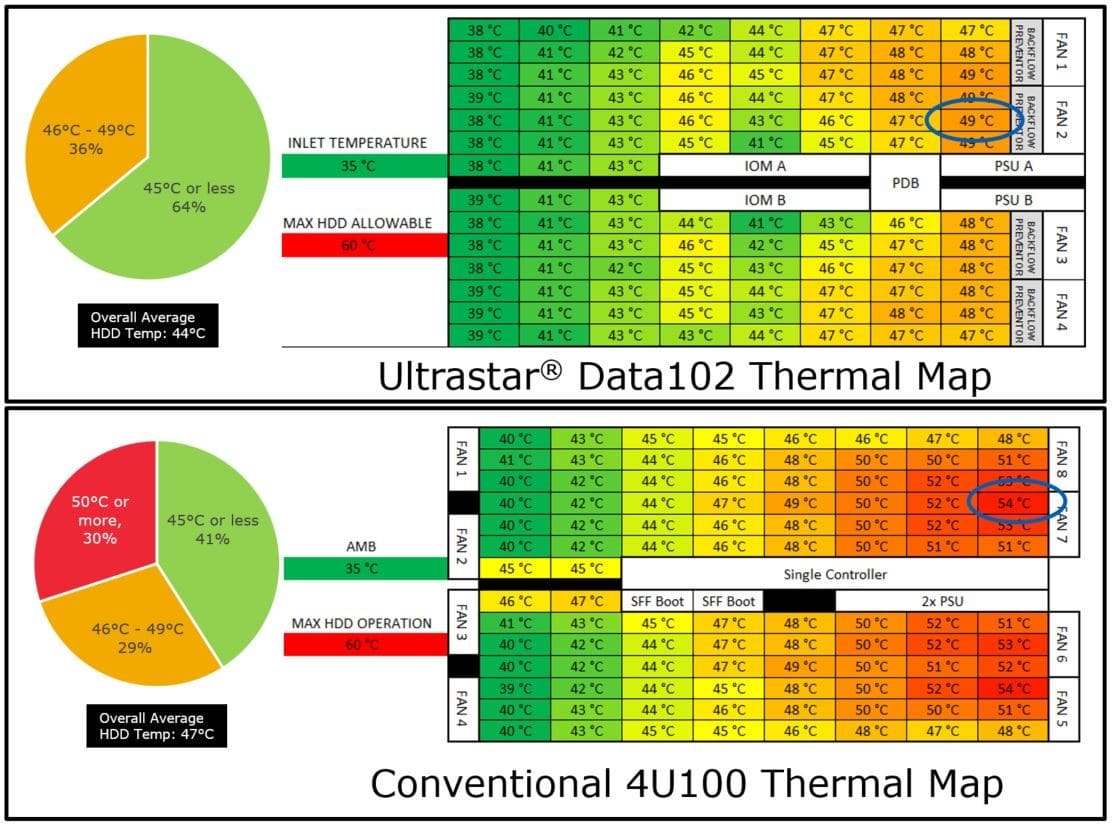

Competitive testing showed a significant difference in the thermal profile inside the enclosure: 30% of the drives in the Ultrastar Data102 ran an average of 13°C cooler than the same drives in a comparable enclosure. Even when they are running within their specified temperature range, disk drives and SSDs (like most electronic devices) are generally more reliable when operating in the lower part of that range.

Under an Arrhenius model for reliability de-rating, the 30% of drives that run at elevated temperatures in a comparable enclosure are 44% more likely to fail. Averaged across all the drives in the box (0.30 × 0.44 ≈ 0.13), the comparable enclosure without ArcticFlow is likely to have about 13% more drive failures over its life. This is just part of the reason we can offer a 5-year limited warranty on the entire box.
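The 13% figure is a weighted average, and a few lines of Python make the arithmetic explicit. This is only a sketch: it assumes the other 70% of drives fail at the baseline rate, which the testing summary above does not state. The `arrhenius_acceleration` function is the standard textbook form of the Arrhenius acceleration factor; its temperature and activation-energy arguments are placeholders for illustration, not values from our testing.

```python
import math

BOLTZMANN_EV_PER_K = 8.617333262e-5  # Boltzmann constant in eV/K


def arrhenius_acceleration(t_cool_c, t_hot_c, activation_energy_ev):
    """Failure-rate multiplier for running at t_hot_c instead of t_cool_c.

    Standard Arrhenius form: exp((Ea / k) * (1/T_cool - 1/T_hot)),
    with temperatures in kelvin. The activation energy is an input
    you must supply; no value is given in this post.
    """
    t_cool_k = t_cool_c + 273.15
    t_hot_k = t_hot_c + 273.15
    exponent = (activation_energy_ev / BOLTZMANN_EV_PER_K) * (
        1.0 / t_cool_k - 1.0 / t_hot_k
    )
    return math.exp(exponent)


def fleet_failure_increase(hot_fraction, hot_failure_multiplier):
    """Relative increase in failures across the whole enclosure.

    Assumes only hot_fraction of the drives see the higher failure
    rate and the rest fail at the baseline rate (an assumption).
    """
    return hot_fraction * (hot_failure_multiplier - 1.0)


# Figures quoted above: 30% of drives, 44% more likely to fail.
increase = fleet_failure_increase(0.30, 1.44)
print(f"{increase:.1%}")  # 13.2%
```

To experiment with the temperature side of the model, call `arrhenius_acceleration` with your own drive temperatures and an activation energy appropriate to your hardware. The multiplier it returns can be passed straight into `fleet_failure_increase`.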

“A cooler operating environment using just over half of the cooling power per slot”

We are able to maintain a cooler operating environment inside the enclosure while using just over half of the cooling power per slot required for a competitive system. This translates to energy savings that mount up. At California energy rates, a large data center with 20,000 drives could save as much as $300,000 over a typical five-year lifecycle.
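To put that headline number in per-drive terms, here is a rough back-of-the-envelope sketch. The $300,000, 20,000 drives and five years come from the paragraph above. The electricity rate passed to `implied_power_saved_watts` is a hypothetical placeholder, since the exact rate behind the estimate is not stated here, and the calculation ignores any facility overhead such as chillers.

```python
HOURS_PER_YEAR = 8760  # 24 hours * 365 days


def savings_per_drive_per_year(total_savings_usd, drive_count, years):
    """Spread a lifetime savings figure evenly over drives and years."""
    return total_savings_usd / drive_count / years


def implied_power_saved_watts(usd_per_drive_year, usd_per_kwh):
    """Continuous power saving per drive that would produce the given
    yearly dollar saving at the given electricity rate.

    usd_per_kwh is an assumed input, not a figure from this post.
    """
    kwh_per_year = usd_per_drive_year / usd_per_kwh
    return kwh_per_year * 1000.0 / HOURS_PER_YEAR


# Figures quoted above: $300,000 saved, 20,000 drives, five years.
per_drive = savings_per_drive_per_year(300_000, 20_000, 5)
print(per_drive)  # 3.0 (dollars per drive per year)

# Hypothetical rate of $0.15/kWh, chosen only for illustration.
watts = implied_power_saved_watts(per_drive, usd_per_kwh=0.15)
print(f"{watts:.2f} W per drive")
```

Swap in your own energy rate and drive count to see how the savings scale for your data center.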

A Silicon to Systems Design approach helps us even more because of the interaction of technologies. You’ll remember from part one that IsoVibe™ technology reduces vibration of the drives by up to 63%. The slower fan speeds enabled by ArcticFlow build on that by reducing fan vibration. It all works together to deliver more efficient and more reliable solutions. And we can even cut some of that data center noise.

Understand How Storage Enclosures Influence Performance, Reliability and Cost

Join me live, or stream my upcoming webinar, to learn more about today’s density challenges at scale, how storage enclosures influence performance, reliability and cost, and why holistic engineering, from Silicon to Systems, is important and drives innovation in the data center.

Watch or stream here: https://www.brighttalk.com/webcast/12587/358428