Why Cold Storage Is So Hot Right Now

Baseball is back. And with the return of a new season come hours of content: player statistics, team records, and video footage of every play in every game from multiple camera angles in stadiums across the globe.

In fact, MLB Network uploads up to 50 TB of new content per day, and holds even more in its archives. To deliver timely data during games, in daily highlights, and in future coverage, data management teams must decide whether to store data as hot, warm, or cold depending on how quickly and how often they need to access it.

Whether it’s sports statistics, healthcare, compliance, or historical data, more data is generated daily than can be analyzed, and the rate continues to grow.

Industry experts estimate that data is growing at approximately 30% annually and could reach as much as 175 ZB by 2025. Though not all data sets need to be analyzed right away, they may still be important to keep. That’s where cold storage comes in.
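To get a feel for what roughly 30% annual growth means, here is a minimal Python sketch of the compound-growth arithmetic. The function names and the 100 TB starting volume are illustrative assumptions, not figures from the experts cited above; only the 30% rate comes from the article.

```python
import math


def doubling_time_years(annual_growth_rate: float) -> float:
    """Years for a quantity to double at a constant compound annual growth rate."""
    return math.log(2) / math.log(1 + annual_growth_rate)


def project_volume(start_volume: float, annual_growth_rate: float, years: int) -> float:
    """Volume after `years` of compound growth, in the same unit as start_volume."""
    return start_volume * (1 + annual_growth_rate) ** years


if __name__ == "__main__":
    rate = 0.30  # approximate annual growth rate quoted in the article
    start_tb = 100.0  # hypothetical starting archive size, for illustration only
    print(f"Doubling time at {rate:.0%} growth: {doubling_time_years(rate):.1f} years")
    print(f"{start_tb:.0f} TB after 5 years: {project_volume(start_tb, rate, 5):.0f} TB")
```

At a steady 30% rate, stored data doubles roughly every 2.6 years, which is why the cost of keeping everything on primary storage adds up so quickly.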

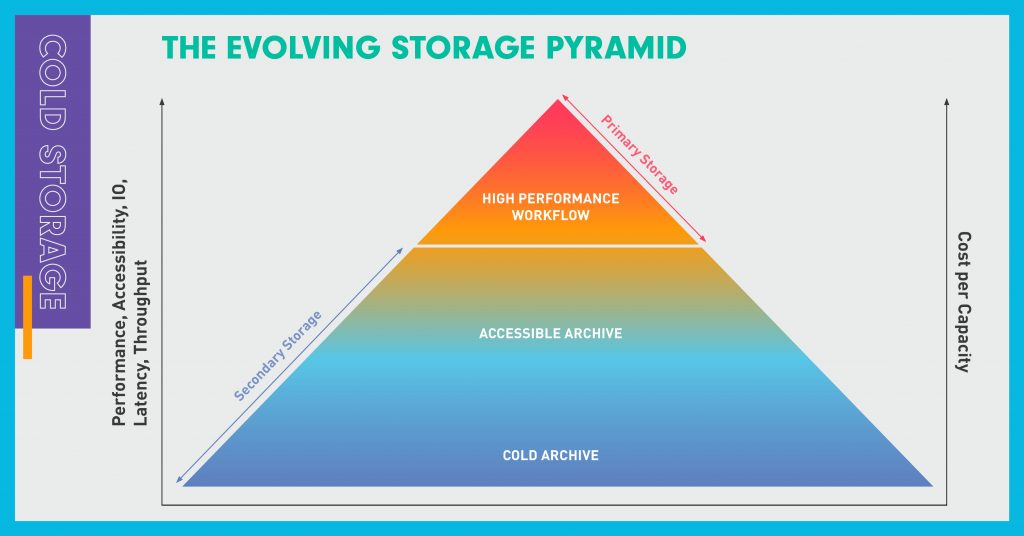

Cold storage retains any data that is not actively in use. This data can be kept in archival, or “cold,” storage tiers that cost less and are accessed infrequently, as opposed to live, “hot” production data, such as financial transactions, that needs to be accessed immediately.

According to industry analysts, 60% or more of stored data can be archived or stored in cooler storage tiers until it’s needed.

“The world is generating and storing more archival data than ever before. That’s why cold storage is the fastest growing segment in storage,” said Steffen Hellmold, vice president of Corporate Strategic Initiatives at Western Digital. “There’s a major disruption underway. As more and more bits are stored, cloud providers are reinventing their architectures with accessible archives to manage all that data.”

Why go cold?

As we enter the Zettabyte Age, the more data is stored, the more it costs. The largest pools of data are typically unstructured or semi-structured, such as video footage, genomics data, or data used to train machine learning and AI models. Cold, or secondary, storage is less expensive than hot, or primary, storage, so it makes sense to keep data that is not actively needed in pools of cooler storage at a lower cost.

“The biggest consideration is how frequently you need to access the data, or how readily available you want it to be when you do need it,” said Mark Pastor, director of Platform Product Management at Western Digital. Today’s cloud storage service SLAs are based on how often data needs to be accessed and how long a customer is willing to wait. For some cloud providers, data stored in a cooler tier might take 5 to 12 hours to access, whereas nearline data is stored in a warmer tier and is available immediately, but at a higher price.
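The two questions above, how often data is read and how long a customer can wait for it, can be sketched as a simple tier-selection rule. This is a minimal illustration, not any provider’s actual policy: the `AccessProfile` type, the `choose_tier` function, and the read-frequency thresholds are all hypothetical. Only the 12-hour figure is taken from the retrieval range mentioned above.

```python
from dataclasses import dataclass

# Upper end of the "5 to 12 hours" cold-tier retrieval range cited in the article.
COLD_RETRIEVAL_HOURS = 12.0
# Hypothetical thresholds, chosen only to make the example concrete.
HOT_READS_PER_MONTH = 30.0
WARM_READS_PER_MONTH = 1.0


@dataclass(frozen=True)
class AccessProfile:
    reads_per_month: float  # how often the data is expected to be read
    max_wait_hours: float   # how long the customer is willing to wait for it


def choose_tier(profile: AccessProfile) -> str:
    """Return 'hot', 'warm', or 'cold' for a given access profile."""
    # Frequently read data belongs on primary storage.
    if profile.reads_per_month >= HOT_READS_PER_MONTH:
        return "hot"
    # Data that is read occasionally, or that cannot tolerate a cold-tier
    # retrieval delay, goes to a warmer (nearline) tier.
    if (profile.reads_per_month >= WARM_READS_PER_MONTH
            or profile.max_wait_hours < COLD_RETRIEVAL_HOURS):
        return "warm"
    # Rarely read data whose owner can wait for retrieval can go cold.
    return "cold"


if __name__ == "__main__":
    print(choose_tier(AccessProfile(reads_per_month=100, max_wait_hours=0)))
    print(choose_tier(AccessProfile(reads_per_month=0.1, max_wait_hours=1)))
    print(choose_tier(AccessProfile(reads_per_month=0.1, max_wait_hours=24)))
```

A real policy would also weigh storage and retrieval pricing, which varies by provider and is deliberately left out here.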

“Aside from cost and accessibility, the third factor is a psychological one. It almost goes against human nature to delete anything in case you might need it sometime down the line,” said Kurt Chan, vice president of Platforms at Western Digital. “You never know what data is going to be valuable later on.”

Cold storage options to date

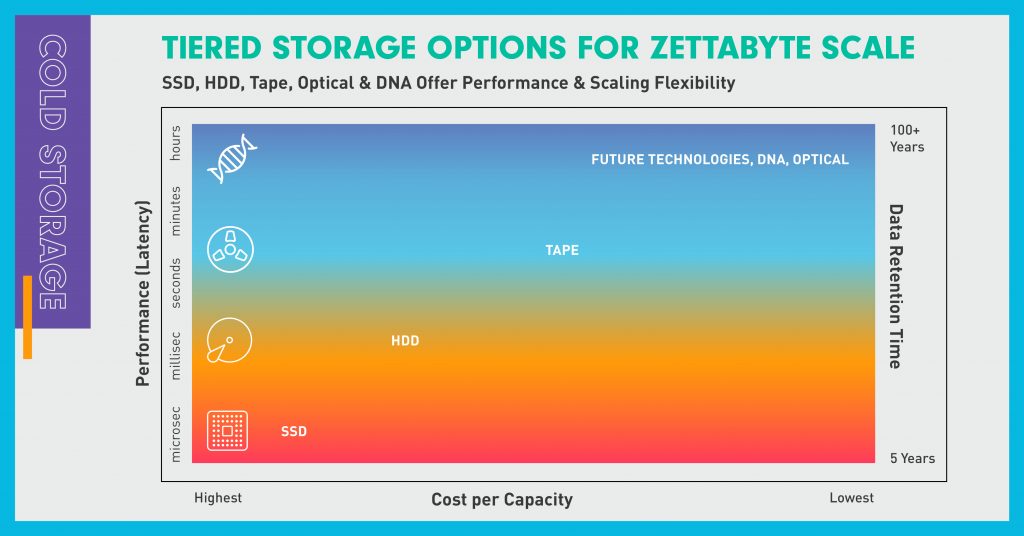

Until now, most secondary (cold) storage has been kept on either tape or hard disk drives (HDDs), with hot data moving to solid-state drives (SSDs). Western Digital supplies SSDs, HDDs, and tape heads, and sees secondary storage growing even faster than primary storage. According to Horison Information Strategies, at least 60% of all digital data today can be classified as archival, and that share could reach 80% or more by 2025, making it by far the largest and fastest growing storage class while presenting the next great storage challenge.

Tape is less expensive than HDDs but has much higher data access latency, which makes it a good option for cold data that is rarely accessed. If the value of data is related to the ability to access and mine it, there’s an order of magnitude difference between storing it on disk versus tape.

In other words, data accessibility increases data value.

HDDs are evolving to next-generation disk technologies and platforms that enable both better TCO and accessibility for active archive solutions. Advancements in HDD technology include new data placement technologies (i.e. zoning), higher areal densities, mechanical innovations, intelligent data storage, and new materials innovations.

Future cold storage technologies

Hyperscalers, who house the largest pools of data, are looking for the most cost-effective ways to store the ever-increasing amount of data. Thus, new tiers are emerging for cold storage with IT organizations reinventing their archival storage architectures.

With long-term data storage moving to the century-scale mark – data that needs to be stored for 100 years or more – new cold storage solutions are in development, including DNA, optical, and even undersea deep-freeze storage.

In November last year, Western Digital, together with Twist Bioscience, Illumina, and Microsoft as founding members, announced the DNA Data Storage Alliance to advance the field of DNA data storage. Due to its high density, DNA has the capacity to pack large amounts of information into a small space. DNA can also last for thousands of years, making it an attractive medium for archival storage.

As data generation continues to grow at incredible rates, cold storage will prove integral to preserving data affordably and for the long term. Storage innovators are creating long-term data storage solutions that make valuable data accessible both in the near term and for generations to come.