Think Big! Why Flash Isn’t (Just) About Performance

In part I of this blog series, I wrote about what sets consumer and enterprise flash devices apart from one another. But there are several misconceptions about flash that I would like to clear up.

One particular myth about enterprise flash is that it’s only good for performance-centric solutions. Flash has become a mainstay in solutions that need acceleration from higher performance storage devices. In some cases, flash is used as a cache to accelerate back-end storage composed of slower HDDs. In other cases, HDD storage pools have been replaced by flash altogether, or a flash tier has been added in front of the HDDs.

While the use of flash as a performance tool has become understood and accepted, some might overlook flash as an option because they can get the performance they need with HDDs. I frequently hear customers say, “I haven’t considered flash for this solution because the HDDs meet my performance needs.”

Flash can be used to provide so much more than performance. You might be missing out on a number of subtle benefits if you think only in terms of performance.

Consolidation is Your Friend

Stop simply comparing the cost per gigabyte of one device versus another. The “solution cost per gigabyte” is what’s important. No workload is just about performance or capacity. Remember you’re paying for every piece of hardware and all that’s needed for its connectivity, operation and management. Consolidation is your friend.

I’ve written about this before. Low-cost NAND devices with large capacity points can enable SSDs to help consolidate datacenter hardware. Shrinking the hardware footprint allows for removal of enclosures and other support infrastructure which changes overall solution cost per gigabyte to a larger extent than simple device-to-device comparisons will illustrate.
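To make the difference between device cost and solution cost concrete, here is a minimal Python sketch. Every number in it is made up for illustration (the prices, drive counts and enclosure counts are assumptions, not SanDisk figures or real quotes), and the `StorageBuild` class is a hypothetical helper. The HDD build is assumed to need many small drives to reach its performance target, which is what drives up the enclosure count.

```python
from dataclasses import dataclass


@dataclass
class StorageBuild:
    """Drives plus the enclosures needed to house and connect them."""
    drive_count: int
    drive_capacity_gb: int
    drive_cost: float        # price per drive (hypothetical)
    enclosure_count: int
    enclosure_cost: float    # price per enclosure incl. connectivity (hypothetical)

    def capacity_gb(self) -> int:
        return self.drive_count * self.drive_capacity_gb

    def device_cost_per_gb(self) -> float:
        # The simple device-to-device comparison.
        return self.drive_cost / self.drive_capacity_gb

    def solution_cost_per_gb(self) -> float:
        # Everything you pay for, divided by the capacity you get.
        total = (self.drive_count * self.drive_cost
                 + self.enclosure_count * self.enclosure_cost)
        return total / self.capacity_gb()


# Made-up inputs for illustration only; substitute your own quotes.
hdd_build = StorageBuild(drive_count=200, drive_capacity_gb=600,
                         drive_cost=300.0, enclosure_count=9,
                         enclosure_cost=8000.0)
ssd_build = StorageBuild(drive_count=32, drive_capacity_gb=3840,
                         drive_cost=3000.0, enclosure_count=2,
                         enclosure_cost=8000.0)

for name, build in (("HDD", hdd_build), ("SSD", ssd_build)):
    print(f"{name}: device ${build.device_cost_per_gb():.2f}/GB, "
          f"solution ${build.solution_cost_per_gb():.2f}/GB")
```

With these assumed inputs the SSD looks more expensive per gigabyte at the device level but cheaper at the solution level. A fuller model would also add power, cooling and management costs, which this sketch leaves out.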

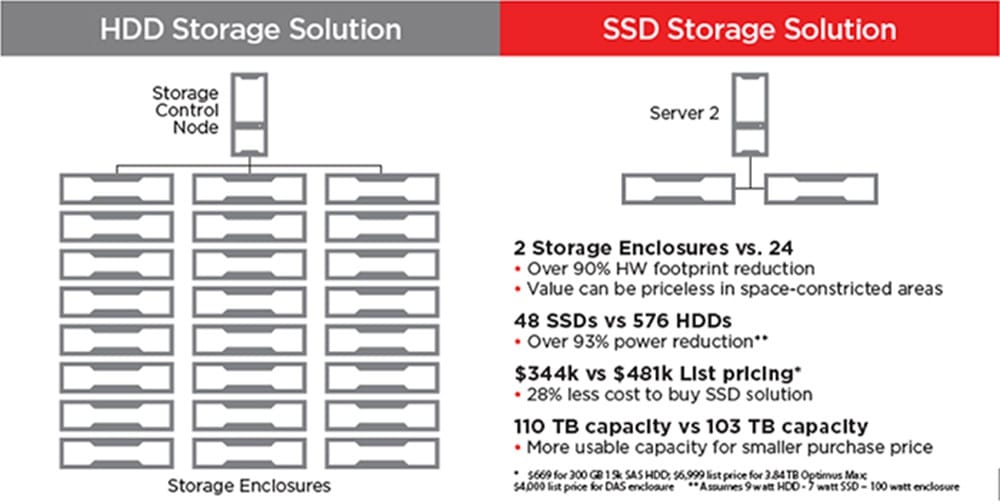

Your mileage with this type of consolidation will vary based on the ratio of large-block streaming to small-block random access in your data, as well as on read/write ratios. Enclosure connectivity can affect the amount of consolidation as well. In the example I’ve shown, a 100TB dataset accessed in small blocks could see:

- 90% hardware footprint reduction

- 93% less power consumption

- 28% solution cost reduction!

Rethink Your RAID Configuration

SSDs, depending on the device chosen, are anywhere from 10x to 10,000x less likely to exhibit an uncorrectable bit error than an enterprise HDD (you can read more about this in my previous blog). This can be advantageous not only for better protection of data, but also to allow configuration changes based on this protection margin.

To give you a real-world example, the vast majority of solutions that use RAID 6 do so for one reason: if a drive is lost in a RAID 5 array, an uncorrectable bit error could occur during the rebuild. With no second drive’s worth of protection, any uncorrectable bit error on a device still live in the array ultimately results in data loss.
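The rebuild risk described above can be put into rough numbers with a simple model. This is a sketch under stated assumptions, not a vendor formula: it treats bit errors as independent events at a constant rate, assumes the rebuild reads every bit on every surviving drive, and uses a baseline UBER of 1e-15 purely as an illustrative starting point. The function name and the 8-drive, 4TB array are hypothetical.

```python
import math


def p_error_during_rebuild(surviving_drives: int,
                           drive_capacity_tb: float,
                           uber: float) -> float:
    """Chance of at least one uncorrectable bit error while reading
    every bit of the surviving drives (independent-error model)."""
    bits_read = surviving_drives * drive_capacity_tb * 1e12 * 8
    # Equivalent to 1 - (1 - uber) ** bits_read, but numerically
    # stable when uber is tiny.
    return -math.expm1(bits_read * math.log1p(-uber))


BASELINE_UBER = 1e-15  # assumed baseline, for illustration only

# Hypothetical 8-drive RAID 5 array of 4TB drives with one drive lost.
for label, uber in (("baseline", BASELINE_UBER),
                    ("10x lower", BASELINE_UBER / 10),
                    ("10,000x lower", BASELINE_UBER / 10_000)):
    p = p_error_during_rebuild(7, 4.0, uber)
    print(f"{label:>14}: {p:.4%} chance of an error during rebuild")
```

The point of the sketch is the scaling: in this model, each 10x improvement in UBER cuts the rebuild risk by roughly 10x when the risk is small, which is the margin that makes a single-parity configuration worth reconsidering.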

Uncorrectable bit error rates, usually expressed as UBER, at the level of SanDisk® devices (as described in my previous blog) may allow you to step the RAID configuration down from RAID 6 to RAID 5. This change has several major advantages: it frees up considerable performance AND capacity for application usage. In other cases the user may decide they don’t need RAID at all and move to a frequent snapshot method for backing up data. Other customers may not change anything in their configuration when moving to these SanDisk devices but simply enjoy a higher level of protection from uncorrectable bit errors. Even those customers who don’t employ any type of RAID for data protection can take advantage of this feature, because the chances of an uncorrectable bit error forcing data to be pulled from a backup copy are substantially lower than with some other solutions.
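As a rough illustration of what moving from RAID 6 to RAID 5 frees up, the sketch below compares usable capacity and the commonly cited back-end I/O cost of a small random write. The 8-drive array is a hypothetical example, the function name is my own, and the write penalties (4 for RAID 5, 6 for RAID 6) are the textbook read-modify-write figures, not measurements of any particular controller.

```python
def usable_fraction(drives: int, parity_drives: int) -> float:
    """Share of raw capacity left for data after parity overhead."""
    if drives <= parity_drives:
        raise ValueError("array needs more drives than parity drives")
    return (drives - parity_drives) / drives


# Parity overhead per array: RAID 5 uses one drive's worth, RAID 6 two.
RAID5_PARITY, RAID6_PARITY = 1, 2

# Textbook back-end I/Os per small random write (read-modify-write).
RAID5_WRITE_IOS, RAID6_WRITE_IOS = 4, 6

drives = 8  # hypothetical array size
print(f"RAID 6 usable: {usable_fraction(drives, RAID6_PARITY):.1%}, "
      f"{RAID6_WRITE_IOS} back-end I/Os per small write")
print(f"RAID 5 usable: {usable_fraction(drives, RAID5_PARITY):.1%}, "
      f"{RAID5_WRITE_IOS} back-end I/Os per small write")
```

The capacity gain shrinks as arrays get wider, so the benefit is largest for the smaller arrays that fit inside a single server.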

Of course, we can’t discuss UBER without also mentioning the annual failure rate (AFR) of SSDs in general. SSDs have no moving parts, so mechanical failure has been removed from the equation when building a new storage solution. A quick search of annual failure rates of SSDs versus HDDs will immediately lead to a number of reputable sources that have shown SSDs to have a much lower AFR than HDDs.

Beyond SAN – New Infrastructures at Lower Costs

Software-defined storage in its current form usually involves the building of a storage enclosure using an x86 server. By following this method we have the server stand in for the enclosure, but it also happens to have memory, compute, and PCIe capabilities locally as well. Essentially, this is a rather philosophical movement out of the SAN space. We can finally ask ourselves: Why do we use a SAN? For the most part, the answer falls into one of three categories: we want to share files, the server spindle count won’t give the needed performance, or the server doesn’t hold the capacity point needed.

For file sharing, a SAN is of course one path to follow, but a number of software-defined storage targets allow file sharing by wrapping software around a server and creating a virtual SAN. For example, the SanDisk ION Accelerator™ platform used with Fusion ioMemory PCIe cards in a server can create a standalone or high-availability 2-node shared target solution. This is as much a statement about the capacity of the Fusion ioMemory PCIe flash cards as it is about their performance. Other industry solutions exist as well, each targeting a different set of needs, but all of them are designed to deliver on your specific needs at “lower than SAN” cost. For completeness, it’s fair to say that most current software-defined shared targets built from servers will also require the user to make some tradeoffs on existing SAN management functionality.

As for capacity points per server as a reason to use a SAN, we can now reach capacity points in a server that were unheard of just a few years ago. Five years ago, if a user needed 90TB of capacity per server, they were forced to use a SAN or NAS or attach a DAS enclosure to the server even if no file sharing was occurring. With the current 3.84TB enterprise SSD built by SanDisk, the user can now put 96TB inside the server and skip the cost of the SAN and additional hardware footprint of a DAS enclosure. Don’t buy SAN, NAS, or DAS enclosures you don’t need!
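The arithmetic behind those capacity figures is easy to check. The short sketch below assumes decimal units (1TB = 1,000GB) and works in whole gigabytes to avoid rounding noise. The helper name is hypothetical, and the 25-drive count is simply what 96TB divided by 3.84TB works out to, so confirm the bay count of your own server.

```python
def drives_needed(target_gb: int, drive_gb: int) -> int:
    """Smallest number of drives whose raw capacity meets the target."""
    return -(-target_gb // drive_gb)  # integer ceiling division


DRIVE_GB = 3840  # the 3.84TB SSD, in decimal gigabytes

# Drives required to reach the 90TB-per-server requirement.
print(drives_needed(90_000, DRIVE_GB), "drives cover 90TB")

# Raw capacity of 25 such drives, which matches the 96TB figure.
print(25 * DRIVE_GB // 1000, "TB raw from 25 drives")
```

These are raw capacities. Any RAID parity or spare drives would come out of that total.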

As for performance limitations due to a lack of spindle count inside a single server, we have a solution for this as well. Instead of going to an expensive SAN with a large hardware footprint, short-stroking drives, and/or spreading applications over large numbers of HDDs and using the remaining capacity for low-performance solutions, we propose a simpler, more cost-effective approach. Use an enterprise SSD, which outperforms an HDD in all measurable parameters, and simply remove the need to leave the server for your storage needs. A SAN can be used to get capacity and performance levels where you need them, but not always at the most efficient price point. Large-capacity enterprise SSDs can meet capacity, performance and cost needs in a simpler, smaller configuration.

Sure, flash delivers blazing speeds, but it also provides superior reliability and greater densities that deliver infrastructure that is easier to manage at a lower cost. Rethink what you can do with flash in your data center!

I’ll be talking about more flash myths in my next blog. Feel free to leave your comments and questions below or join me for this upcoming webinar:

[WEBINAR] The Bigger Picture: Flash Storage is More than Just Performance

June 22, 2016 at 8:00 am PT, or on demand afterward

In this webinar I will explain how flash can be used for more than just performance, and show examples of how flash can:

- Lower hardware footprint by 98%!

- Reduce RAID configurations while improving application performance and capacity

- Avoid overspending in storage (SAN, NAS, DAS) that’s not needed

Register here! I look forward to seeing you there.