IoT: Context Lives at the Edge

The rising popularity of edge and fog computing in recent years is enabling more informed decisions. A big reason is that analytics gives context to the deluge of content living on edge devices.

Just as the cloud gives a macro view of the world, the edge provides a micro view. To garner the micro view, raw content from the constant inflow of data from sensors and such has to be readily turned into information or context.

Technologies such as Artificial Intelligence (AI), Machine Learning (ML), image recognition and more, when pushed onto edge devices, interact with real-time data to give us this context.

With context, we gain quick, actionable insights into immediate environmental stimuli. Access to this kind of information ultimately creates a more efficient and effective environment.

Imagine using your smartphone to take a picture of a bottle of wine in a grocery store. In the past, your phone might provide a geolocation tag or a timestamp for that image, but that’s about it. But now, with more analytics on your phone and more processing power at the edge, you get more relevant information. Your phone might provide ratings from popular wine publications or suggested food pairings. You might even get price comparisons from nearby stores or where to purchase the ideal accompanying steak because your phone knows where you are. That’s the power of context gleaned from adding more processing to devices at the edge.

What if these real-time analytical tools were running on home surveillance cameras? With surveillance alone, when someone rings your doorbell, you could determine whether it’s someone you know – such as a gardener or a babysitter – before you open the door. But by adding facial recognition software, the technology could verify that a delivery truck driver is truly an employee of that company by tapping into an employee database. Conversely, the software could tell you that the person is unrecognized, which might prompt you to open the door with more caution, if at all.

You can’t gain these kinds of real-time, contextual insights if your data resides exclusively in the cloud. The mechanisms for saving, storing, and retrieving data from the cloud introduce too much lag. It simply takes too long to store and forward data whose value exists in the “now” timeframe. Real-time analytical tools need to work with real-time data, and the best place to do that is where the real-time data sits – on the edge.

Another example comes from the automotive industry. In connected cars and fully autonomous vehicles, proximity sensors are critical for identifying impending danger and play a key part in autonomous driving.

These vehicles’ proximity sensor data, however, may never be pushed to the cloud because the vehicle can’t afford the lag time. When your vehicle is too close to an object for comfort, I’m sure you would agree that the analysis needs to happen in real time, on real-time data, in the car itself for a real-time response. Once the journey is complete, however, the proximity data may no longer be necessary (since the environment has changed), and that data could even be flushed from memory once it’s past the stage of usefulness.
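
To make that pattern concrete, here is a minimal sketch in Python of analysis that stays on the device: each reading is evaluated locally as it arrives, only a short window of recent readings is kept, and the buffer is flushed when the journey ends. The `ProximityMonitor` class, the 2-meter threshold, and the window size are hypothetical names and values chosen for illustration; they do not describe any real vehicle system.

```python
from collections import deque

# Hypothetical values chosen for illustration only.
BRAKE_THRESHOLD_M = 2.0   # assumed distance below which the car should react
WINDOW_SIZE = 5           # assumed number of recent readings kept in memory


class ProximityMonitor:
    """Keeps a short window of readings on the device and decides locally."""

    def __init__(self, threshold_m=BRAKE_THRESHOLD_M, window=WINDOW_SIZE):
        self.threshold_m = threshold_m
        # A bounded deque drops the oldest reading automatically when full.
        self.readings = deque(maxlen=window)

    def on_reading(self, distance_m):
        """Store the reading and return the action to take right now."""
        self.readings.append(distance_m)
        return "brake" if distance_m < self.threshold_m else "continue"

    def end_journey(self):
        """Flush the buffer once the data is past its usefulness."""
        self.readings.clear()


monitor = ProximityMonitor()
actions = [monitor.on_reading(d) for d in (12.0, 6.5, 1.4)]
print(actions)
monitor.end_journey()
```

Nothing in this sketch leaves the device: the decision is made in the same call that receives the reading, which is the point of keeping real-time analysis next to real-time data.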

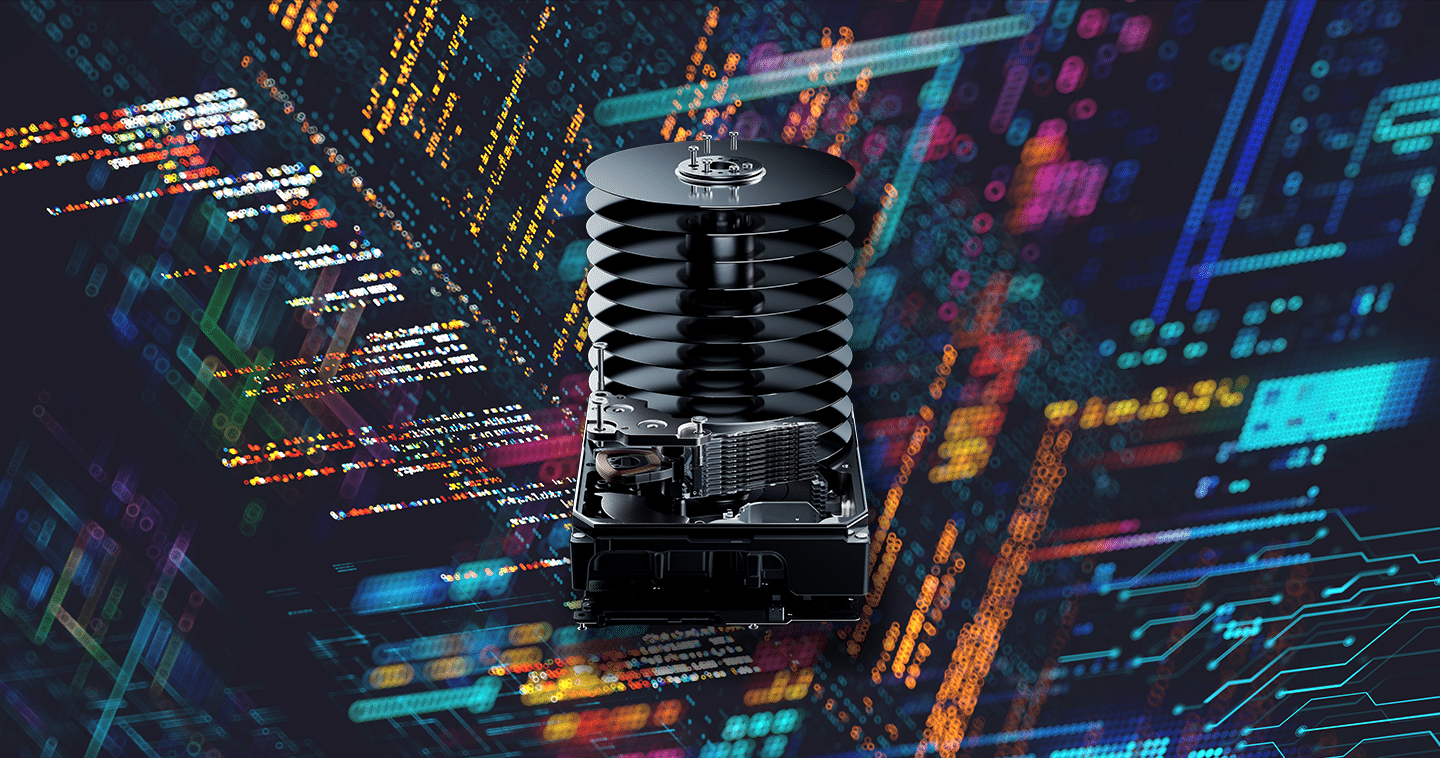

To truly take advantage of actionable insights and real-time response on edge devices, both reliable, resilient storage and powerful analytical tools will need to reside at the edge. This will help turn content into context and passive decisions into informed decisions where timeliness is critical (particularly if your autonomous car is navigating through traffic for the steak that goes best with that bottle of wine!).

Chris Bergey, VP of Embedded Solutions at Western Digital, speaks more about putting content into context at Compuforum 2017.