A New Approach: An All-Flash Storage Solution for SAP HANA

SAP HANA is an example of in-memory computing, in which the current working dataset is held in main system memory. With a system like this, it is easy to mistakenly believe that backend storage does nothing to make a better in-memory database (IMDB). Yet storage is the cornerstone of persistence in virtually every database, including those that run in memory.

Data held only in main memory is not persistent; it is lost if the server fails or loses power. Durability is one of the core requirements for an enterprise database, so some form of protection must be put in place for this configuration. This is where the new cost and capacity points of enterprise flash make real change possible, while leaving the IMDB instance itself completely intact and unchanged.


The First Method – SSDs as a Logging Tier

The primary way to provide this durability is a log kept on persistent storage media, combined with saved transaction pages, which together allow the system to recover after a failure. Each committed transaction generates a log entry that is written to non-volatile storage, which ensures that every committed transaction is permanent.
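To make the commit path concrete, here is a minimal Python sketch of a write-ahead log, assuming a simple file-based log: a commit returns only after its record has been flushed and `os.fsync`'d to stable storage. The `TransactionLog` class, the `replay` helper, and the JSON record format are all hypothetical and for illustration only; this is not how SAP HANA implements its log volumes.

```python
import json
import os
import tempfile


class TransactionLog:
    """Hypothetical append-only log: commit() returns only after the record is durable."""

    def __init__(self, path: str) -> None:
        # Append mode, so existing records are never overwritten.
        self._file = open(path, "a", encoding="utf-8")

    def commit(self, txn_id: int, changes: dict) -> None:
        record = json.dumps({"txn": txn_id, "changes": changes})
        self._file.write(record + "\n")
        # Push Python's buffer to the OS, then ask the OS to write it to the device.
        self._file.flush()
        os.fsync(self._file.fileno())
        # Only at this point is it safe to acknowledge the commit to the client.

    def close(self) -> None:
        self._file.close()


def replay(path: str) -> list:
    """Read back every committed record, as recovery would after a failure."""
    with open(path, encoding="utf-8") as f:
        return [json.loads(line) for line in f if line.strip()]


if __name__ == "__main__":
    log_path = os.path.join(tempfile.mkdtemp(), "txn.log")
    log = TransactionLog(log_path)
    log.commit(1, {"balance": 100})
    log.commit(2, {"balance": 75})
    log.close()
    print(replay(log_path))
```

In this sketch the fsync sits directly on the commit path, so the write latency of the log device bounds how quickly each transaction can be acknowledged. That is why the log's storage medium matters even for an in-memory database.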

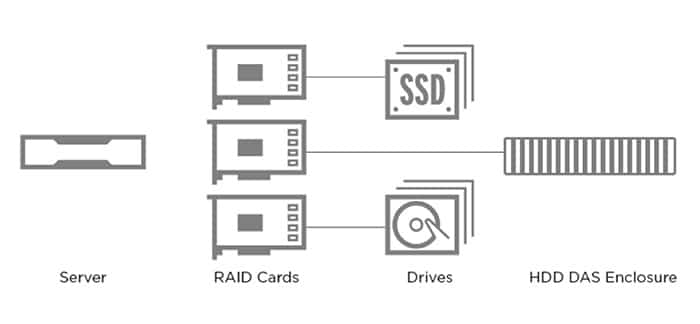

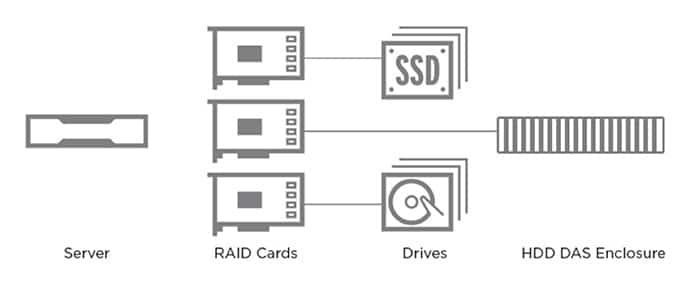

For this discussion, I will refer specifically to the Lenovo DAS-based SAP HANA solution, a real-world example of a solution that can be built today around a 6 TB IMDB.

To obtain the needed performance from persistent storage, transaction logs can be placed on enterprise SSDs, while saved transaction pages are placed on 10K RPM HDDs. Each tier is protected by RAID or another data-protection method. The enterprise SSDs used for logging require a dedicated RAID controller, and the HDD-based persistent storage requires another.

If the in-memory database is small, the server has enough drive bays to hold all of the HDDs needed for the saved transaction pages. If the database is larger, however, the server that houses it may not have enough drive bays for all of those HDDs. In that case, an external drive enclosure, known as direct-attached storage (DAS), must be attached to the server running the in-memory database.

Attaching an external DAS enclosure requires yet another RAID controller, because the RAID controllers inside the server have internal connectors only, while the DAS enclosure needs an external connection. Large configurations that require external DAS therefore need a minimum of three (3) RAID controllers: one for the SSD logging tier, one for the internal HDDs, and one for each DAS enclosure. Both internal and external SAS cables are also required to connect these controllers to the SSDs, the HDDs, and the external enclosures.
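The controller arithmetic described above can be sketched in a few lines of Python. Everything here is an illustrative assumption: the function name, the drive and bay counts in the example, and the simple rule of one controller for the SSD tier, one for the internal HDDs, and one per DAS enclosure are placeholders, not figures from the Lenovo solution.

```python
import math


def raid_controllers_needed(hdd_count: int, internal_hdd_bays: int,
                            bays_per_enclosure: int) -> tuple[int, int]:
    """Return (das_enclosures, raid_controllers) for the SSD-log + HDD-pages design.

    Hypothetical model: one controller for the SSD logging tier, one for the
    internal HDDs, plus one per external DAS enclosure.
    """
    # HDDs that do not fit in the server's own bays must go into DAS enclosures.
    overflow = max(0, hdd_count - internal_hdd_bays)
    enclosures = math.ceil(overflow / bays_per_enclosure)
    controllers = 1 + 1 + enclosures
    return enclosures, controllers


if __name__ == "__main__":
    # Illustrative numbers only.
    print(raid_controllers_needed(hdd_count=8, internal_hdd_bays=8, bays_per_enclosure=24))   # (0, 2)
    print(raid_controllers_needed(hdd_count=20, internal_hdd_bays=8, bays_per_enclosure=24))  # (1, 3)
```

As soon as the HDD count overflows the internal bays, the model jumps from two controllers to the minimum of three described above, and every additional enclosure adds one more.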

Another Storage Method – SSDs as the Caching Tier

Another configuration stores both the log and the saved transaction pages on the same 10K RPM HDDs and uses a persistent, enterprise SSD-based cache to accelerate that tier. This behaves much like the first method: every log entry is written to both the cache and the backend storage, but the system can move forward as soon as the persistent cache has committed the entry.
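The caching behaviour can be modeled as follows, assuming a write-back style persistent cache: a log entry is acknowledged once the SSD cache holds it, and a later flush copies it to the HDD tier. The class and method names are hypothetical, and plain Python lists stand in for the SSD cache and the HDDs; in the real configuration this work is done by the RAID caching feature, not by application code.

```python
from collections import deque


class PersistentCacheTier:
    """Hypothetical model of a persistent SSD cache in front of HDD storage."""

    def __init__(self) -> None:
        self.ssd_cache: list[str] = []       # stands in for the persistent SSD cache
        self.hdd_backend: list[str] = []     # stands in for the 10K RPM HDDs
        self._pending: deque[str] = deque()  # entries not yet copied to the HDDs

    def write_log_entry(self, entry: str) -> bool:
        # Once the entry is on the persistent cache it is durable, so the
        # caller can be acknowledged before the slower HDD write happens.
        self.ssd_cache.append(entry)
        self._pending.append(entry)
        return True  # acknowledgment to the database

    def flush(self) -> int:
        """Copy pending entries to the HDD tier in arrival order; return how many moved."""
        moved = 0
        while self._pending:
            self.hdd_backend.append(self._pending.popleft())
            moved += 1
        return moved


if __name__ == "__main__":
    tier = PersistentCacheTier()
    tier.write_log_entry("txn-1 commit")
    print(tier.hdd_backend)  # [] (acknowledged, but not yet on the HDDs)
    tier.flush()
    print(tier.hdd_backend)  # ['txn-1 commit']
```

The model also shows the drawback discussed below: every entry ends up stored twice, and the SSD copy adds no usable capacity of its own.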

The hardware for this configuration is essentially the same as in the first method, with the addition of a RAID caching feature. The difference lies in the configuration: because the SSDs act as a cache, their capacity does not add to the overall usable storage capacity.

Complexity Is Your Problem

Both methods described above have limitations, and moving to an all-flash solution can virtually eliminate them, as I'll explain in just a moment.

The caching solution does not let the SSD capacity count as usable capacity. As a result, more HDDs must be purchased, and a caching layer adds complexity. Using both HDDs and SSDs also increases the number of attach points, which in turn increases the number of RAID controllers needed.

Using SSDs as a logging tier in front of an HDD tier for saved transaction pages also creates unneeded complexity. This design no longer requires caching software, but the faster, more expensive logging tier must be large enough to hold the entire log, because the log lives in its own standalone tier rather than in one larger, shared pool of storage.

A Better Way

By using an all-flash solution, we can dramatically simplify the SAP HANA storage components and accomplish the same thing. Here are two diagrams comparing the same Lenovo DAS-based SAP HANA solution with a 6 TB IMDB:

As you can see, the current design needs a lot of parts, with many different components and attach points that must be managed and protected against failure.

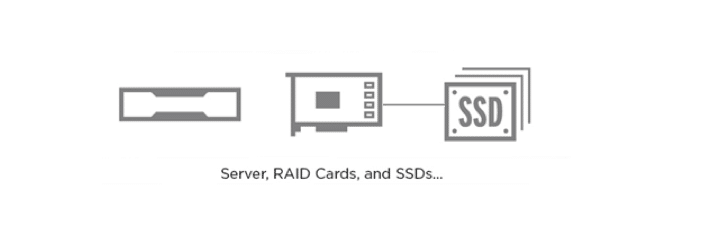

With a single tier of high-capacity SSDs, we can eliminate the need for multiple RAID controllers and for an external DAS enclosure:

From an SAP HANA perspective, this looks logically identical to the original configuration. SAP HANA has enough persistent storage for both logging and saved transaction pages, with more than enough performance that save, load, and logging times are not degraded; in fact, they are even better than before.

Less Hardware, Less Failure, Less Power = More Database

If nothing changes logically, the obvious question is: why bother? The answer is simple. Simplifying the hardware design as described above does much more than reduce the solution's parts count.

In this case, the physical footprint of the solution shrinks by 20%, which increases the database capacity a user can place in each rack. The parts removed from the solution are active components that consume power and also act as potential points of failure. The power savings are substantial: the all-flash solution described here reduces the power consumed by the storage subsystem by 82%.

The storage device count drops from 36 to 11, and other potential points of failure, such as the external DAS enclosure and its cables, are removed entirely. Combined with a denser SAP HANA footprint, the result is a solution that is logically identical to what you run today, with fewer points of failure. That is simply better for the end user.
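As a quick check on the device-count figure above, the relative reduction can be computed directly. The 36 and 11 device counts come from the text; the `percent_reduction` helper is only an illustration.

```python
def percent_reduction(before: float, after: float) -> float:
    """Relative reduction from `before` to `after`, as a percentage."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before * 100


if __name__ == "__main__":
    # Storage device count from the article: 36 before, 11 after.
    print(f"{percent_reduction(36, 11):.1f}%")  # 69.4%
```

In other words, roughly seven out of ten storage devices, each a potential point of failure, are removed from the design.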

Newer high-capacity enterprise SSDs, offering moderate performance at a reasonable cost, let us substantially change how an SAP HANA configuration is physically built while keeping it logically the same, simplifying and consolidating the design. Instead of a high-performance logging tier and a slower tier for saved transaction pages, we use a single tier of moderate-performance flash media and achieve better results for less!

Performance, Economics, and Reliability

I recently joined our partners at Lenovo for a webinar about a new high-density SAP HANA solution and how moving to all-flash storage enables significant cost savings and efficiency gains while removing complexity.

You can stream the webinar here to learn how embracing an all-flash solution provides superior durability, better data protection, efficient use of existing capacity, and performance gains that will transform your business-critical applications.

We also recently published a white paper on this solution, which you can download here.

I welcome your questions in the comments below and look forward to hearing from you!