In the last few years we’ve seen a rapid rise in the quality of images produced by smartphones, which has occurred in tandem with a growing consumer desire to capture, store and share their lives in photos and video clips. Mobile devices still lag behind more advanced traditional cameras because of the physical limitations of size and weight: in the end, mobile devices need to be small and portable. These limits mean the sensors cannot be very large and the Z-height (the combined thickness of the sensor and lens stack) must stay small.

The Issue With Small

The biggest losers in this small space war are low light performance and lens quality. With nowhere to put a zoom lens, or even a traditional adjustable aperture, manufacturers are severely limited in how much control they can give users over framing and depth of field. Equally, small sensors mean small pixels, and a smaller pixel collects less light, so nighttime images suffer from image noise. Rising resolution compounds the problem: although the physical sensor size has stayed roughly the same, resolutions have kept climbing, making those already small pixels smaller still.
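To put rough numbers on the pixel-size point, the short Python sketch below computes pixel pitch, and the relative light each pixel collects, when resolution rises on a fixed sensor area. The 4.8 mm sensor width and the 8 MP and 12 MP resolutions are hypothetical figures chosen for illustration, and the model assumes light per pixel simply scales with pixel area, ignoring fill factor, microlenses and sensor technology.

```python
# Hypothetical sensor width, chosen only to illustrate the arithmetic.
SENSOR_WIDTH_MM = 4.8  # assumed active-area width of a small 4:3 phone sensor


def pixel_pitch_um(width_px: int) -> float:
    """Pixel pitch in micrometres, assuming square pixels spanning the full width."""
    return SENSOR_WIDTH_MM * 1000.0 / width_px


def relative_light_per_pixel(old_width_px: int, new_width_px: int) -> float:
    """Ratio of light per pixel after vs. before, assuming it scales with pixel area."""
    return (pixel_pitch_um(new_width_px) / pixel_pitch_um(old_width_px)) ** 2


if __name__ == "__main__":
    # 8 MP (3264 x 2448) versus 12 MP (4000 x 3000) on the same sensor area.
    for width in (3264, 4000):
        print(f"{width} px wide -> {pixel_pitch_um(width):.2f} um pitch")
    print(f"light per pixel: {relative_light_per_pixel(3264, 4000):.2f}x")
```

Under these assumptions, moving from 8 MP to 12 MP shrinks the pitch from about 1.47 µm to 1.20 µm, and each pixel collects only about two thirds of the light it did before.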

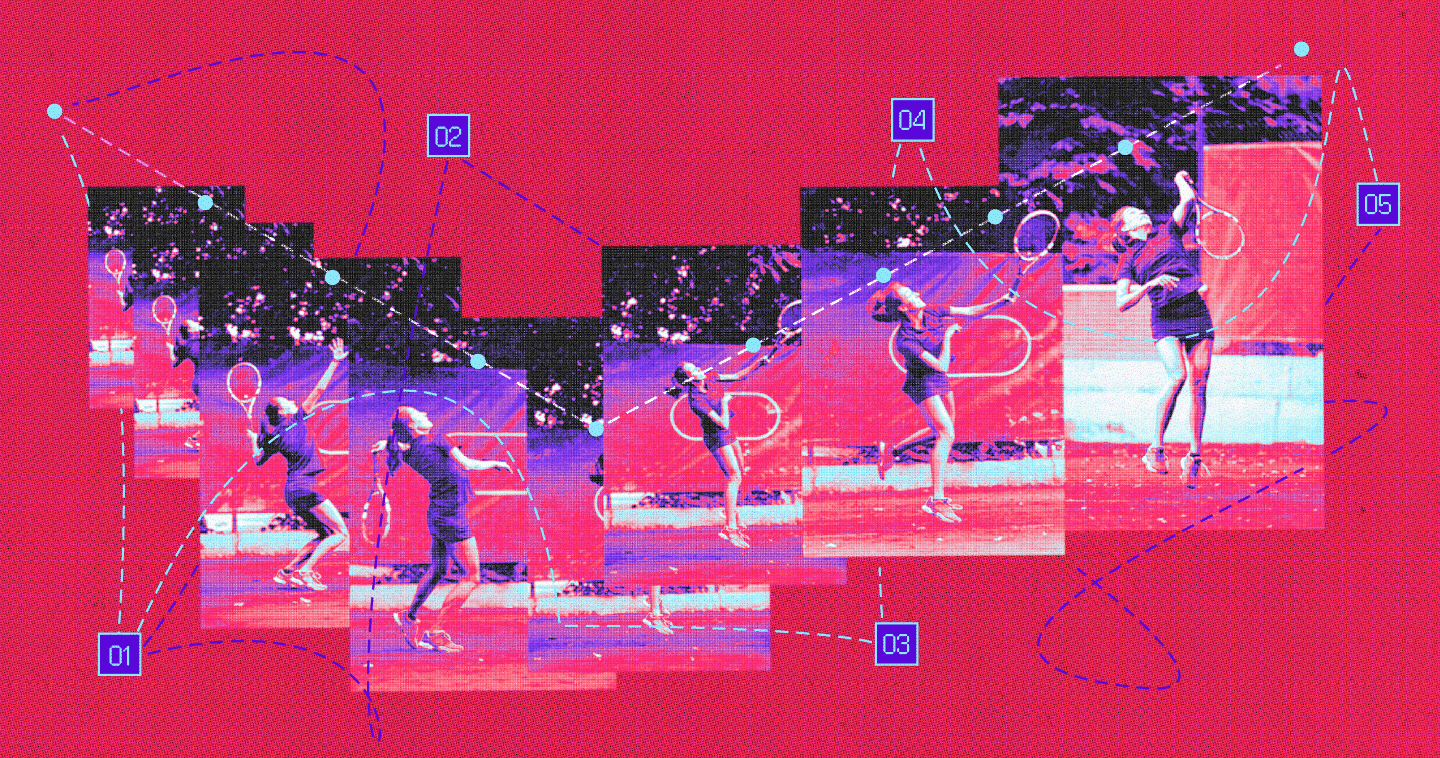

With no way to overcome these problems using traditional imaging technology, smartphone manufacturers are now investing heavily in computational photography. In simple terms, computational photography combines multiple images using advanced software algorithms to improve image quality and overcome the limitations of a smaller sensor.

Computational Photography Origins

The concept of computational photography is credited to a 1936 paper by Andrey Gershun, which introduced the idea of the light field. Research into light field imaging has continued ever since, and the Lytro camera is a consumer example of a light field camera. These cameras capture not just the intensity of light but also the direction in which it travels, which encodes spatial information about objects within the scene. The light field can be recorded with an array of cameras or, as in the Lytro, with an array of tiny lenses placed over a single sensor; either way, the relative position of objects in the scene can be mapped accurately. In this way the image can be manipulated after capture to, for example, adjust zoom or focus.
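The refocusing idea can be illustrated with a standard shift-and-add approach: each view of the scene is shifted in proportion to its viewpoint offset and the views are averaged, so objects whose parallax matches the chosen shift line up sharply while everything else blurs. The sketch below is a deliberately simplified 1-D toy with whole-pixel shifts; the functions `shift`, `refocus` and `make_view`, the three viewpoint offsets and the parallax values are all hypothetical and do not describe how Lytro or any particular camera processes its data.

```python
from typing import List


def shift(row: List[float], dx: int) -> List[float]:
    """Shift a 1-D row of pixels by dx (positive = right), padding with zeros."""
    n = len(row)
    out = [0.0] * n
    for i in range(n):
        j = i - dx
        if 0 <= j < n:
            out[i] = row[j]
    return out


def refocus(views: List[List[float]], offsets: List[int], alpha: int) -> List[float]:
    """Shift-and-add refocusing: undo a parallax of alpha px per unit offset, then average."""
    shifted = [shift(view, -alpha * u) for view, u in zip(views, offsets)]
    return [sum(column) / len(shifted) for column in zip(*shifted)]


def make_view(u: int, width: int = 32) -> List[float]:
    """Hypothetical view from viewpoint offset u containing two point-like objects."""
    row = [0.0] * width
    row[10 + 2 * u] = 1.0  # near object: 2 px of parallax per unit offset
    row[20] = 1.0          # distant object: negligible parallax
    return row


if __name__ == "__main__":
    offsets = [-1, 0, 1]
    views = [make_view(u) for u in offsets]
    near = refocus(views, offsets, alpha=2)  # focus on the near object
    far = refocus(views, offsets, alpha=0)   # focus on the distant object
    print(f"near focus: near={near[10]:.2f} far={near[20]:.2f}")  # near=1.00 far=0.33
    print(f"far focus:  near={far[10]:.2f} far={far[20]:.2f}")    # near=0.33 far=1.00
```

Choosing `alpha` plays the role of choosing a focal plane: the in-focus object keeps its full brightness in one pixel, while the other is smeared across three.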

Capturing all of this data is not without its challenges. To produce images with enough resolution to satisfy consumers, capturing multiple frames places heavy demands on storage, both in the volume of data to store and in the speed at which it must be written. If computational photography images cannot be written quickly enough, devices will slow down and consumers will have a bad experience, slowing adoption of the technology. SanDisk, drawing on their extensive imaging experience, have two solutions. iNAND 7232 is embedded memory that provides fast sequential write speeds to keep devices responsive, while Extreme Pro microSD cards provide additional high-capacity storage in a small, removable form factor with data transfer speeds of up to 95MB/s.
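The scale of the storage problem is easy to estimate. The sketch below computes the uncompressed size of a burst of raw frames and how long it would take to write at a given sustained speed. The 12 MP resolution, 10-bit raw depth, 8-frame burst and the 30 and 90 MB/s write speeds are hypothetical figures, not specifications of any SanDisk product, and real camera pipelines usually compress or process frames before storing them.

```python
# Every figure below is hypothetical and chosen only to illustrate the arithmetic.


def burst_size_mb(megapixels: float, bits_per_pixel: int, frames: int) -> float:
    """Uncompressed size of a burst of raw frames, in megabytes (1 MB = 10**6 bytes)."""
    bytes_per_frame = megapixels * 1e6 * bits_per_pixel / 8
    return bytes_per_frame * frames / 1e6


def write_time_s(size_mb: float, write_speed_mb_s: float) -> float:
    """Seconds needed to store size_mb at a sustained write speed in MB/s."""
    return size_mb / write_speed_mb_s


if __name__ == "__main__":
    size = burst_size_mb(megapixels=12, bits_per_pixel=10, frames=8)
    print(f"burst size: {size:.0f} MB")  # 120 MB
    for speed in (30.0, 90.0):  # hypothetical sustained write speeds
        print(f"at {speed:.0f} MB/s: {write_time_s(size, speed):.1f} s")
```

Under these assumptions a single 8-frame burst is about 120 MB, which takes roughly 4 seconds to store at 30 MB/s but about 1.3 seconds at 90 MB/s, so sustained write speed directly affects how soon the camera is ready for the next shot.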

New Ways to View the World

The ultimate benefits, though, are richer images, and new types of images, in a wider variety of devices. With several image sensors working together, a scene can be captured multiple times at different exposures, and the resulting images can then be merged into one final image with better tonal range or lower image noise.
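Both benefits can be sketched in a few lines of Python. `merge_exposures` is a simplified version of the weighted merging used in high dynamic range (HDR) imaging: each exposure gives a radiance estimate (pixel value divided by exposure time), and estimates from well-exposed mid-tones are trusted more than near-black or clipped ones. `average_frames` shows why stacking images lowers noise. All names, exposure times and noise levels are hypothetical, and the sketch assumes a linear sensor and perfectly aligned frames, whereas real phone pipelines must also align frames, handle motion and tone-map the result for display.

```python
import random
from typing import List, Sequence


def hat_weight(v: float) -> float:
    """Trust mid-tones most; near-black and clipped values get little or no weight."""
    return max(0.0, 1.0 - abs(2.0 * v - 1.0))


def merge_exposures(values: Sequence[float], times: Sequence[float]) -> float:
    """Estimate relative scene radiance for one pixel from several exposures.

    Assumes a linear sensor response, values normalised to [0, 1] and aligned frames.
    """
    den = sum(hat_weight(v) for v in values)
    if den == 0.0:
        # Every sample is pure black or fully clipped: fall back to the shortest exposure.
        i = min(range(len(times)), key=lambda k: times[k])
        return values[i] / times[i]
    num = sum(hat_weight(v) * v / t for v, t in zip(values, times))
    return num / den


def average_frames(frames: List[List[float]]) -> List[float]:
    """Average aligned frames pixel by pixel; random noise falls roughly as 1/sqrt(N)."""
    return [sum(px) / len(frames) for px in zip(*frames)]


def std(xs: Sequence[float]) -> float:
    """Population standard deviation, used here to measure noise."""
    m = sum(xs) / len(xs)
    return (sum((x - m) ** 2 for x in xs) / len(xs)) ** 0.5


if __name__ == "__main__":
    # Tonal range: a pixel with relative radiance 2.0 clips in the longest exposure.
    times = [0.1, 0.4, 1.0]
    values = [min(1.0, 2.0 * t) for t in times]  # [0.2, 0.8, 1.0]
    print(f"merged radiance: {merge_exposures(values, times):.2f}")  # 2.00

    # Noise: 16 simulated frames of a flat grey patch with Gaussian noise (sigma 0.1).
    rng = random.Random(0)
    frames = [[0.5 + rng.gauss(0.0, 0.1) for _ in range(2000)] for _ in range(16)]
    print(f"noise, 1 frame:   {std(frames[0]):.3f}")
    print(f"noise, 16 frames: {std(average_frames(frames)):.3f}")  # about a quarter
```

The clipped sample in the longest exposure gets zero weight, so the merge still recovers the correct radiance, while averaging 16 frames cuts the simulated noise to roughly a quarter of a single frame's.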

Beyond simply improving image quality or allowing the focus or depth of field to be adjusted post-capture, computational photography can also be used to add new features to cameras. Google’s Project Tango is a good example, bringing 3D mapping capabilities to a mobile device form factor.