On Building AI Storage Systems

It’s not uncommon to hear people describe AI as the next era of computing, as the most significant change since the mainframe, and even as the beginning of an industrial revolution. How would you describe it?

I wouldn’t disagree with any of those statements. AI is a transformative technology and it’s poised to redefine multiple aspects of industries, economy, and society. So in that context, it’s a revolution.

The field is moving very quickly. We have not yet reached Artificial General Intelligence (AGI), and the big debate is how close we are and how long it will take. The other question is how far we go before effective AI ethics and regulatory checks and balances come in, so that society can govern what really is a frontier technology, one with a global-scale race to the top.

Why now? What made AI suddenly possible?

AI has been around since the 1950s, on the fringes if you like, but progress has been uneven, held back at various times by lack of interest, funding stagnation, overhyped expectations, and limited compute.

What has driven the public’s recent awareness of AI, through the availability of LLMs such as ChatGPT, is the advancement in GPU technology and its availability via cloud computing. Open source frameworks, the availability of training data (enabled by advances in data storage technology), and massive investment are fueling AI, and its potential is being realized further.

How much is AI reshaping the fundamental design of data centers?

Significantly. On the whole, there’s a rapid rise in demand for specialized infrastructure—GPUs, TPUs, ASICs, and so on. We need that compute capability to accelerate model learning. But that learning has to be supported by data, which can be vast, and therefore requires high-capacity, high-performance storage. That means server architectures are advancing rapidly and, of course, the network—moving data at high throughput and low latency is critical.

In response to these expansive demands, data centers need to improve their power and cooling efficiency, which is why liquid cooling is becoming more prominent; recent trade shows are evidence of this. The sheer heat generated by the hardware running these models means that, in many instances, we have no choice but to move to liquid cooling, particularly at the larger LLM scales.

How are companies architecting for AI workloads? What is the entry point?

If we look at it holistically, the entry point could be just one GPU, one server, and some drives. The next thing you’re looking at is the learning model and what type of GPU, TPU, ASIC, or FPGA it is designed to work best with. That’s assuming your company is only looking at one model, which isn’t necessarily realistic.

The size of the training data is another crucial factor—how much data are we going to store? Is it proprietary, public data, or both? What’s the quality of that data? It must be cleaned, normalized, and stripped of biases and inaccuracies. The number of GPUs you assign to the learning task will, in part, define how long that training takes. But it’s also influenced by the complexity of the training and the model framework itself.

There is also much more to consider than the initial training cycle. Thereafter, you have inference, model tuning, and the need to confirm how that model is working over time: is it starting to degrade or hallucinate? And then there’s the scale. How much do we scale that model? And, of course, there is software, from the infrastructure firmware and drivers to the model frameworks and supporting tools that fall under ML Operations.

Building an AI cluster is a significant investment and Total Cost of Ownership (TCO) is closely tied to GPU utilization. Can you share insights from your work in this area?

When you think about how capable GPUs are at ingesting data, if we’re not supplying a GPU with data at its throughput potential, then we’re not getting the return on investment that could be realized. So we are duty-bound to drive those GPUs as hard as possible.

To ensure the GPUs aren’t starved of data at any point, we need to understand the internal design of the server: the PCIe bus architecture, how many PCIe lanes it has, how they are switched, whether there is oversubscription, and whether data paths traverse the CPU complex. Does the server allow for complementary technologies such as NVIDIA GPUDirect?
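To make the oversubscription question concrete, here is a minimal Python sketch that compares the lanes attached below a PCIe switch with the lanes on its uplink. The class name and the lane counts are hypothetical, chosen only for illustration, and the sketch assumes every link runs at the same PCIe generation.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PcieSwitch:
    """A hypothetical PCIe switch: one uplink, several downstream devices."""
    upstream_lanes: int          # lanes from the switch toward the CPU or GPU
    downstream_lanes: List[int]  # lanes allocated to each attached device

    def oversubscription_ratio(self) -> float:
        """Total downstream lanes divided by upstream lanes."""
        return sum(self.downstream_lanes) / self.upstream_lanes

    def is_oversubscribed(self) -> bool:
        """True when attached devices could offer more traffic than the uplink carries."""
        return self.oversubscription_ratio() > 1.0


# Assumed example: eight x4 NVMe drives sharing a single x16 uplink.
switch = PcieSwitch(upstream_lanes=16, downstream_lanes=[4] * 8)
print(switch.oversubscription_ratio())  # 2.0
print(switch.is_oversubscribed())       # True
```

A ratio above 1.0 is not automatically a problem, but it does mean the drives behind that switch cannot all stream at full rate at the same time.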

Drive technology, and how we make those drives available, plays an important role here.

Essentially, we need NVMe drives with their high performance to complement the GPU’s capabilities. A certain ratio of drives to GPUs is required to ensure adequate throughput. The aim here is to get as close as possible to the theoretical throughput of the PCIe bus that the GPU supports.
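As a rough illustration of that ratio, the Python sketch below estimates the theoretical throughput of a GPU’s PCIe link and the number of drives needed to match it. The per-lane rates and 128b/130b encoding are the published PCIe figures for Gen3 to Gen5; the 6.5 GB/s per-drive read rate and the function names are assumptions for the example, not measurements of any particular drive.

```python
import math

# Raw per-lane transfer rates in GT/s for PCIe generations using 128b/130b encoding.
GT_PER_SEC = {3: 8.0, 4: 16.0, 5: 32.0}


def pcie_throughput_gb_per_s(generation: int, lanes: int) -> float:
    """Theoretical one-direction throughput in GB/s, before protocol overhead."""
    return GT_PER_SEC[generation] * (128 / 130) / 8 * lanes


def drives_per_gpu(generation: int, lanes: int, drive_gb_per_s: float) -> int:
    """Smallest number of drives whose combined read rate covers the GPU link."""
    return math.ceil(pcie_throughput_gb_per_s(generation, lanes) / drive_gb_per_s)


# Assumed example: a GPU on a Gen4 x16 link, fed by drives reading at 6.5 GB/s each.
print(round(pcie_throughput_gb_per_s(4, 16), 1))   # 31.5
print(drives_per_gpu(4, 16, drive_gb_per_s=6.5))   # 5
```

Real drives rarely sustain their headline rate under mixed workloads, so a figure like this is best treated as a lower bound on the drive count.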

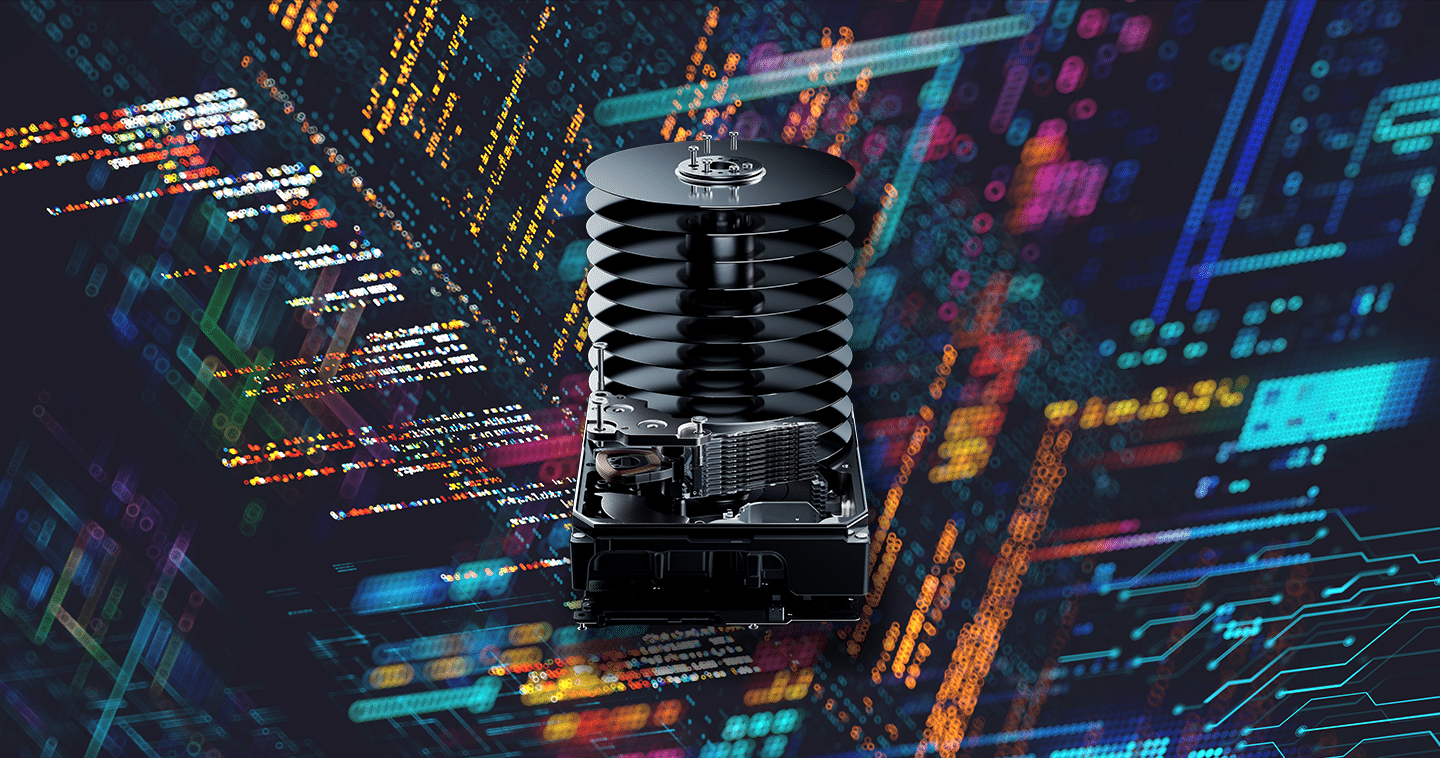

Can you tell us more about the storage demands for AI? It’s not quite as simple as NVMe SSDs being a universal answer, is it?

Fundamentally, it’s about understanding the workload characteristics of the model. NVMe SSDs play a pivotal role because, right now, that’s the fastest way of delivering data to a GPU, unless you’re using volatile memory. Memory is significantly more expensive, so that approach tends to be seen only in niche use cases.

But not all SSDs are architected the same. There are tradeoffs in capacity, endurance, and performance. So we need to match capacity, throughput, and IO requirements to the job. Different drives become useful at that point because we can tier accordingly. Each stage of the ML lifecycle and its data storage requirement can benefit from different types of drive architecture: SAS vs. NVMe, HDDs, and SSDs. It’s not one size fits all. Western Digital recognized that with its AI Data Cycle and the storage framework to support it.

Have NVMe over Fabrics become essential in modern data centers? And has AI simplified or complicated the choice between the multitude of fabric technologies and protocols?

I feel bad calling Fibre Channel niche because it was prevalent for so long, but the reality is that its adoption is waning and its roadmap isn’t well defined. InfiniBand was always the realm of the HPC environment. Neither can be considered a routable protocol, and with AI we need the flexibility to move data easily in data centers, without loss, while retaining throughput. So we have Ethernet, a network architecture that is well established, highly performant, standardized, scalable, and with a strong roadmap. In my eyes, Ethernet fundamentally is the future of moving data in data centers. It’s here to stay.

So now it’s about how you augment it. With NVMe, we get a very high-performance, very low-latency protocol that best complements flash. Now, if we use NVMe over Fabrics (NVMe-oF) using RoCE v2 (RDMA over Converged Ethernet), then we’re extending that protocol across the network, and this allows us to pool storage resources by adopting a disaggregated storage model. This is how we architect our AI and ML solutions using Western Digital’s OpenFlex Data24 JBOF as an example.

TCP in NVMe over Fabrics is also an option. It is, arguably, a lot simpler to implement and offers more flexibility. But right now, it doesn’t necessarily provide the low latency and high throughput that we require. So you can think of NVMe over Fabrics with RoCE v2 as the upper tier of performance in a well-managed fabric. In my mind, it is absolutely the protocol of choice.
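From the host’s side, the choice between RoCE v2 and TCP largely comes down to the transport named when connecting to the remote subsystem. The Python sketch below only builds (and does not run) an `nvme connect` command line for the nvme-cli tool. The target address and subsystem NQN are placeholders, the helper name is hypothetical, and port 4420 is assumed as the conventional NVMe-oF service port.

```python
from typing import List


def nvme_connect_args(transport: str, target_addr: str, subsystem_nqn: str,
                      service_id: str = "4420") -> List[str]:
    """Build an nvme-cli connect command; RoCE v2 uses the 'rdma' transport."""
    if transport not in ("rdma", "tcp"):
        raise ValueError("this sketch only covers the rdma and tcp transports")
    return [
        "nvme", "connect",
        "--transport", transport,     # rdma (RoCE v2) or tcp
        "--traddr", target_addr,      # IP address of the storage target
        "--trsvcid", service_id,      # service port on the target
        "--nqn", subsystem_nqn,       # name of the remote NVMe subsystem
    ]


# Placeholder values for illustration only.
ADDR = "192.0.2.10"
NQN = "nqn.2014-08.org.example:subsystem1"

print(" ".join(nvme_connect_args("rdma", ADDR, NQN)))
print(" ".join(nvme_connect_args("tcp", ADDR, NQN)))
```

The two commands differ only in the transport value; the performance gap described above comes from what the network and adapters do underneath, such as RDMA offload and a lossless fabric configuration.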

Let’s talk about one of the big AI conundrums: power. Computing complex AI models requires power-hungry systems like never before. How can the industry grapple with this?

Scale is the challenge—for data centers themselves, support utilities, and even grid infrastructure. Hyperscale companies are looking for gigawatt-scale data centers to support AI and machine learning; I have read up to 10 gigawatts in some instances.

This level of scale has to drive innovation in their design and use. Perhaps we will see the next generation of data centers designed around renewable energy with the inclusion of energy storage. Diesel is not the answer. We also discussed liquid cooling. While implementing liquid cooling can be twice as expensive as air cooling, it is considerably more efficient, and that delivers a payback. Then there is a fundamental reconsideration of data center layouts and, ironically, the use of AI to manage power: spinning down devices, advanced power management, voltage and frequency scaling, power gating, and so on.

Lastly, there is a reconsideration of how we build AI models to be more efficient, and this is already happening. Quality data is not cheap, but it makes a difference, and there is also room for improvement in how some models learn. While it is a separate discussion altogether, it will be interesting to see how many orders of magnitude remain in scaling large models. In the past, there has been a tandem progression of data set sizes, model sizes, and compute. One of these will become a bottleneck and will force workarounds and efficiencies.

Before we end, what are your thoughts about cloud vs. on-premises? How are companies evaluating these options?

I recall a webinar which discussed the following: a conservative price for a high-end eight-GPU instance in the cloud is about $30 an hour. Take, let’s say, a 70 billion parameter large language model that requires 128 GPUs: that is 16 such instances, or more than $11,000 a day. Over the 24 days of training required, that equates to about $276,000. That’s fairly close to how much it would cost you to buy a high-end GPU server, which makes on-prem a consideration.
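The Python sketch below reproduces those totals. It rests on one assumption: that the $30 hourly rate applies to an eight-GPU instance, so 128 GPUs means 16 instances. Priced per individual GPU, the same inputs would give a figure eight times higher. The function and parameter names are illustrative.

```python
import math


def cloud_training_cost(hourly_rate_usd: float, gpus_required: int,
                        gpus_per_instance: int, training_days: int):
    """Return (instances, cost per day, total cost) for a rented training run."""
    instances = math.ceil(gpus_required / gpus_per_instance)
    daily_cost = instances * hourly_rate_usd * 24
    total_cost = daily_cost * training_days
    return instances, daily_cost, total_cost


# Figures from the interview, plus the assumed eight GPUs per instance.
instances, daily, total = cloud_training_cost(
    hourly_rate_usd=30.0,
    gpus_required=128,
    gpus_per_instance=8,   # assumption, not stated in the interview
    training_days=24,
)
print(instances)  # 16
print(daily)      # 11520.0
print(total)      # 276480.0
```

Changing the training duration or the number of models to train shifts the break-even point against a purchased server, which is why the comparison has to be rerun for each organization’s workload.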

Now, most businesses do not have a 70 billion parameter model, even if some in the industry are well into trillion parameter models. But at the same time, most businesses also don’t have just one model to train. So there are a lot of factors you have to consider when finding the right cost model.

Costs aside, there is also security to consider. There are players who would like nothing more than to access a model’s weights and algorithmic construct. Industrial espionage is hardly a new phenomenon. Companies need to closely evaluate cloud AI labs to get a true understanding of security frameworks and their vulnerabilities. The same goes for on-premises.

Credits

Editor and co-author: Ronni Shendar
Research and co-author: Thomas Ebrahimi
Art direction and design: Natalia Pambid
Illustration: Cat Tervo
Design: Rachel Garcera
Content design: Kirby Stuart
Social media manager: Ef Rodriguez
Copywriting: Miriam Lapis
Copywriting: Michelle Luong
Copywriting: Marisa Miller
Editor-in-Chief: Owen Lystrup