Last week I was at Microsoft Ignite 2015, held May 4th – May 7th in Chicago. It was a wonderful experience, with over 20,000 people on the show floor, many great breakout sessions, and a multitude of enterprises, startups and SMBs showcasing their products and solutions.

Microsoft is a giant corporation with products and solutions in just about every technology space. Its business offerings are wide-ranging, spanning operating systems, databases, hardware devices, infrastructure platforms and applications that address a broad range of business needs. It's one of the largest ecosystems in the information technology space.

I was very keen to see Microsoft's roadmap towards Software-Defined Storage (SDS) in the Azure cloud and the products and solutions in the making. I want to take a moment to share some of my takeaways on Microsoft's SDS platform and the role of flash, which I'd summarize in three key points:

- Storage architectures are changing – companies of all sizes are deploying cloud and cloud-like environments to achieve better efficiencies and flexibility.

- Cloud and software-defined storage architectures depend on flash solutions.

- Flash is the Future: more and more solutions are being architected for all-flash, taking advantage of different types of flash devices to deliver performance and/or capacity benefits. Unlike HDDs, flash comes in many form factors with different capabilities, and SDS is able to capitalize on that variety.

Microsoft Cloud Platform System

In the Microsoft Cloud Platform booth, Microsoft demonstrated its current-generation cloud platform, called "Cloud Platform System" (CPS). This is not the place for a deep dive into the components of this system, but I would still like to provide some insight into how Software-Defined Storage (SDS) enables this cloud platform.

At a very high level, SDS can be defined as a system (or a set of systems) that provides shared storage which can scale up, out or down as needed, using storage devices connected to server hardware. Such solutions include a software stack that enables data services such as high availability, fault tolerance, snapshots and cloning, as found in traditional SAN storage arrays.

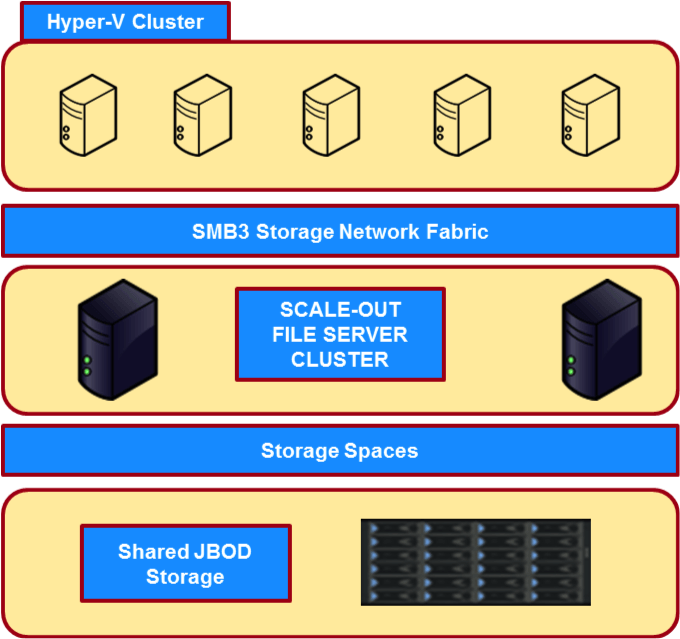

The high-level architecture of the Microsoft Cloud Platform can be seen in the image below, as it appeared in one of the sessions I attended during Ignite:

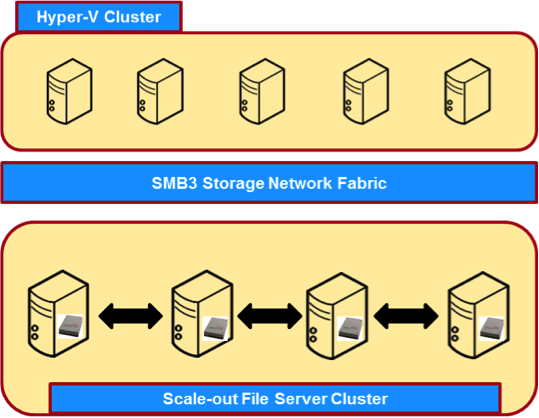

In the diagram, Hyper-V clusters provide the compute nodes for the cloud platform in a scale-out form. Similarly, the Scale-Out File Server (SOFS) cluster running on the storage servers acts as a scale-out storage layer, connecting to Just a Bunch of Disks (JBOD) enclosures as shared storage. This scale-out design is the foundation of the platform's software-defined storage.

The CPS described above uses hybrid tiered storage, in which a portion of the total capacity is 12Gb/s SAS flash SSDs. This flash tier holds the hot (frequently accessed) data so it can be read faster, while the remaining traditional magnetic media (HDDs) provide capacity.

Although this is not an all-flash solution, the success of the deployment depends on correctly sizing the hot data tier. If an application frequently accesses more data than the SSD tier can hold, those accesses fall through to the HDD tier, lowering application performance.
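The sizing concern above can be put into numbers with a simple back-of-the-envelope model. The sketch below is a minimal illustration, not how CPS actually tiers data: it assumes reads are spread evenly across the hot data set, so the share of reads served from flash equals the share of hot data that fits in the SSD tier. The function name and the latency figures are hypothetical.

```python
def effective_read_latency_ms(hot_data_gb: float, ssd_tier_gb: float,
                              ssd_latency_ms: float, hdd_latency_ms: float) -> float:
    """Estimate the average read latency of a two-tier (SSD + HDD) pool.

    Simplifying assumption: reads are spread evenly across the hot data set,
    so the fraction of reads served from flash equals the fraction of the hot
    data that fits in the SSD tier. All other reads go to HDD.
    """
    if hot_data_gb <= 0:
        raise ValueError("hot_data_gb must be positive")
    ssd_hit_ratio = min(1.0, ssd_tier_gb / hot_data_gb)
    return ssd_hit_ratio * ssd_latency_ms + (1.0 - ssd_hit_ratio) * hdd_latency_ms


if __name__ == "__main__":
    # Hypothetical latencies for illustration only, not measured CPS figures.
    SSD_MS, HDD_MS = 0.1, 8.0
    for hot_gb in (1000, 2000, 4000):
        latency = effective_read_latency_ms(hot_gb, ssd_tier_gb=1000,
                                            ssd_latency_ms=SSD_MS,
                                            hdd_latency_ms=HDD_MS)
        print(f"hot data {hot_gb} GB on a 1000 GB SSD tier: ~{latency:.2f} ms")
```

With these assumed numbers, doubling the hot data set beyond the SSD tier's size pushes average latency from flash-like levels to roughly half of HDD latency, which is why right-sizing the hot tier matters so much in a hybrid design.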

Microsoft’s Roadmap to Software-Defined Storage Technology

Before I share more of my thoughts, I should mention that this roadmap was presented during an Ignite breakout session. Keep in mind that Microsoft may change its strategy at any time.

Why the present model of storage is changing

Put simply, configuring shared Just a Bunch of Disks (JBOD) becomes complex as you scale out. As you connect more and more storage servers, sharing a JBOD among all the nodes becomes challenging, and at some point it reaches a limit.

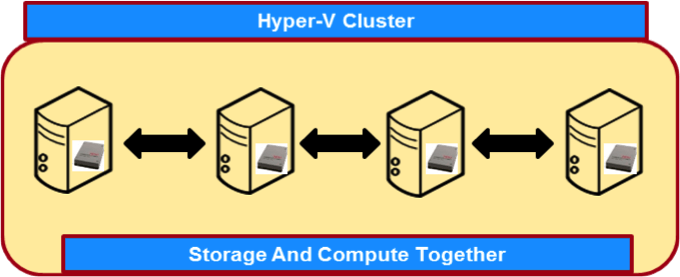

The architecture in the diagram above shows how Microsoft plans to overcome this challenge:

Hyper-Converged – Small and Medium Size Deployment

Here, the shared JBOD is replaced by storage installed in each server's own drive bays, with the software providing shared-storage functionality across all nodes. This removes the complexity of the current deployment model and makes scaling easier: if your cloud platform needs more storage and compute, you simply add more nodes to the existing deployment.

The main limitation of this deployment is that compute and storage cannot be scaled independently. However, because it is targeted at SMB deployments, it is unlikely that only compute or only storage will need to grow.

Converged – Large Size Deployment

The converged deployment shown in the diagram above removes the limitation of scaling compute and storage together. Here you can scale storage or compute independently as needed, and deploy much larger clusters for the cloud platform.
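The difference between the two models can be illustrated with a simple node-count calculation. This is a hypothetical sketch, not a Microsoft sizing tool: the function names, vCPU and TB figures are assumptions chosen for illustration. In the hyper-converged model every node adds both compute and storage, so whichever resource is scarcer sets the node count; in the converged model the two pools are sized separately.

```python
import math
from typing import Tuple


def hyperconverged_nodes(vcpus_needed: int, storage_tb_needed: float,
                         vcpus_per_node: int, tb_per_node: float) -> int:
    """Every node adds both compute and storage, so the scarcer resource sets the count."""
    return max(math.ceil(vcpus_needed / vcpus_per_node),
               math.ceil(storage_tb_needed / tb_per_node))


def converged_nodes(vcpus_needed: int, storage_tb_needed: float,
                    vcpus_per_compute_node: int,
                    tb_per_storage_node: float) -> Tuple[int, int]:
    """Compute and storage live in separate node pools, each sized on its own."""
    return (math.ceil(vcpus_needed / vcpus_per_compute_node),
            math.ceil(storage_tb_needed / tb_per_storage_node))


if __name__ == "__main__":
    # Hypothetical storage-heavy workload and node sizes.
    vcpus, tb = 100, 400
    hc = hyperconverged_nodes(vcpus, tb, vcpus_per_node=50, tb_per_node=40)
    compute, storage = converged_nodes(vcpus, tb, vcpus_per_compute_node=50,
                                       tb_per_storage_node=40)
    print(f"hyper-converged: {hc} nodes, {hc * 50} vCPUs provisioned for {vcpus} needed")
    print(f"converged: {compute} compute nodes + {storage} storage nodes")
```

In this assumed storage-heavy case, the hyper-converged plan needs 10 nodes and carries 500 vCPUs for a 100-vCPU requirement, while the converged plan provisions 2 compute nodes and 10 storage nodes. The converged plan is not necessarily fewer boxes; the point is that each resource is provisioned to its own requirement rather than dragged along by the other.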

Flash: the Enabler of Software-Defined Storage Technology

Both of the deployments above use all-flash tiered storage. Microsoft is adopting all-flash architectures, using high-endurance flash in the hot data tier and low-endurance flash in the cold data (capacity) tier. As enterprises adopt cloud environments and deploy cloud-aware applications, it's becoming increasingly clear that the underlying storage platform has to be flash because of its inherent performance and consolidation advantages.
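One common way to reason about which flash class belongs in which tier is drive writes per day (DWPD): the daily write volume a tier must absorb divided by its capacity. The sketch below is a simplified, hypothetical illustration of that reasoning, not Microsoft's placement logic. It ignores write amplification, assumes writes are spread evenly across the tier, and uses made-up endurance ratings rather than vendor specifications.

```python
from typing import Dict


def required_dwpd(daily_writes_tb: float, tier_capacity_tb: float) -> float:
    """Drive writes per day a tier must sustain: daily host writes / tier capacity.

    Simplification: ignores write amplification and assumes writes are spread
    evenly across all devices in the tier.
    """
    if tier_capacity_tb <= 0:
        raise ValueError("tier_capacity_tb must be positive")
    return daily_writes_tb / tier_capacity_tb


def pick_device_class(daily_writes_tb: float, tier_capacity_tb: float,
                      rated_dwpd: Dict[str, float]) -> str:
    """Return the lowest-endurance device class whose rating still covers the tier's need."""
    need = required_dwpd(daily_writes_tb, tier_capacity_tb)
    suitable = {name: dwpd for name, dwpd in rated_dwpd.items() if dwpd >= need}
    if not suitable:
        raise ValueError(f"no device class rated for {need:.2f} DWPD")
    return min(suitable, key=suitable.get)


if __name__ == "__main__":
    # Hypothetical endurance ratings, not vendor specifications.
    classes = {"high-endurance": 10.0, "low-endurance": 0.5}
    print("hot tier:", pick_device_class(20, 4, classes))         # 5 DWPD needed
    print("capacity tier:", pick_device_class(10, 100, classes))  # 0.1 DWPD needed
```

A small, write-heavy hot tier ends up needing high-endurance devices, while a large capacity tier that absorbs far fewer writes per terabyte can use cheaper, low-endurance flash.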

SanDisk® has the largest product portfolio of flash solutions, covering a range of capacities, endurance levels and storage interfaces. This lets us provide our customers with the right devices for both the capacity and performance tiers of Software-Defined Storage architectures, so they can take advantage of these new architectures.

Conclusion

The tiered storage approach is a fundamental concept for SDS and cloud storage, employing low-endurance devices for capacity and high-endurance devices for hot data or cache.

If we carefully observe the changes in the industry, the future of storage is all about server-attached, local flash storage, and that is the mantra of cloud deployment.

What's your experience with software-defined storage? Let me know in the comments below, or if you have any questions, you can reach me at biswapati.bhattacharjee@sandiskoneblog.wpengine.com