Automotive at the Edge: Machine Learning to Help Self-Driving Vehicles See Better

Innovators in the evolving automotive ecosystem converged at the recent Autotech Council meeting, hosted by Western Digital, to share their visions for a self-driving future. What their prototypes and solutions for autonomous vehicles had in common was a shift toward processing at the edge and the use of artificial intelligence (AI) and machine learning to make that future possible.

Read on to hear from automotive innovator Algolux, which is using machine learning to help self-driving vehicles see better.

There’s something missing from autonomous vehicles as they drive themselves down the road: a clear line of sight.

Being the only car on a desert highway in broad daylight is one thing. Operating on busy city streets is another. Think about your own experiences driving downtown in a big city: poorly maintained roads, traffic redirected around construction, and pedestrians who might step into the street at any moment.

Dealing with Messy Roads in Real Life

In the real world, road conditions are rarely ideal and usually messy. For drivers, quick decisions are simply a way of life on the road. If the goal is for autonomous vehicles to think like human drivers, then the vehicles need to see and react to everything in their path. As Dave Tokic, VP Marketing & Strategic Partnerships at Algolux, puts it, autonomous cars need autonomous vision.

“It’s really about perceiving much more effectively and robustly the surrounding environment and the objects, as well [as] enabling cameras to see more clearly.” – Dave Tokic

Autonomous vision is based on the idea that self-driving cars can learn to better perceive their surroundings in all types of driving conditions, including bad weather, low light, and dirty camera lenses. Algolux, a startup headquartered in Canada that recently announced a $10M USD Series A funding round, is developing a software platform that uses machine learning to help cameras on autonomous vehicles see and perceive better.

Algolux’s software takes input from an autonomous vehicle’s imaging system, whether from cameras, LIDAR, or another source, and processes it to understand the scene in difficult, real-world scenarios. It’s a novel approach that could help save lives and bring fully autonomous vehicles one step closer to reality.

Making Sense of Data from Self-Driving Vehicles

Autonomous vehicle developers are taking different approaches to their imaging systems. Some are betting on LIDAR, which uses pulsed laser light to measure the distance to surrounding objects. Others are using off-the-shelf cameras that have been specially tuned for driving. Each technology has its tradeoffs: LIDAR can detect objects farther out than cameras, but it is much more expensive. Regardless of the chosen technology, the sensor data still has to be processed, integrated, and fused to inform driving decisions.

“We actually ‘learn’ how to process for the particular task, such as seeing a pedestrian or bicyclist… It gives us quite the advantage of being able to see more than could be perceived before.” – Dave Tokic

Autonomous vehicles are learning to see more than ever before. The remaining challenge is to make the self-driving ecosystem safer and more reliable.

The Future of Self-Driving Cars is All About Data

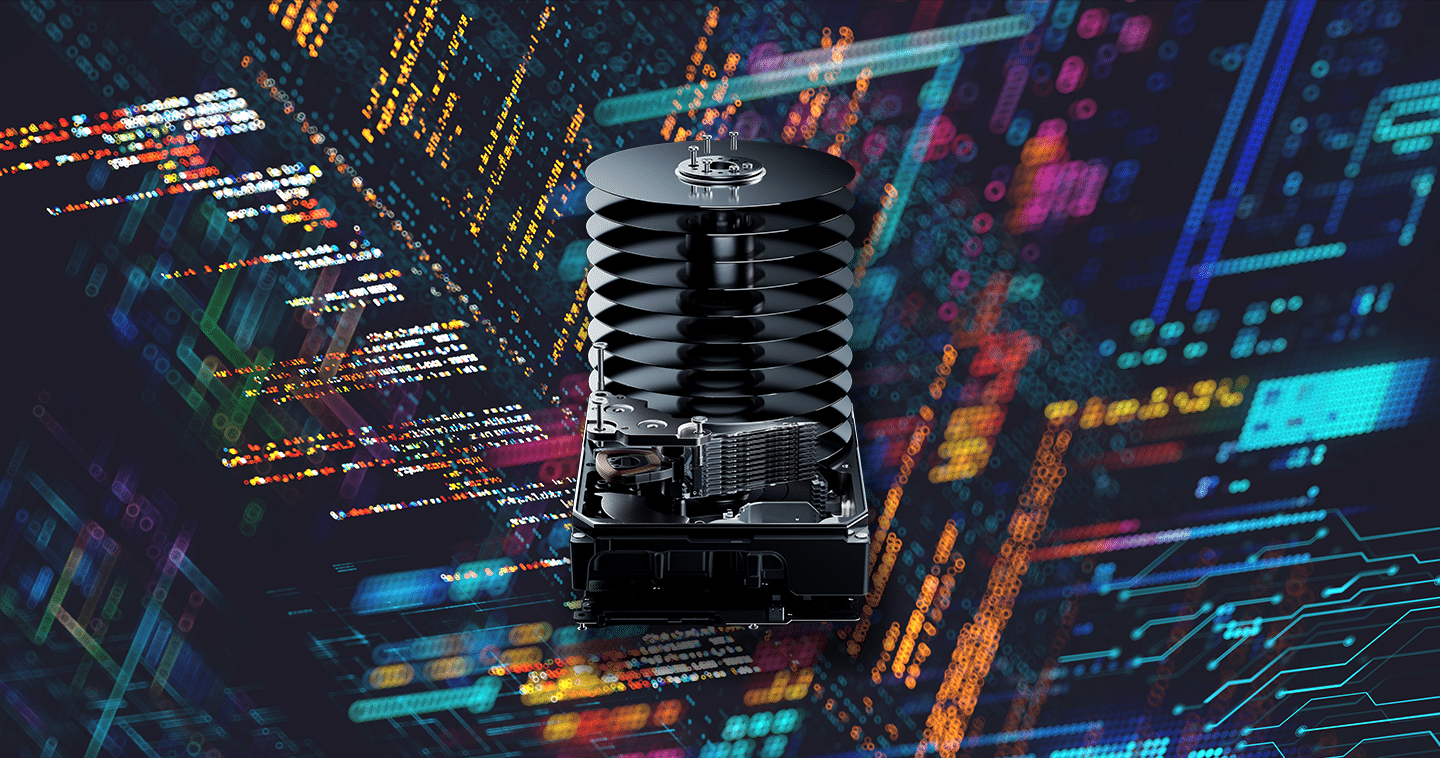

As the automotive industry transforms, data is without a doubt at the heart of the new smart edge devices that are revolutionizing the way we travel. Even before self-driving cars flood our roadways, the smart applications built into today’s cars mean there is more data to store and process than ever before. We expect a single autonomous car to carry more than 1 TB of storage by 2022.* That data travels back and forth between vehicles, across the network infrastructure at the edge, and to and from the cloud: big data and fast data, at the edge and in the cloud. The future of connected and autonomous cars is all about data.

As we move toward the autonomous vehicles of the future, data will drive the evolution of transportation. The right storage environment, built on edge-to-cloud solutions, will enable this future.

Western Digital is committed to the automotive market. Data is evolving and growing at an unprecedented rate, driven by technologies such as IoT, ADAS (advanced driver-assistance systems), cutting-edge infotainment and safety systems, and other connected technologies. A new approach to data storage is required to deliver the speed, agility, and longevity that various applications, workloads, and outcomes demand, and to make it economical to keep data alive at scale. We’re driving innovation across every layer of the infrastructure necessary to stay ahead of new demands. Our breadth of expertise and level of integration give us an unmatched ability to deliver carefully calibrated solutions for every type and use of data.

Learn More About Data in Automotive

- For a recap of the recent Autotech Council meeting hosted by Western Digital, read Garima Mathur’s blog.

- Hear the latest trends from automotive industry events

- Automotive Q&A with Martin Booth

*Based on internal estimates

Forward-Looking Statements

Certain blog and other posts on this website may contain forward-looking statements, including statements relating to expectations for our product portfolio, the market for our products, product development efforts, and the capacities, capabilities and applications of our products. These forward-looking statements are subject to risks and uncertainties that could cause actual results to differ materially from those expressed in the forward-looking statements, including development challenges or delays, supply chain and logistics issues, changes in markets, demand, global economic conditions and other risks and uncertainties listed in Western Digital Corporation’s most recent quarterly and annual reports filed with the Securities and Exchange Commission, to which your attention is directed. Readers are cautioned not to place undue reliance on these forward-looking statements and we undertake no obligation to update these forward-looking statements to reflect subsequent events or circumstances.