A Balancing Act: HDDs and SSDs in Modern Data Centers

It’s true that flash storage is getting faster and more affordable, but hard drives (HDDs) continue to play a major role in the enterprise. Nowhere is this more apparent than in today’s data centers, where the amount of data created keeps growing exponentially, fueled further by the AI Data Cycle. While consumers tend to […]

July 25, 2024 • 6 min read Data

Jodi Washington: Creator of Awesome Experiences

It’s all about the experience. Jodi Washington knows this. She embodies this sentiment whether at work or at play. When a colleague emails her, they are met immediately with a cheeky reply: “Email is so 2020,” leading to a live link inviting the sender to “Hit me up in Teams to find me in a […]

July 16, 2024 • 6 min read People

Game Day with Sports Photographer Terrell Lloyd

Terrell Lloyd, director of photography for the San Francisco 49ers American football team, knows how to capture data—to the tune of 15,000-20,000 images per game day. He’s now in his 27th season shooting photos of the NFL team. And while photography was not his first career, it was always his passion. When Lloyd had a […]

July 9, 2024 • 7 min read People

10 Questions with a Director of Operations

Cat Pham is the director of operations for consumer solutions in Western Digital’s Flash Business Unit. She’s a well-traveled Bay Area native who enjoys cooking different ethnic foods and volunteering her time at church and with a nonprofit organization that serves homeless youth in Silicon Valley. Her 3-year-old daughter also keeps her on her toes […]

July 2, 2024 • 2 min read People

Neil Robertson: Finding the Right Mix for Innovation

Neil Robertson is always on the lookout for the next big thing. As vice president of physical science research at Western Digital, Robertson is responsible for uncovering breakthrough technologies and bringing innovative ideas to fruition. Robertson manages teams of chemical, electrical, materials science, physics, and mechanical engineers who together investigate potential future data storage technologies. […]

June 25, 2024 • 6 min read People

What’s Your Create: Kyler Nathan IV

Everything begins with a story for Kyler Nathan IV. After the recording starts, he settles in, comfortable and composed. Nathan’s a true renaissance man, writing poetry, producing podcasts, educating students, and building communities. No matter what he’s focused on, it always comes back to stories. “When I look back at college, or any time in […]

June 18, 2024 • 6 min read People

Charting a More Inclusive Natural World

Wading into uncharted territory requires the tenacity not only to take that first step but also to self-advocate. Marine biologist Dr. Ruth Gates, evolutionary biologist Dr. Joan Roughgarden, and geologist Dr. Clyde Wahrhaftig are three LGBTQ+ scientists we’re celebrating this Pride Month who did just that, encouraging further research and representation in the natural sciences. […]

June 13, 2024 • 5 min read People

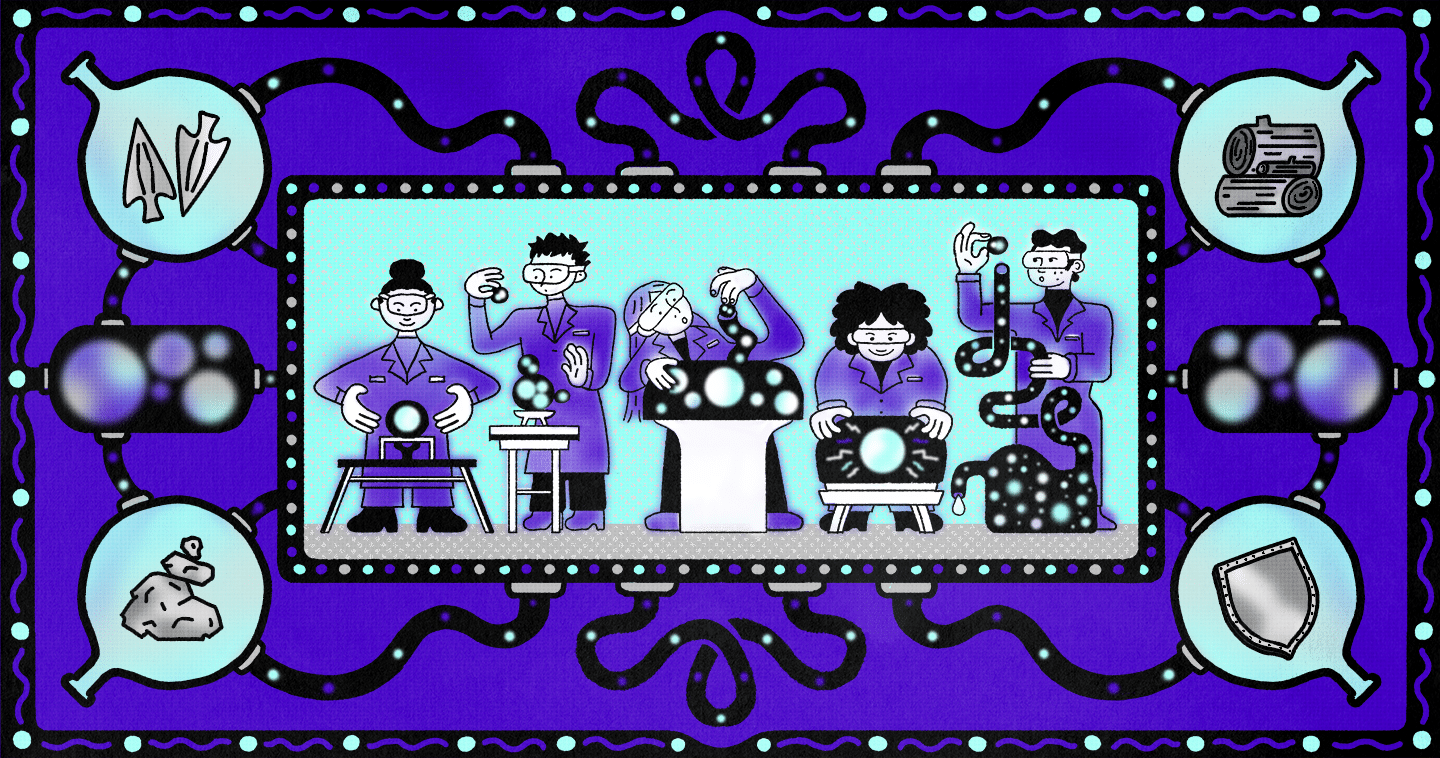

How Future Materials Will Define What’s Next

Human history has been defined by materials—the Stone Age, the Bronze Age, and the Iron Age. Materials serve as a measure of our progress, and new materials usher in transformative change. To explore what’s next, Western Digital talked with leading scientists and technologists about the possibilities of Future Materials. We live in an age where […]

June 11, 2024 • 18 min read Future Materials

Dr. Carlijn Mulder: A Leading Scientist’s Take on Magnets

Dr. Carlijn Mulder is the Dutch scientist leading Niron Magnetics’ Magnet R&D department. She is taking the company’s breakthrough material out of the lab and into the world, hoping to reconcile technological progress with environmental sustainability.

June 7, 2024 • 8 min read Future Materials

Bianca Cefalo: Why the Future of Materials Could Be in Space

A thermo-fluid-dynamic engineer on the 2013 NASA/JPL InSight Mars mission and a former Airbus Defense and Space thermal product manager, Bianca Cefalo is the CEO and co-founder of Space DOTS, a startup revolutionizing materials testing in space.

May 30, 2024 • 8 min read Future Materials