Feeding the GPU Beast – How to Leverage NVMe™ for AI & ML Workloads

Following the launch of the new Ultrastar Serv24-4N, our four-node storage server that delivers extremely fast, dense and efficient NVMe storage, we asked our partners at Excelero to share their insights on how best to leverage NVMe for AI & ML workloads.

By Tom Leyden, VP of Marketing at Excelero

In today’s digitized world, industry leaders get more out of every aspect of data analytics. They run state-of-the-art analytics applications, powered by GPUs and high-performance storage infrastructure, that capture, store and analyze more data faster so they can make better business decisions.

AI and ML use cases have exploded over the past few years, enabled by four major technology evolutions:

1. NEW SENSOR AND IOT TECHNOLOGIES have proliferated, capturing everything from images to temperature, heart rate and more, and adding ever-larger volumes of data.

2. NEW NETWORKING TECHNOLOGIES enable high-bandwidth data transfers, low latency, high IOPS and smart offloads.

3. THE RISE OF POWERFUL GPU TECHNOLOGIES, which lower the cost of compute on massive data sets, has made parallel processing faster and much more powerful.

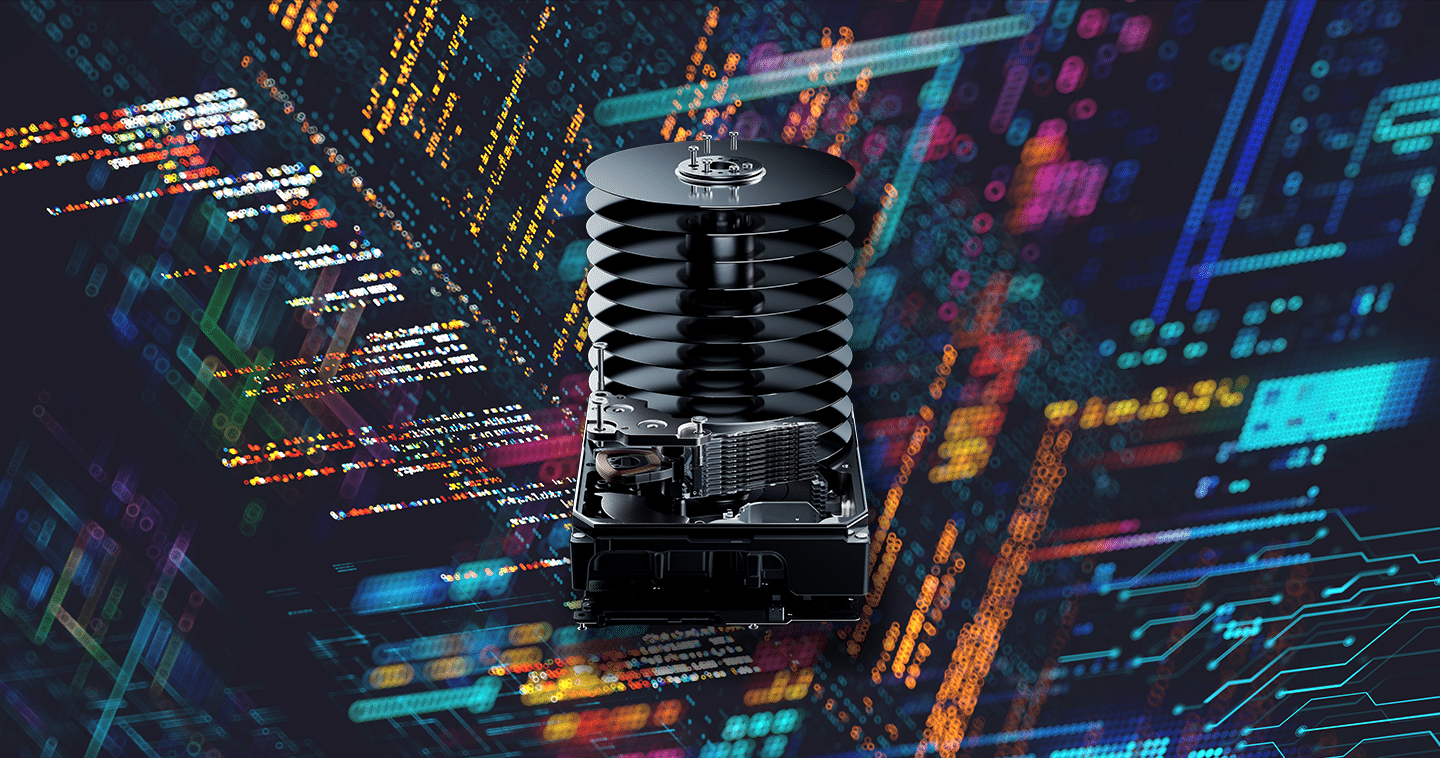

4. NEXT-GENERATION STORAGE INTERFACES AND PROTOCOLS SUCH AS NVMe bring new performance and architectural features to flash storage that are well suited to these new computational engines.

Feeding the GPU Beast

The biggest advantage of modern GPU computing also creates its biggest challenge: GPUs have an enormous appetite for data. Current GPUs can process up to 16GB of data per second. NVIDIA’s latest DGX-2™ system has as many as 16 GPUs but only 30TB (8 x 3.84TB) of local NVMe, and it is not optimized to use that flash efficiently. GPU servers from other vendors typically provide few PCIe lanes for local flash (NVMe or otherwise), so even their lowest-latency storage option is either a severe bottleneck or simply too small for the GPUs. Starving the GPUs with slow storage, or wasting time copying data, wastes expensive GPU resources and hurts the overall ROI of AI and ML use cases.
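To put these figures in perspective, here is a back-of-the-envelope sketch that simply multiplies the numbers quoted above. It assumes, optimistically, that all 16 GPUs pull data at the full 16GB/s at the same time; real workloads vary, so treat the results as an upper bound rather than a measurement.

```python
# Figures quoted in the text.
GPU_INGEST_GB_S = 16.0      # per-GPU data rate, GB/s
NUM_GPUS = 16               # GPUs in a DGX-2
NVME_DRIVES = 8             # local NVMe drives
DRIVE_CAPACITY_TB = 3.84    # capacity per drive, TB


def aggregate_demand_gb_s(num_gpus: int, per_gpu_gb_s: float) -> float:
    """Data rate needed to keep every GPU busy at once (assumes all GPUs run at full rate)."""
    return num_gpus * per_gpu_gb_s


def local_capacity_tb(drives: int, per_drive_tb: float) -> float:
    """Raw local NVMe capacity in TB."""
    return drives * per_drive_tb


def seconds_to_read_all(capacity_tb: float, demand_gb_s: float) -> float:
    """Time for the GPUs to consume the whole local flash once (decimal units: 1 TB = 1000 GB)."""
    return capacity_tb * 1000 / demand_gb_s


if __name__ == "__main__":
    demand = aggregate_demand_gb_s(NUM_GPUS, GPU_INGEST_GB_S)
    capacity = local_capacity_tb(NVME_DRIVES, DRIVE_CAPACITY_TB)
    print(f"aggregate GPU demand: {demand:.0f} GB/s")
    print(f"raw local NVMe: {capacity:.2f} TB")
    print(f"one full pass over local flash: {seconds_to_read_all(capacity, demand):.0f} s")
```

Under those assumptions the GPUs would want 256GB/s in aggregate, and one complete pass over the 30.72TB of raw local flash would take about two minutes.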

Leveraging NVMe for AI & ML

NVMe flash offers great benefits for specific AI use cases such as training a machine learning model. Machine learning involves two phases: training a model on a dataset, and running the trained model. Training is the more resource-hungry of the two.

Modern datasets used for model training can be huge: a single MRI scan, for example, can reach terabytes, and a learning system may use tens or hundreds of thousands of images. Even if the training itself runs from RAM, that memory must be fed from non-volatile storage, which has to sustain very high bandwidth. In addition, paging out old training data and bringing in new data must happen as fast as possible to keep the GPUs from sitting idle. That requires low latency, and NVMe is the only protocol that delivers this combination of high bandwidth and low latency.
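Keeping the GPUs busy while new data is brought in usually means overlapping data loading with computation. A minimal sketch of that idea is a bounded prefetch queue: a background thread reads the next batches from storage while the consumer (standing in for the GPU) works on the current one. This is a generic Python illustration, not NVMesh code; `read_batches` and the sleep-based timings are hypothetical stand-ins for real storage reads and GPU work.

```python
import queue
import threading
import time
from typing import Iterable, Iterator

_END = object()  # marker that tells the consumer the stream is finished


def prefetch(batches: Iterable, depth: int = 2) -> Iterator:
    """Yield items from `batches`, reading up to `depth` items ahead on a background thread."""
    buf: queue.Queue = queue.Queue(maxsize=depth)

    def producer() -> None:
        try:
            for item in batches:
                buf.put(item)  # blocks while `depth` items are already waiting
        finally:
            buf.put(_END)  # always signal completion, even if reading stops early

    threading.Thread(target=producer, daemon=True).start()
    while True:
        item = buf.get()
        if item is _END:
            return
        yield item


def read_batches(n: int) -> Iterator[int]:
    """Hypothetical storage reader: sleeps to simulate I/O latency."""
    for i in range(n):
        time.sleep(0.01)
        yield i


if __name__ == "__main__":
    for batch in prefetch(read_batches(5)):
        time.sleep(0.01)  # simulated GPU compute on `batch`
        print("processed batch", batch)
```

The `depth` parameter bounds how many batches sit in RAM ahead of the consumer; a larger depth can absorb more variation in storage latency at the cost of more memory.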

However, there is a limit to how many local NVMe drives can be used. It is often set by how many PCIe lanes can actually be allocated to NVMe drives, since the GPUs/SoCs also need PCIe lanes. Additionally, for checkpointing, multiple local NVMe drives must be synchronized to produce a usable snapshot.
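The lane constraint is simple arithmetic. The sketch below counts how many NVMe drives fit in the PCIe lanes that remain after GPUs and network adapters are connected. The lane widths (x16 per GPU and per NIC, x4 per NVMe drive) are common values but are assumptions here, the 128-lane platform in the example is hypothetical, and the model ignores PCIe switches, which many GPU servers use to fan out lanes.

```python
def max_nvme_drives(total_lanes: int, gpus: int, nics: int,
                    lanes_per_gpu: int = 16, lanes_per_nic: int = 16,
                    lanes_per_drive: int = 4) -> int:
    """Number of NVMe drives that fit in the PCIe lanes left after GPUs and NICs.

    Assumed lane widths: x16 per GPU and per NIC, x4 per NVMe drive.
    Ignores PCIe switches, which can share upstream lanes among devices.
    """
    used = gpus * lanes_per_gpu + nics * lanes_per_nic
    if used > total_lanes:
        raise ValueError(f"GPUs and NICs need {used} lanes but only {total_lanes} exist")
    return (total_lanes - used) // lanes_per_drive


if __name__ == "__main__":
    # Hypothetical 128-lane platform.
    print(max_nvme_drives(128, gpus=4, nics=2))
    print(max_nvme_drives(128, gpus=6, nics=2))
```

With four GPUs and two NICs on that hypothetical platform, 32 lanes remain, enough for eight x4 drives; add two more GPUs and no lanes are left for local flash.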


NVMesh for AI & ML

Fortunately, GPU servers also have massive network connectivity. They can ingest as much as 48GB/s over four to eight 100Gb ports, and that connectivity plays a key part in one way to solve this challenge.
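Network figures like these come from a simple conversion: a port's line rate in gigabits per second divided by 8 gives gigabytes per second. The sketch below computes raw line rate; the optional `efficiency` factor is a hypothetical knob for protocol overhead, since usable throughput is always somewhat lower than the raw figure.

```python
def ingest_gb_s(ports: int, port_gbit_s: float = 100.0, efficiency: float = 1.0) -> float:
    """Aggregate network ingest in GB/s.

    Divides bits by 8 to get bytes. `efficiency` (0 < efficiency <= 1) is a
    hypothetical scaling factor for protocol overhead; 1.0 means raw line rate.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    return ports * port_gbit_s / 8 * efficiency


if __name__ == "__main__":
    for ports in (4, 8):
        print(f"{ports} x 100Gb ports: {ingest_gb_s(ports):.1f} GB/s raw")
```

Four 100Gb ports give 50GB/s of raw line rate and eight give 100GB/s, before any protocol overhead.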

Excelero’s NVMesh is a Software-Defined Block Storage solution that features Elastic NVMe, a distributed block layer that lets AI & ML workloads use pooled NVMe storage devices across a network at local speeds and latencies. NVMe storage resources are pooled, and arbitrary, dynamic block volumes can be created from the pool and used by any host running the NVMesh block client.

As a result, customers can maximize GPU utilization by combining massive network connectivity with the low latency and high IOPS and bandwidth of NVMe, in a distributed, linearly scalable architecture.
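One common way a distributed block layer can present pooled drives as a single volume is striping: consecutive chunks of the volume's logical address space are spread round-robin across drives, which may sit in different servers. The toy model below shows only that address mapping. It is a hypothetical illustration of the general technique, not NVMesh's actual data layout or client API; the drive names and stripe size are made up.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class StripedVolume:
    """Toy model of a block volume striped round-robin across pooled NVMe drives.

    Hypothetical illustration only; not NVMesh's layout or client API.
    """
    drives: List[str]        # hypothetical drive identifiers, e.g. "node1/nvme0"
    stripe_blocks: int = 8   # blocks per stripe unit (hypothetical value)

    def __post_init__(self) -> None:
        if not self.drives or self.stripe_blocks <= 0:
            raise ValueError("need at least one drive and a positive stripe size")

    def locate(self, lba: int) -> Tuple[str, int]:
        """Map a volume logical block address to (drive, block offset on that drive)."""
        if lba < 0:
            raise ValueError("lba must be non-negative")
        unit, within = divmod(lba, self.stripe_blocks)  # which stripe unit, and where inside it
        drive_index = unit % len(self.drives)           # round-robin choice of drive
        row = unit // len(self.drives)                  # how many full rounds precede this unit
        return self.drives[drive_index], row * self.stripe_blocks + within


if __name__ == "__main__":
    vol = StripedVolume(["node1/nvme0", "node2/nvme0", "node3/nvme0"], stripe_blocks=8)
    for lba in (0, 8, 17, 25):
        print(lba, "->", vol.locate(lba))
```

Because consecutive stripe units land on different drives, a large sequential read can touch every drive in the pool in parallel, which is how aggregate bandwidth can grow with the number of drives.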


We built NVMesh to give customers maximum flexibility in designing storage infrastructure. As a 100% software-defined solution, NVMesh supports any Western Digital NVMe solution, including the new Ultrastar Serv24-4N, a performance-optimized platform for software-defined storage (SDS).

Chipset, core count and power can be customized to match varying workload and performance requirements. Combined with the Ultrastar Serv24-4N, NVMesh eliminates any compromise between performance and practicality, letting GPU-optimized servers access scalable, high-performance NVMe flash storage pools as if they were local flash. This approach ensures efficient use of both the GPUs and the associated NVMe flash. The result is higher ROI, easier workflow management and faster time to results.

Upcoming Webinar

We’re excited to take a deeper dive into how to architect low latency with NVMe at petabyte scale for AI & ML workloads in an upcoming webinar. Join experts from Western Digital and Excelero and learn how to:

• Architect a scale-out NVMe architecture

• Deliver microsecond latency at PB scale

• Deliver TB/s of performance to feed hungry GPUs


—

Tom Leyden is the vice president of corporate marketing at Excelero. He is an accomplished marketing executive who has excelled at creating thought leadership and driving growth for half a dozen successful storage and cloud computing ventures. Over the past three years, Tom has been educating the market on the benefits and opportunities of distributed NVMe storage for high-performance workloads. Prior to that, Tom was an avid evangelist of object storage and cloud computing technologies. Tom’s track record of technology startups includes data deduplication pioneer Datacenter Technologies (acquired by Symantec), early cloud innovator Q-layer (Sun/Oracle), Amplidata (HGST) and Scality.