How High-Performance Computing (HPC) Powers the Digital World

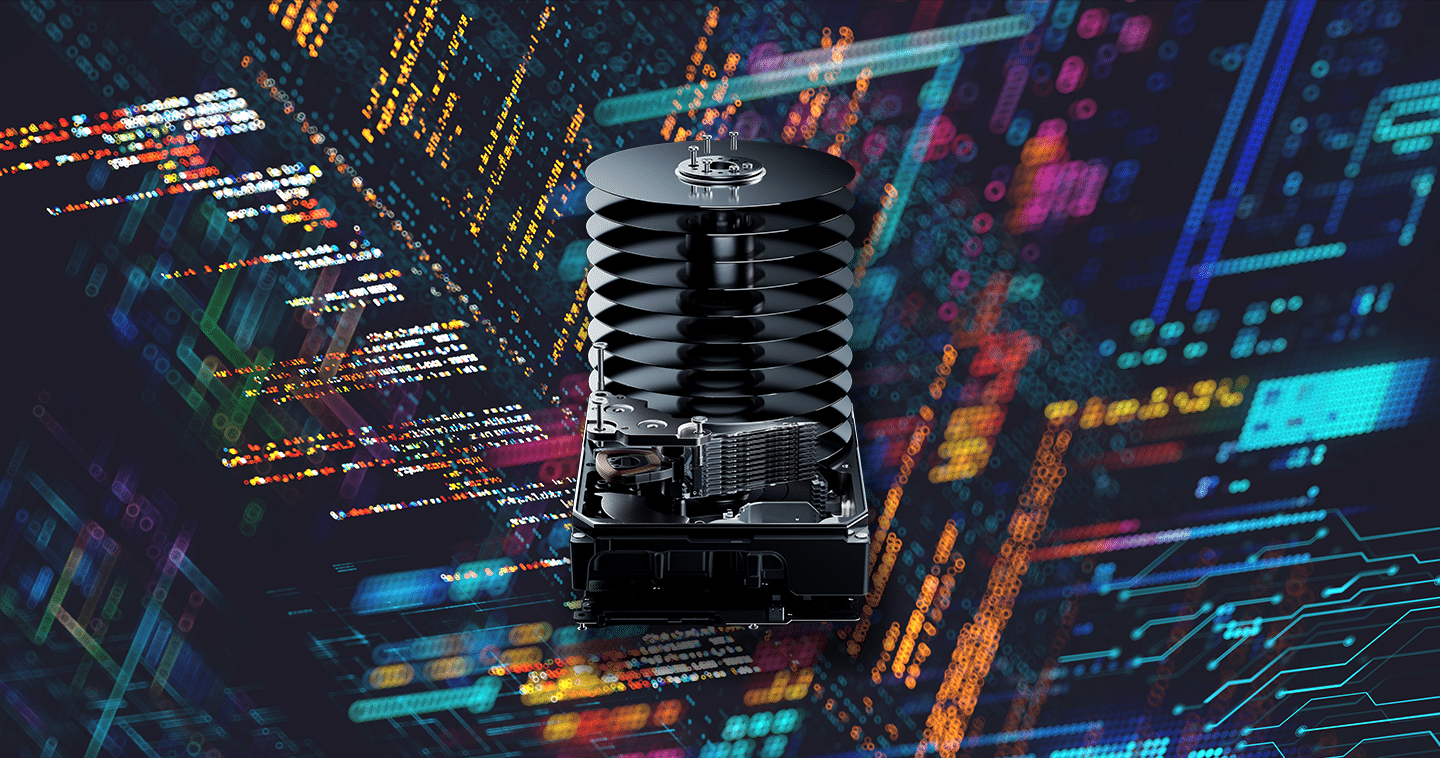

Walls lined with imposing obelisks, thrumming with energy. Spinning disks and whirring tapes, writing and rewriting the results of countless calculations. Tiny green lights, signaling and flickering amid a snarling nest of cables. While they’ve come a long way since, these were the hallmarks of the earliest supercomputers, dating back to the 1960s.

Today, supercomputers and similar high-performance computing (HPC) systems are vital pieces of information-era infrastructure. Yes, they still perform the kinds of high-end, complex calculations that sent astronauts to the moon, but they process bank transactions and render frames of animation too. Everything from web searches to social media posts filters through the job queue of a supercomputer somewhere.

What Is High-Performance Computing (HPC)?

High-performance computing is a somewhat opaque term that refers to the aggregation of computing resources to accomplish intricate, complicated tasks more quickly. When built correctly, these supercomputers and computer clusters outperform a single workstation by many orders of magnitude.

“HPC allows us to present resources to researchers that they simply don’t have access to on a single desktop,” said Chris Sullivan, director of research and academic computing at Oregon State University’s College of Earth, Ocean, and Atmospheric Sciences. While individual computers have accumulated impressive computing power and storage capacity over the years, nothing quite beats this aggregated power, which leverages a flotilla of processors and storage devices to churn through calculations.

“These systems are scaled up and built specifically to run extremely demanding applications at high speed with low latency, which requires many different components working together in concert,” said Volodymyr Kindratenko, director of the Center for AI Innovation at the National Center for Supercomputing Applications.

While the makeup of these systems is simple on the surface (it’s just a bunch of computers, after all), their components still need to meet exacting specifications day after day, year after year.

“These machines run millions of calculations at the same time, and they must be kept in sync. This requires GPUs and CPUs, connected via state-of-the-art interconnects, with access to a lot of storage. The ability to access data quickly, and to transfer it throughout the system, is the key to HPC systems,” Kindratenko said.

As Kindratenko sees it, while they’re expensive and challenging to maintain, these exacting standards and cutting-edge components all feed into the single purpose of HPC.

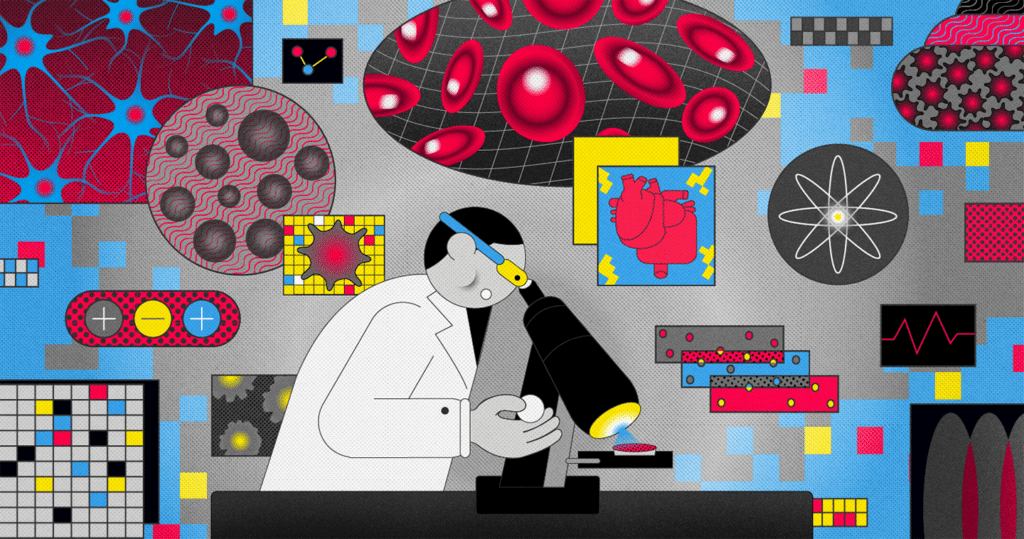

“The sole use of these machines is to solve problems that we cannot solve alone on a single system,” Kindratenko said. “We’re simulating black holes, discovering new materials, and curing diseases. That’s the whole point … solving problems and answering questions bigger than we can comprehend on our own.”

How HPC Systems Work at Scale

While there is still a clear demand for HPC systems that can run these mind-boggling simulations, a mismatch has surfaced between the computing power needed and the power that’s available. On one side, solving bigger problems requires bigger machines. On the other, the programs and researchers that can use HPC systems to their fullest are few and far between.

“These machines, for a long time and a long time still, will be niche systems. The larger they are, the fewer the applications can take advantage of them,” Kindratenko said.

Still, this surplus capacity broadens access to computing power across an entire university, since resources can be shared among multiple smaller projects running simultaneously on the same system.

“If you look at my project studying the health of our oceans, our devices on the Oregon coast produce 10 TB of data in a week. A human would go through that in a year, and by then it’s not useful; I have no idea the rate at which we’re damaging the planet,” Sullivan said.

Scaling these systems amplifies their impact. A single system isn’t just crunching the numbers on cancer treatments; it’s also solving a molecular dynamics problem and simulating virus behavior, all at the same time. More resources directly accelerate the rate of discovery in labs all over the world.

“Science is all about rates, in so many ways. We need to be able to process our data in a timely fashion, and that’s why we need HPC,” Sullivan said.

HPC in Real Life: Media, AI, and Research

Outside of scaling research, HPC systems have found their way into a variety of industries that leverage their power in surprising ways.

“There’s just so much HPCs do. They’re everywhere. Every single post on social media, your bank transactions, your web searches—all of that is powered by HPCs,” Sullivan said. In many ways, our digital lives are mediated entirely by HPCs around the world.

“Almost every single thing you do on the internet is a job that gets farmed out to an HPC in some way or another. It’s in the background all the time because your one computer simply couldn’t do it. You can’t put 6 billion people on one computer, but you can spread them out across a million computers. HPC is what enables the internet to exist,” Sullivan said.

Spreading that work across millions of machines, accessed by billions of people every day, is made possible in part by the shrinking cost and size of HPC systems.

“What you’re seeing is that we don’t need gigantic spaces or gigantic systems. We can make them smaller and tie them together. While the construction of these huge supercomputer systems is on the decline, the construction of HPC systems, as a whole, is up,” Sullivan said.

Building small and medium-sized HPCs and tying them together, therefore, is one of the keys to the future of our digital lives; it makes access to computing power more ubiquitous, more resilient, and more logistically sound. Sullivan and his team are currently working to tie together the computing resources of Oregon State’s main campus in Corvallis, Ore., with those of its satellite campus in Bend, Ore., as a proof of concept for this future of computing.

“We’ve run into several issues with our current HPC systems: space, power consumption, cooling, and network speeds. These are often expensive and hard to [solve] in cities and can limit access or growth of these resources. But if we can move some of these resources to Eastern Oregon, where we can use sustainable geothermal power and there’s more space, while still offering the same computing power, that’s a game changer,” Sullivan said of the project.

It’s a small start, but this is the future Sullivan envisions: millions of computers, clustered together, linked with high-speed networking, ensuring that everyone who needs it has access to HPC resources.

The Future of HPC: Put It Everywhere

In many ways, HPC’s story reflects the growth of computing as a whole. What was first a niche resource hidden away in university basements boomed into a quintessential yet quotidian part of life. Computing became faster and cheaper, bringing its benefits to businesses and consumers alike. Now, as billions of people live online, with many more on the way, the technology needs to find sustainable ways to become ubiquitous and resilient.

In other words: make it fast, make it small, and put it everywhere.