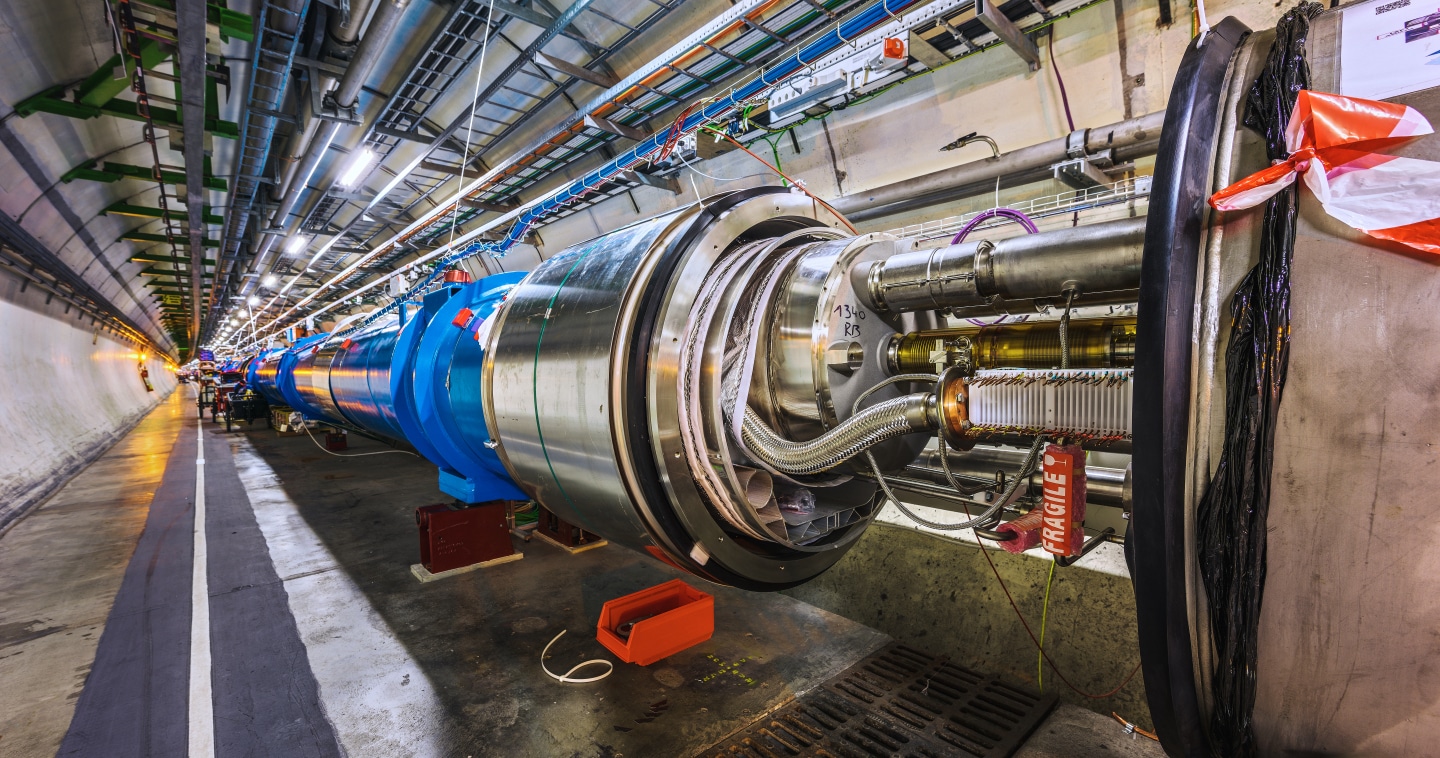

Image of the LHC tunnel during LS2 (Long Shutdown 2) by Brice, M., courtesy of CERN

Tucked beneath the countryside on the outskirts of Geneva lies the world’s largest and most powerful particle-smashing machine: the Large Hadron Collider (LHC).

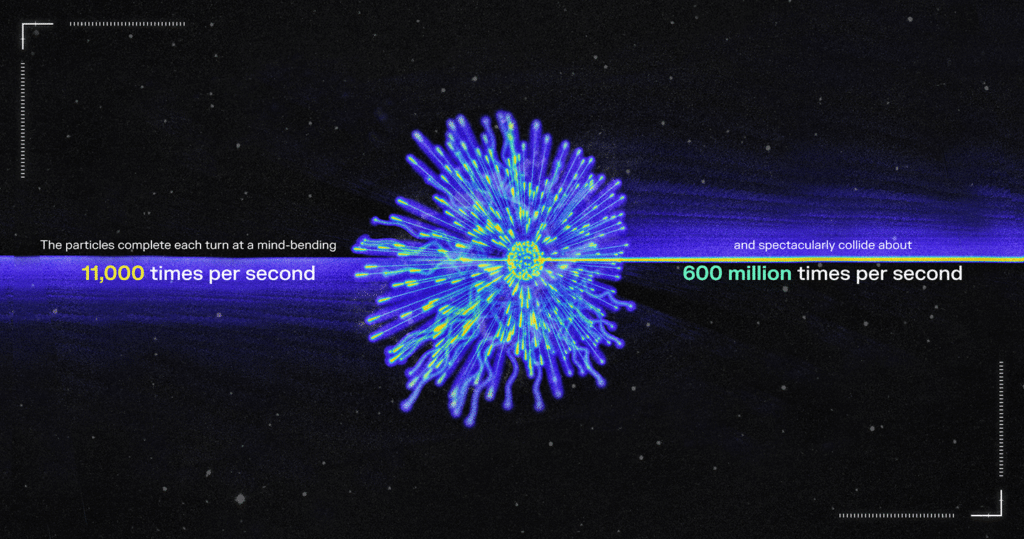

Run by the European Organization for Nuclear Research (CERN), the LHC probes the universe's origins by recreating the conditions that existed just after the Big Bang. Housed in a 17-mile circular underground tunnel, the LHC accelerates protons and ions to nearly the speed of light. The particles race around the ring a mind-bending 11,000 times per second and collide spectacularly about 600 million times per second.
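The lap rate is a useful sanity check on the "near light speed" claim: multiplying laps per second by the length of the ring gives the particles' speed. The sketch below assumes a ring circumference of about 26,659 m (roughly 16.6 miles, the "17-mile" tunnel) and uses the rounded figure of 11,000 laps per second. The constant and function names are illustrative, not taken from any CERN tool.

```python
# Rough check: lap rate x ring circumference should land just under the speed of light.
# Assumptions: circumference of about 26,659 m and the rounded 11,000 laps/s quoted above.
SPEED_OF_LIGHT_M_S = 299_792_458  # exact, by definition of the metre
CIRCUMFERENCE_M = 26_659          # LHC ring circumference, about 16.6 miles
LAPS_PER_SECOND = 11_000          # rounded lap rate

def fraction_of_light_speed(circumference_m: float, laps_per_second: float) -> float:
    """Return the implied particle speed as a fraction of the speed of light."""
    speed_m_s = circumference_m * laps_per_second
    return speed_m_s / SPEED_OF_LIGHT_M_S

if __name__ == "__main__":
    beta = fraction_of_light_speed(CIRCUMFERENCE_M, LAPS_PER_SECOND)
    print(f"{beta:.3f} c")
```

With the rounded lap rate the result is about 98% of light speed; plugging in the more precise revolution frequency of roughly 11,245 laps per second brings it to within a hair of c.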

The total collision energy is nowhere near enough to birth another universe, but because it is concentrated in minuscule proton beams, the collisions give physicists clues about the fundamental constituents of matter and the forces governing our universe.

A petabyte per second

CERN's computing infrastructure is as ambitious as its scientific endeavors. Subatomic particles are invisible to the naked eye, so physicists must devise ways to detect, measure, and visualize the trillions of particles shooting out of each collision, some of which exist for a mere septillionth of a second (a decimal point followed by 23 zeroes and then a 1).
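A septillionth (short scale) is 10^-24, and it is easy to miscount the zeroes when writing it out longhand. This small sketch uses Python's exact `decimal` module to print the number in positional form and count the zeroes between the decimal point and the first nonzero digit; the helper name is purely illustrative.

```python
from decimal import Decimal

# One septillionth (short scale) is 10**-24, represented exactly as a Decimal.
SEPTILLIONTH = Decimal(10) ** -24

def zeros_after_decimal_point(value: Decimal) -> int:
    """Count the zeros between the decimal point and the first nonzero digit."""
    digits = format(value, "f").split(".")[1]  # fractional digits, e.g. "0...01"
    return len(digits) - len(digits.lstrip("0"))

print(format(SEPTILLIONTH, "f"))
print(zeros_after_decimal_point(SEPTILLIONTH))  # 23
```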

With layer upon layer of complex devices, the LHC detectors generate a staggering petabyte of collision data per second. As Eric Bonfillou, the deputy group leader of CERN’s IT Fabric group, aptly put it, “it’s an amount of data no one can really deal with.”

Computing farms at the LHC sift and filter this data in real time, and only the most essential data are shipped to CERN's primary data center, now home to more than an exabyte.
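A quick back-of-envelope calculation shows why real-time filtering is unavoidable. Assuming, purely for illustration, that the detectors produced a petabyte per second continuously for a full day (in practice beam time is not continuous), and using decimal SI prefixes, the raw output would dwarf the entire data center's holdings:

```python
# Back-of-envelope: what would one petabyte per second add up to over a day?
# Assumptions: continuous operation for 24 hours; SI prefixes (1 PB = 10**15 bytes).
PETABYTE = 10**15
EXABYTE = 10**18
SECONDS_PER_DAY = 24 * 60 * 60

def raw_bytes(rate_bytes_per_s: int, seconds: int) -> int:
    """Total bytes produced at a constant rate over the given number of seconds."""
    return rate_bytes_per_s * seconds

per_day = raw_bytes(PETABYTE, SECONDS_PER_DAY)
print(per_day / EXABYTE)  # 86.4
```

Roughly 86 exabytes a day, against a data center holding a little over one exabyte in total, which is why only a tiny, carefully selected fraction of collisions is ever written to storage.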

Bonfillou and the IT Fabric group procure, manage, and operate the layer providing IT equipment for CERN’s experiments and user community, including the data centers and associated computing facilities.

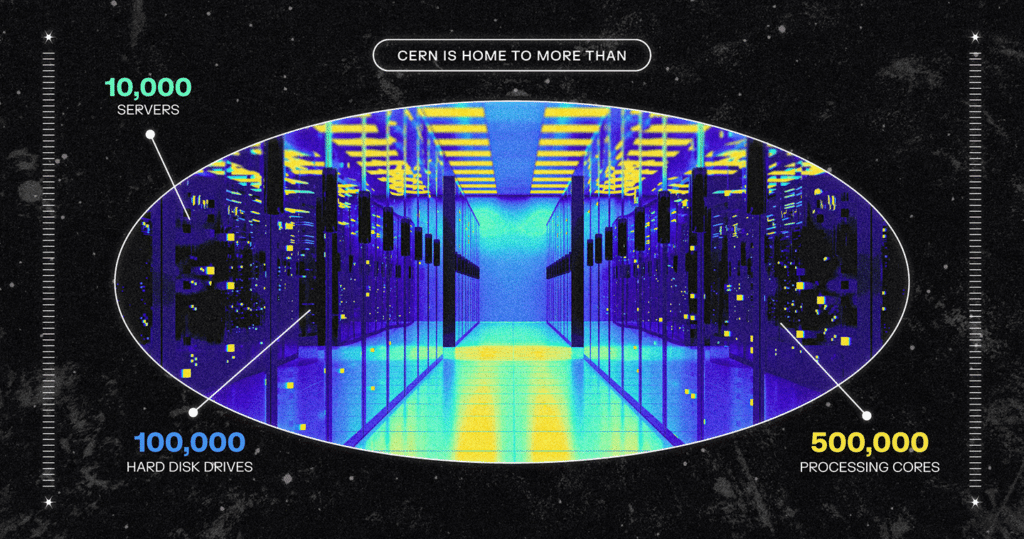

Over the years, Bonfillou has watched CERN’s IT data center evolve from modest PC farms stacked on shelves to the current industry standard rack architecture. The data center is home to more than 10,000 servers, 100,000 hard disk drives, and nearly 500,000 processing cores. It’s one of the most demanding computing environments in the research world.

“The challenge is that we always have to put more resources, storage capacity, and computing power within existing boundaries,” said Bonfillou.

Those constraints are primarily cost and power. Because CERN is a publicly funded institution supported by 23 member states, one of its most critical metrics is storage efficiency: maximizing capacity for every dollar spent.

Bigger, faster, more energy

The LHC recently underwent its second major upgrade in preparation for a series of experiments known as Run 3, which smashes particles at an unprecedented collision energy. With much of the particle accelerator chain upgraded, the collider can produce more energy and drive more collisions, allowing the experiments to study infinitesimal particles with greater precision.

But all that brawny goodness meant Run 3 would collect more data than the first two runs combined.

To store this data, Bonfillou and his team had to find new solutions. The challenge was not only aggregating massive capacity but also pushing tremendous amounts of data to storage fast enough.

Flash storage would offer far higher performance than hard drives, but "the costs for capacity would be up through the roof, and I'd fail at my job," said Bonfillou.

Yet aggregating the performance of many hard drives comes with its own challenges. Enterprise hard disk drives (HDDs) are designed to withstand the most rigorous data operations, but the harder they work, the more heat they generate. When many drives are crammed into a server, they can hit temperature thresholds that cause them to throttle performance to keep from overheating.

In addition, HDD read and write heads are positioned with micron-level precision (a micron is about 1/90 of the thickness of a human hair). When a few dozen hard drives spin next to one another at maximum speed, they can generate vibrations that disturb head positioning, which in turn reduces throughput and performance.

A new generation of JBODs

In the search for new solutions, Bonfillou meets regularly with CERN's technology partners, such as Western Digital. CERN and Western Digital have a long-standing partnership, and quarterly meetings allow technologists to discuss current products used by CERN and share developments in Western Digital's portfolio of hard drive and flash solutions.

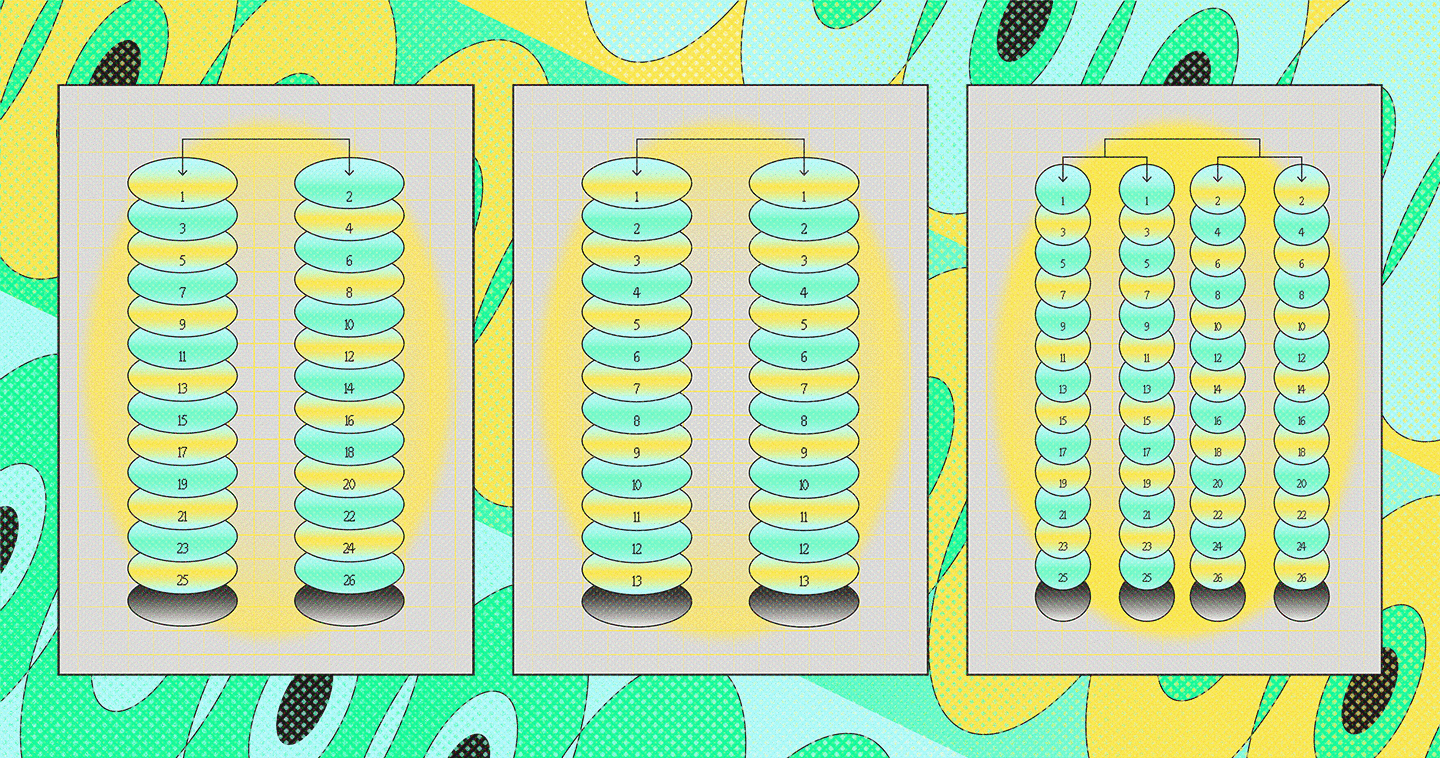

When Bonfillou shared the requirements of the upgraded collider, the Western Digital team suggested testing the company's new series of JBODs (Just a Bunch of Drives), the Ultrastar hybrid storage platforms.

JBODs are the workhorse of most large data centers (CERN, too, has been using them for more than a decade). The no-frills, rack-sized enclosures are designed to deliver expandable capacity with little complexity, which is why they aren’t an obvious hotbed for tech innovation.

Yet several years ago, Western Digital realized it could use its expertise in HDD and SSD manufacturing to develop enclosures uniquely optimized for its devices, innovating on a data center mainstay.

With its Ultrastar storage platforms, the company introduced several technologies to better circulate air within enclosures while reducing rotational vibrations, in some cases by more than 60%. So even when all the drives are working hard, performance can be better maintained. The result is a higher-performing JBOD that also runs cooler and consumes less electricity.

The numbers looked promising for the demands of Run 3, but the JBODs would need to go through CERN’s fantastically rigorous evaluation.

New science and a new data center

After more than three years of shutdown, the LHC's Run 3 kicked off in summer 2022. More than 100 tons of superfluid helium flowed through the LHC's pipes, cooling its magnets to -456°F (colder than outer space), and particles began swooshing through one of the world's most complex scientific instruments.
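The "-456°F" figure corresponds to the 1.9 kelvin at which the LHC's superconducting magnets operate, and "outer space" here means the roughly 2.7 K temperature of the cosmic microwave background. A minimal conversion sketch, using the standard formula F = K × 9/5 − 459.67 (the kelvin values are the only inputs added beyond the article):

```python
def kelvin_to_fahrenheit(kelvin: float) -> float:
    """Convert a temperature from kelvin to degrees Fahrenheit."""
    return kelvin * 9 / 5 - 459.67

LHC_MAGNETS_K = 1.9   # operating temperature of the LHC's superconducting magnets
DEEP_SPACE_K = 2.725  # cosmic microwave background temperature

lhc_f = kelvin_to_fahrenheit(LHC_MAGNETS_K)
space_f = kelvin_to_fahrenheit(DEEP_SPACE_K)
print(f"LHC magnets: {lhc_f:.1f} F, deep space: {space_f:.1f} F")
print("LHC colder than space:", lhc_f < space_f)
```

The magnet temperature converts to about -456°F, matching the article, and sits a couple of degrees Fahrenheit below the cosmic background.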

Bonfillou admits that his excitement for the launch was coated with a dash of nervousness. “At CERN, we are doing something that doesn’t exist anywhere. It’s unique. You never know, you can’t compare, and you can’t guarantee that it’ll all work,” he said.

But everything is running smoothly, confirmed Bonfillou. Western Digital’s JBODs fulfilled every stringent power, performance, capacity, and reliability requirement and are now integrated into CERN’s data center, which has recently exceeded the exabyte threshold. Each box, roughly the size of a coffee table, can pack up to a whopping two petabytes of storage. Together, they deliver many petabytes of capacity while meeting the experiments’ data throughput demands, with some enclosures even used directly at the LHC collision sites.

Bonfillou and his team have little time to celebrate these successes. CERN is finishing the construction of a new data center to extend its computing capabilities for the next run, Run 4, which is expected to start in 2029.

The new data center is located on the French side of the border and will give Bonfillou's team additional computing capacity.

"We are preparing for the foreseen computing needs of Run 4, but it's not straightforward, as there is absolutely nothing available on the market today that would allow [us] to transfer and store data at its estimated figures," he said.

As scientists continue to seek answers within the LHC’s experiments, they will rely on the capabilities of the CERN Data Centers and their technology partners to innovate tirelessly and push the boundaries of what’s possible on the quest to probe our universe.

—


Artwork by Cat Tervo