Virtualization is an area I have always been interested in and like to explore. I have written many blog posts that showcase how flash-based solutions (SSDs) can benefit virtualized environments. Those studies largely focus on software-defined storage (SDS) and server-attached storage use cases, where SSDs can play a critical role in improving application performance and meeting SLAs.

Continuing my research, I wanted to explore another software-defined storage (SDS) solution: Microsoft Storage Spaces. This SDS solution from Microsoft has existed for a while and has evolved with each release of the Microsoft operating system.

From my preliminary study, I learned that Microsoft Storage Spaces is mostly deployed in the Microsoft Scale-Out File Server (SOFS) scenario. If you are not familiar with it, I suggest starting with these articles: “Scale-Out File Server for Application Data Overview” and “Storage Spaces Overview”. They explain the different deployment possibilities.

A stand-alone Storage Spaces setup (a single Windows server with local disks attached), like the one I used in my testing, is rarely seen in actual deployments. The goal of my research, however, is to validate how much flash can benefit this SDS architecture. SOFS is the more practical deployment, but Storage Spaces is one of its underlying building blocks. My belief is that the results we achieved can be extrapolated to a larger deployment with a more complex architecture.

Brief Storage Spaces Explanation

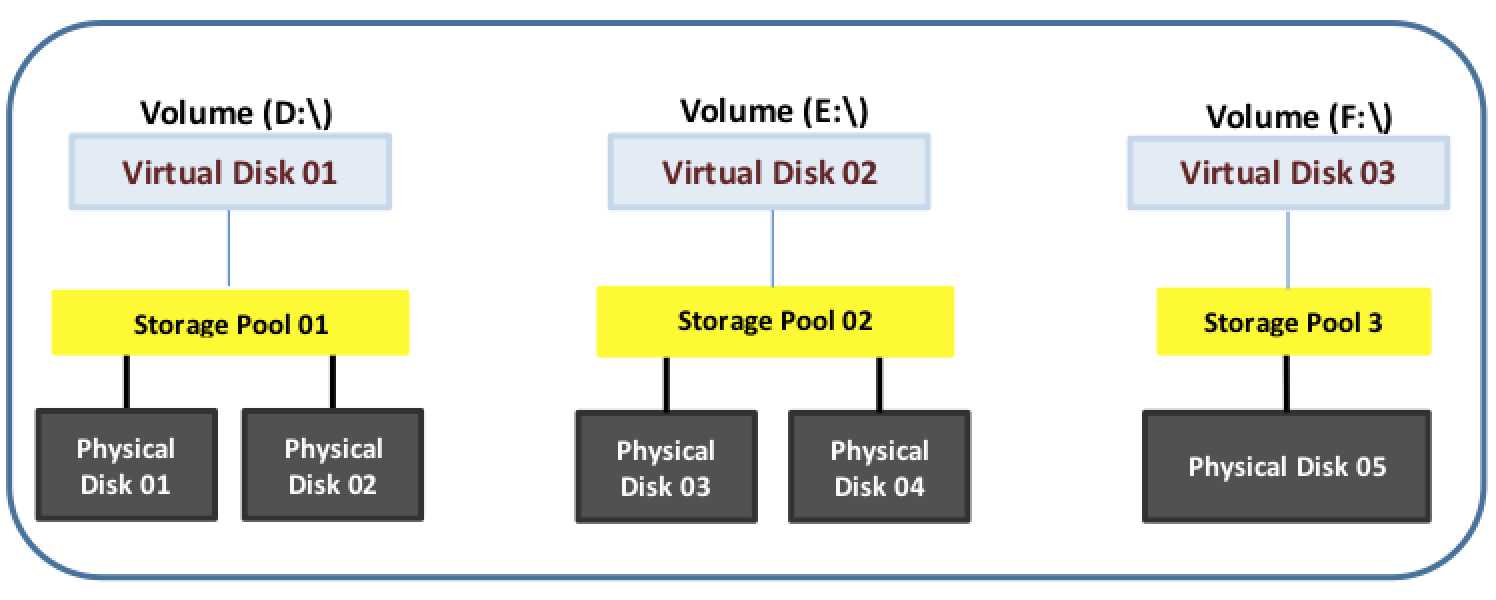

I’ll use the simple diagram below to explain what Storage Spaces looks like:

As you can see, you select a set of disks in your physical machine, or in a JBOD connected to it, and group them together. This disk group is termed a “storage pool”. From the pool you carve out a “Virtual Disk”, which behaves much like a LUN, and when you create it you choose the resiliency layout (simple, mirror, or parity) that matches your availability and performance requirements. You then format the virtual disk with the NTFS or ReFS file system to create traditional volumes such as the D:\ and E:\ drives.
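The layering described above (physical disks, storage pool, virtual disk, volume) can be sketched as a small data model. This is only an illustration, not how Windows implements it: the class names, the disk sizes, and the capacity rule are assumptions, metadata overhead is ignored, and the resiliency setting is attached to the virtual disk, as the `New-VirtualDisk` cmdlet's `-ResiliencySettingName` parameter allows.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List


class Resiliency(Enum):
    SIMPLE = "simple"   # striping only, no redundancy
    MIRROR = "mirror"   # two-way mirror assumed in this sketch
    PARITY = "parity"


@dataclass
class StoragePool:
    """A named group of physical disks (sizes in GB are hypothetical inputs)."""
    name: str
    disk_sizes_gb: List[float]

    @property
    def raw_capacity_gb(self) -> float:
        return sum(self.disk_sizes_gb)


@dataclass
class VirtualDisk:
    """A LUN-like disk carved out of a pool with a chosen resiliency layout."""
    pool: StoragePool
    resiliency: Resiliency

    def max_usable_gb(self) -> float:
        raw = self.pool.raw_capacity_gb
        if self.resiliency is Resiliency.SIMPLE:
            return raw          # every byte of raw capacity holds data
        if self.resiliency is Resiliency.MIRROR:
            return raw / 2      # each piece of data is stored twice
        # Parity efficiency depends on the column count, so it is left out.
        raise NotImplementedError("parity capacity is not modeled in this sketch")


@dataclass
class Volume:
    """What the end user sees: a drive letter formatted with NTFS or ReFS."""
    drive_letter: str
    file_system: str
    virtual_disk: VirtualDisk
```

For example, `Volume("D", "NTFS", VirtualDisk(StoragePool("ssd-pool", [400.0] * 4), Resiliency.SIMPLE))` models a D:\ drive backed by a simple space over four hypothetical 400 GB disks.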

From the end-user perspective, they still see a traditional volume. However, the underlying technology is not a single drive initialized and formatted, or software RAID, but rather Storage Spaces, which provides resiliency, availability, and performance to the applications running on top of these volumes.

This is a very basic architecture, and you can do a lot more on top of it, such as Cluster Shared Volumes and other services and roles of Windows Server 2012 and later.

Testing Environment

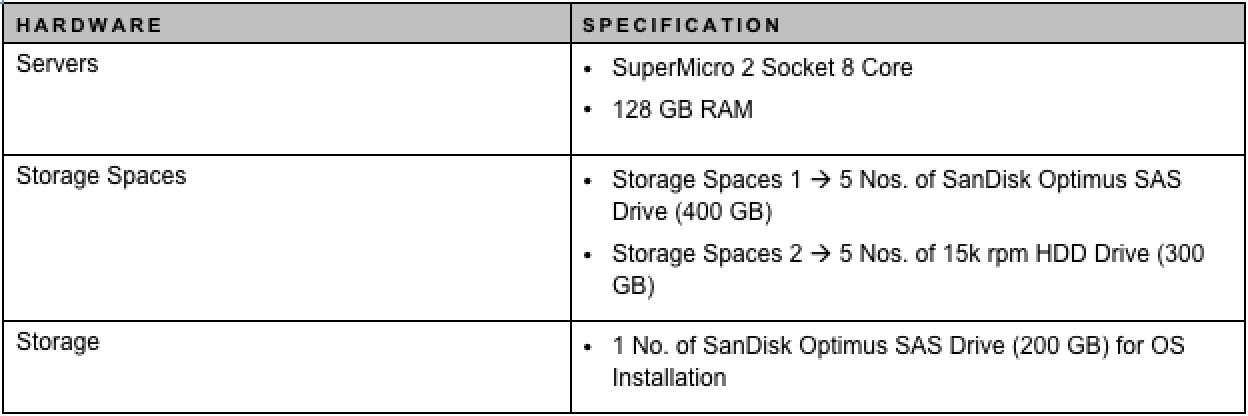

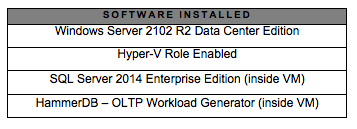

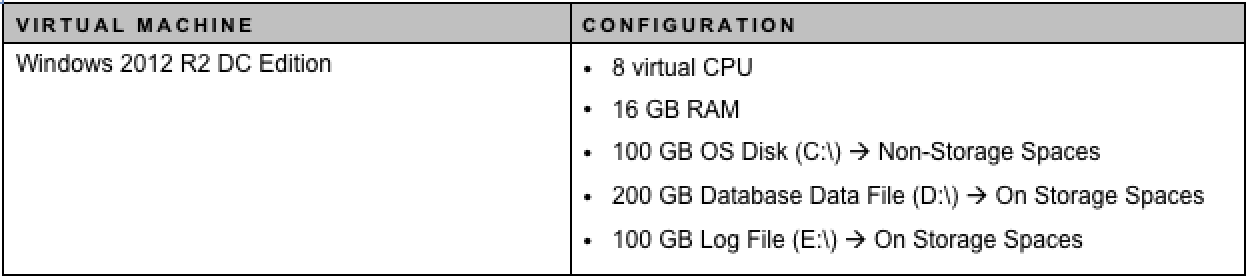

To keep the tests simple, we created two storage pools within one server, one all-SSD and the other all-HDD, both using the “simple spaces” option. The Simple Spaces option stripes data across a set of pooled disks and is not resilient to any disk failures. It is suitable for high-performance workloads where resiliency is either not necessary or is provided by the application.
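To make the striping idea concrete, here is a minimal sketch of how a striped layout maps a logical byte offset onto one of several disks in round-robin fashion. It illustrates generic striping only. The function names are hypothetical, the 256 KB interleave is an assumed value, and the real Storage Spaces allocator is more involved than this.

```python
from typing import List, Tuple

DEFAULT_INTERLEAVE = 256 * 1024  # bytes per stripe unit (assumed value)


def locate_stripe(offset: int, num_columns: int,
                  interleave: int = DEFAULT_INTERLEAVE) -> Tuple[int, int]:
    """Map a logical byte offset to (column index, byte offset on that column)."""
    if offset < 0 or num_columns < 1 or interleave < 1:
        raise ValueError("offset must be >= 0; num_columns and interleave >= 1")
    stripe_unit = offset // interleave   # which interleave-sized chunk this is
    column = stripe_unit % num_columns   # chunks rotate across the columns
    row = stripe_unit // num_columns     # complete stripes written before it
    return column, row * interleave + offset % interleave


def chunks_per_column(total_bytes: int, num_columns: int,
                      interleave: int = DEFAULT_INTERLEAVE) -> List[int]:
    """Count how many stripe units of a sequential write land on each column."""
    counts = [0] * num_columns
    for offset in range(0, total_bytes, interleave):
        column, _ = locate_stripe(offset, num_columns, interleave)
        counts[column] += 1
    return counts
```

Because consecutive chunks land on different disks, a large read or write is served by all disks in parallel. That is where the performance of a simple space comes from, and it is also why losing any one disk loses part of every large file.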

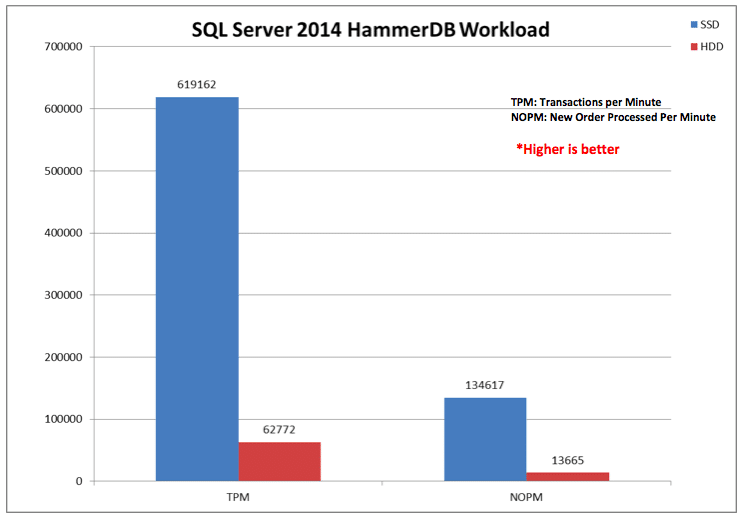

We created a single Hyper-V VM on this Storage Spaces volume and installed SQL Server 2014 inside the VM. We created a single 200 GB database file in the VM and used the HammerDB tool to drive its TPC-C-like workload profile against the SQL Server default instance. We ran the test for two hours and captured the TPM (transactions per minute) and NOPM (new orders per minute) figures reported by HammerDB at the end of the test.

The following table describes the system hardware, VM configurations and software used in the testing:

Testing Results

The results of the two-hour HammerDB test are shown below:

As you can see, the TPM and NOPM numbers are 10X higher when flash is used in the Storage Spaces solution. In other words, the SSD pool delivered 10X the performance of the HDD pool out of the box. That is a large gain for such a small test, and it suggests that the benefits in an actual deployment could be substantial.
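The “10X” figure is simply the ratio of the SSD-pool result to the HDD-pool result for the same metric. A tiny helper makes the arithmetic explicit. The function name is hypothetical, and the values you pass in would be the TPM or NOPM figures reported by HammerDB for each pool.

```python
def speedup(ssd_value: float, hdd_value: float) -> float:
    """Return how many times higher the SSD result is than the HDD result."""
    if hdd_value <= 0:
        raise ValueError("hdd_value must be positive")
    return ssd_value / hdd_value
```

Applying it to both TPM and NOPM is a useful sanity check, since the two metrics should scale by a similar factor for the same workload.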

Conclusion

There are plenty of great materials, blogs, and papers that cover Storage Spaces and Scale-Out File Server (SOFS) in great detail. It is another strong software-defined storage option and a step toward a hyper-converged solution.

Although I used an apples-to-apples configuration for HDD and SSD in this test, my past experience with other solutions is that similar application performance can be achieved with fewer flash drives. Moreover, as the cost of flash comes down, simply replacing magnetic media with flash on otherwise similar hardware should increase the value of an SDS solution many times over, without compromising performance.

SDS is a great approach to addressing performance, availability, and data services needs. But it’s important to realize that the underlying storage hardware contributes significantly to application performance. Without flash, SDS cannot fully deliver the architecture’s performance benefits.

I will continue my research with other Storage Spaces options such as “Mirror” and “Parity” as well as other areas and will share my findings on this blog. If you have any questions, you can reach me at biswapati.bhattacharjee@sandiskoneblog.wpengine.com