As NVMe standards, technology and adoption evolve, we continue to update this article and expand this series. Since we last updated it in 2018, there have been a few developments. Let’s get started.

NVMe™ (Non-Volatile Memory Express) is a new protocol for accessing high-speed storage media that brings many advantages compared to legacy protocols. But what is NVMe and why is it important for data-driven businesses?

As businesses contend with the perpetual growth of data, they need to rethink how data is captured, preserved, accessed and transformed. Performance, economics and endurance of data at scale are paramount. NVMe is having a great impact on businesses and what they can do with data, particularly Fast Data for real-time analytics and emerging technologies.

In this blog post I’ll explain what NVMe is and take a deep technical dive into how the storage architecture works. Upcoming blogs will cover the features and benefits it brings businesses, the use cases where it’s being deployed today, and how customers take advantage of Western Digital’s NVMe SSDs, platforms and fully featured flash storage systems for everything from IoT Edge applications to personal gaming.

My work has been associated with data storage protocols, in one way or another, for more than a decade. I have worked on enterprise PCIe SSD product management and long-term storage technology strategy, watching the evolution of storage devices up close. I am incredibly excited about the transformation NVMe is bringing to data centers, and the unique capability of Western Digital to deliver innovation up and down the stack. NVMe is opening a new world of possibilities by letting you do more with data! Here’s why:

The Evolution of NVMe

The first flash-based SSDs leveraged legacy SATA/SAS physical interfaces, protocols, and form factors to minimize changes in existing hard drive (HDD)-based enterprise server/storage systems. However, none of these interfaces and protocols were designed for high-speed storage media (i.e., NAND and/or persistent memory). Given its interface speed, the performance of the new storage media, and its proximity to the CPU, PCI Express (PCIe) was the next logical storage interface.

PCIe slots connect directly to the CPU, providing memory-like access, and can run a very efficient software stack. However, early PCIe interface SSDs had neither industry standards nor enterprise features. PCIe SSDs relied on proprietary firmware, which was particularly challenging for system scaling for various reasons, including: a) running and maintaining device firmware, b) firmware/device incompatibilities with different system software, c) not always making the best use of available lanes and CPU proximity, and d) a lack of value-add features for enterprise workloads. The NVMe specifications emerged primarily because of these challenges.

What is NVMe?

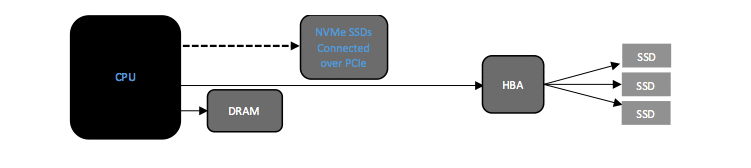

NVMe is a high-performance, NUMA (Non-Uniform Memory Access) optimized, and highly scalable storage protocol that connects the host to the memory subsystem. The protocol is relatively new, feature-rich, and designed from the ground up for non-volatile memory media (NAND and persistent memory) directly connected to the CPU via the PCIe interface (see diagram #1). The protocol is built on high-speed PCIe lanes: a PCIe Gen 3.0 link can offer more than twice the transfer speed of a SATA interface.
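To put the SATA comparison in numbers, here is a back-of-the-envelope sketch, assuming PCIe Gen 3.0 signals at 8 GT/s per lane with 128b/130b encoding and SATA III runs at 6 Gb/s with 8b/10b encoding. It computes line rates after encoding only and ignores packet and protocol overhead, so real-world throughput will be somewhat lower. The function names are illustrative.

```python
# Back-of-the-envelope link bandwidth comparison (per direction).
# Assumption: line rate after encoding only; packet/protocol overhead ignored.

PCIE_GEN3_GT_PER_S = 8e9          # 8 GT/s per lane
PCIE_GEN3_ENCODING = 128 / 130    # 128b/130b encoding efficiency
SATA3_BIT_PER_S = 6e9             # 6 Gb/s line rate
SATA3_ENCODING = 8 / 10           # 8b/10b encoding efficiency


def pcie_gen3_mb_per_s(lanes: int) -> float:
    """Usable bandwidth in MB/s for a PCIe Gen 3.0 link of the given width."""
    return lanes * PCIE_GEN3_GT_PER_S * PCIE_GEN3_ENCODING / 8 / 1e6


def sata3_mb_per_s() -> float:
    """Usable bandwidth in MB/s for a SATA III link."""
    return SATA3_BIT_PER_S * SATA3_ENCODING / 8 / 1e6


if __name__ == "__main__":
    sata = sata3_mb_per_s()
    for lanes in (1, 2, 4):
        pcie = pcie_gen3_mb_per_s(lanes)
        print(f"PCIe Gen3 x{lanes}: {pcie:7.1f} MB/s ({pcie / sata:.2f}x SATA III)")
```

Under these assumptions a single Gen 3.0 lane works out to about 985 MB/s against 600 MB/s for SATA III (roughly 1.6x), so the "more than twice" figure applies to links of two or more lanes; the x4 links common on NVMe SSDs come to roughly 3.9 GB/s, about 6.6x.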

The NVMe Value Proposition

The NVMe protocol capitalizes on parallel, low-latency data paths to the underlying media, similar to high-performance processor architectures. This offers significantly higher performance and lower latencies than the legacy SAS and SATA protocols. This not only accelerates existing applications that require high performance, but it also enables new applications and capabilities for real-time workload processing in the data center and at the Edge.

Conventional protocols consume many CPU cycles to make data available to applications, and these wasted compute cycles cost businesses real money. IT infrastructure budgets are not growing at the pace of data, and IT teams are under tremendous pressure to maximize returns on infrastructure, in both storage and compute. Because NVMe can handle rigorous application workloads with a smaller infrastructure footprint, organizations can reduce total cost of ownership and accelerate top-line business growth.

NVMe Architecture – Understanding I/O Queues

Let’s take a deeper dive into NVMe architecture and how it achieves high performance and low latency. NVMe can support multiple I/O queues, up to 64K, with each queue having up to 64K entries. Legacy SAS and SATA support only a single queue, with 254 and 32 entries respectively. The NVMe host software can create queues, up to the maximum allowed by the NVMe controller, according to the system configuration and expected workload. NVMe supports scatter/gather I/O, minimizing CPU overhead on data transfers, and even provides the ability to change queue priorities based on workload requirements.
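Each NVMe queue is a fixed-size circular buffer tracked by a head index (advanced by the consumer) and a tail index (advanced by the producer). The sketch below is a hypothetical, minimal model of one such queue; the class and method names are illustrative, not part of any driver API. It follows the NVMe convention that a queue is full when advancing the tail would make it equal the head, so a queue of N entries holds at most N - 1 outstanding commands.

```python
from typing import Any, List, Optional


class RingQueue:
    """Hypothetical NVMe-style queue: a circular buffer with head and tail indices.

    The producer advances `tail`, the consumer advances `head`, and one slot
    always stays empty so that a full queue can be told apart from an empty one.
    """

    def __init__(self, size: int) -> None:
        if size < 2:
            raise ValueError("a queue needs at least 2 entries")
        self.size = size
        self.head = 0
        self.tail = 0
        self.entries: List[Optional[Any]] = [None] * size

    def is_empty(self) -> bool:
        return self.head == self.tail

    def is_full(self) -> bool:
        # Full when advancing the tail would make it equal the head.
        return (self.tail + 1) % self.size == self.head

    def outstanding(self) -> int:
        # Entries written by the producer but not yet consumed.
        return (self.tail - self.head) % self.size

    def push(self, entry: Any) -> None:
        if self.is_full():
            raise OverflowError("queue is full")
        self.entries[self.tail] = entry
        self.tail = (self.tail + 1) % self.size

    def pop(self) -> Any:
        if self.is_empty():
            raise IndexError("queue is empty")
        entry = self.entries[self.head]
        self.entries[self.head] = None
        self.head = (self.head + 1) % self.size
        return entry


if __name__ == "__main__":
    q = RingQueue(size=4)
    for cmd in ("read", "write", "flush"):
        q.push(cmd)
    print(q.is_full(), q.outstanding())  # True 3
```

In this model a 64K-entry queue holds up to 65,535 outstanding commands, and the host can create many such queues, compared with a single 32-entry queue on SATA.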

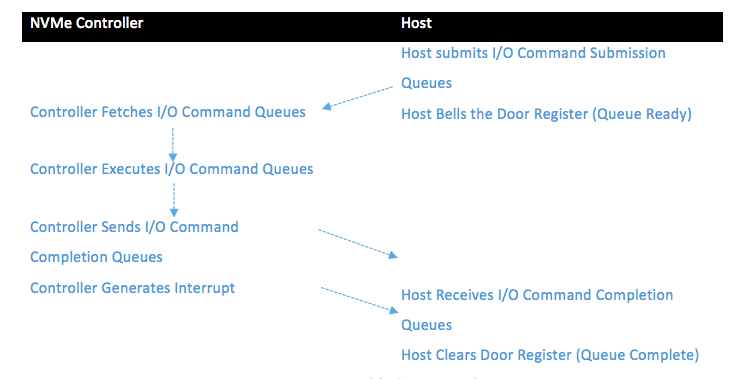

The picture above (diagram #2) is a very simplified view of the communication between the host and the NVMe controller. This architecture allows applications to start, execute, and finish multiple I/O requests simultaneously and use the underlying media in the most efficient way to maximize speed and minimize latencies.

How Do NVMe Commands Work?

Here is how it works: the host writes I/O commands into a submission queue and then writes the queue’s doorbell register (the I/O Commands Ready signal). The NVMe controller fetches the commands from the submission queue, executes them, and posts entries to the corresponding completion queue, followed by an interrupt to the host. The host processes the completion entries and updates the completion queue’s doorbell register (the I/O Commands Completion signal). See diagram #2. This translates into significantly lower overhead than the SAS and SATA protocols.
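The submit, doorbell, complete, and acknowledge sequence can be modeled in a few dozen lines. This is a simplified, single-threaded sketch, assuming one submission queue (SQ) and one completion queue (CQ) of equal depth, with the controller reacting synchronously to doorbell writes. It also models the phase tag, a bit in each completion entry that the controller inverts every time it wraps around the CQ, which lets the host tell new completions from stale ones. All class and method names are hypothetical; a real driver writes memory-mapped doorbell registers, and the controller fetches commands from host memory by DMA.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Command:
    cid: int     # command identifier chosen by the host
    opcode: str  # e.g. "read" or "write" (illustrative only)


@dataclass
class Completion:
    cid: int      # which command finished
    sq_head: int  # controller's current submission queue head
    status: int   # 0 means success in this model
    phase: int    # phase tag, inverted each time the CQ wraps around


class Controller:
    """Toy controller: fetches from the SQ and posts results to the CQ."""

    def __init__(self, sq: List[Optional[Command]], cq: List[Optional[Completion]]) -> None:
        self.sq, self.cq, self.depth = sq, cq, len(sq)
        self.sq_head = 0
        self.sq_tail_db = 0   # last value written to the SQ tail doorbell
        self.cq_tail = 0
        self.cq_head_db = 0   # last value written to the CQ head doorbell
        self.phase = 1        # first pass through the CQ uses phase tag 1
        self.interrupt_pending = False

    def write_sq_tail_doorbell(self, tail: int) -> None:
        self.sq_tail_db = tail   # "I/O commands ready" signal
        self._process()

    def write_cq_head_doorbell(self, head: int) -> None:
        self.cq_head_db = head   # "completions consumed" signal
        self._process()          # resume if the CQ had been full

    def _process(self) -> None:
        posted = False
        while self.sq_head != self.sq_tail_db:
            if (self.cq_tail + 1) % self.depth == self.cq_head_db:
                break            # CQ full: wait for the host to consume entries
            cmd = self.sq[self.sq_head]
            self.sq_head = (self.sq_head + 1) % self.depth
            # "Execute" the command (a no-op here) and post its completion.
            self.cq[self.cq_tail] = Completion(cmd.cid, self.sq_head, 0, self.phase)
            self.cq_tail = (self.cq_tail + 1) % self.depth
            if self.cq_tail == 0:
                self.phase ^= 1  # invert the phase tag on wrap-around
            posted = True
        if posted:
            self.interrupt_pending = True  # stand-in for an interrupt to the host


class Host:
    def __init__(self, depth: int) -> None:
        self.depth = depth
        self.sq: List[Optional[Command]] = [None] * depth
        self.cq: List[Optional[Completion]] = [None] * depth
        self.sq_tail = 0
        self.sq_head = 0          # learned from completion entries
        self.cq_head = 0
        self.expected_phase = 1
        self.ctrl = Controller(self.sq, self.cq)

    def submit(self, cmds: List[Command]) -> None:
        for cmd in cmds:
            if (self.sq_tail + 1) % self.depth == self.sq_head:
                raise OverflowError("submission queue is full")
            self.sq[self.sq_tail] = cmd
            self.sq_tail = (self.sq_tail + 1) % self.depth
        self.ctrl.write_sq_tail_doorbell(self.sq_tail)  # one doorbell per batch

    def reap(self) -> List[Completion]:
        done: List[Completion] = []
        while True:
            entry = self.cq[self.cq_head]
            if entry is None or entry.phase != self.expected_phase:
                break             # slot holds no new completion
            done.append(entry)
            self.sq_head = entry.sq_head
            self.cq_head = (self.cq_head + 1) % self.depth
            if self.cq_head == 0:
                self.expected_phase ^= 1
        self.ctrl.interrupt_pending = False
        if done:
            self.ctrl.write_cq_head_doorbell(self.cq_head)
        return done
```

For example, `h = Host(depth=4)` followed by `h.submit([Command(0, "read"), Command(1, "write")])` leaves an interrupt pending, and `h.reap()` then returns both completions in order and writes the new CQ head back through the doorbell. Note that a whole batch of commands costs a single SQ doorbell write.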

Why NVMe Gets the Most Performance from Multicore Processors

As I mentioned above, NVMe is a NUMA-optimized protocol. This allows multiple CPU cores to share the I/O workload: each core can own its own queues, and the protocol defines queue priorities, arbitration mechanisms, and command atomicity. As such, NVMe SSDs can scatter/gather commands and process them out of order to offer far higher IOPS and lower data latencies.
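To illustrate the arbitration side, here is a minimal sketch of round-robin arbitration with an arbitration burst, in which the controller fetches up to `burst` commands from one submission queue before moving on to the next. It assumes each CPU core submits into its own queue (a common driver design that avoids locking between cores). The function name and queue IDs are hypothetical, and the weighted round robin and urgent priority classes that NVMe also defines are not modeled.

```python
from collections import deque
from typing import Deque, Dict, List


def round_robin_arbitrate(queues: Dict[int, Deque[str]], burst: int) -> List[str]:
    """Return the order in which a simplified round-robin arbiter fetches commands.

    `queues` maps a hypothetical per-core queue ID to its pending commands and
    is drained in place. Each turn, up to `burst` commands are taken from one
    queue before the arbiter moves to the next queue.
    """
    if burst < 1:
        raise ValueError("burst must be at least 1")
    order: List[str] = []
    qids = sorted(queues)
    while any(queues[q] for q in qids):
        for q in qids:
            for _ in range(burst):
                if not queues[q]:
                    break
                order.append(queues[q].popleft())
    return order


if __name__ == "__main__":
    per_core = {
        0: deque(["core0-a", "core0-b", "core0-c"]),
        1: deque(["core1-a"]),
        2: deque(["core2-a", "core2-b"]),
    }
    print(round_robin_arbitrate(per_core, burst=2))
    # ['core0-a', 'core0-b', 'core1-a', 'core2-a', 'core2-b', 'core0-c']
```

Because every core has its own queue, cores never contend for a shared submission lock, and the burst size bounds how long one busy queue can hold the controller before the others get a turn.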

NVMe Form Factor and Standards

The NVMe specification is a collection of standards managed by a consortium, which is responsible for its development. It is currently the industry standard for PCIe solid state drives in all form factors, including the standard 2.5” U.2 form factor, internally mounted M.2, Add-In Card (AIC), and various EDSFF form factors.

There are many interesting developments in the features being added to the standard, such as multiple queues, combining I/Os, defining ownership and prioritization, multipathing and virtualization of I/Os, capturing asynchronous device updates, and many other enterprise features that did not exist before. My next blog goes into depth about these features and how they are opening up new possibilities for data-driven businesses.

We’re seeing the standard used in more use cases. One example is Zoned Storage and ZNS SSDs. NVMe Zoned Namespace (ZNS) is a technical proposal under consideration by the NVM Express organization. It was developed to address data management at massive scale in large infrastructure deployments by moving intelligent data placement from the drive to the host. To do so, it divides the logical block address (LBA) space of a namespace into zones that must be written sequentially and must be explicitly reset before they can be rewritten. The specification introduces a new type of NVMe drive that provides several benefits over traditional NVMe SSDs, such as:

- Higher performance through write amplification reduction

- Higher capacities through lower over-provisioning

- Lower costs due to reduced SSD controller DRAM footprint

- Improved latencies
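The zone rules described above can be captured in a small model. This is a hypothetical sketch, not the ZNS command set: it assumes each zone’s writable capacity equals its size and skips the zone state machine (empty, open, closed, full, and so on) that the specification defines. It shows the behaviors that matter here: regular writes must land at the zone’s write pointer, a zone must be reset before it can be rewritten, and an append-style write (modeled loosely on ZNS Zone Append) lets the device choose the location and report it back. The class and function names are illustrative.

```python
from typing import List


class Zone:
    """Hypothetical model of one zone: LBAs [start, start + size) written in order."""

    def __init__(self, start: int, size: int) -> None:
        self.start = start
        self.size = size
        self.wp = start  # write pointer: the next LBA that may be written

    @property
    def is_full(self) -> bool:
        return self.wp == self.start + self.size

    def write(self, lba: int, nblocks: int) -> None:
        # A regular write must start exactly at the write pointer.
        if lba != self.wp:
            raise ValueError(f"non-sequential write at LBA {lba}; write pointer is {self.wp}")
        self._advance(nblocks)

    def append(self, nblocks: int) -> int:
        # Append-style write: the device chooses the LBA and returns it.
        lba = self.wp
        self._advance(nblocks)
        return lba

    def reset(self) -> None:
        # Rewinding the write pointer is the only way to rewrite the zone.
        self.wp = self.start

    def _advance(self, nblocks: int) -> None:
        if nblocks < 1 or self.wp + nblocks > self.start + self.size:
            raise ValueError("write does not fit in the remaining zone space")
        self.wp += nblocks


def make_zones(namespace_blocks: int, zone_size: int) -> List[Zone]:
    """Divide a namespace's LBA space into equally sized zones."""
    if zone_size < 1 or namespace_blocks % zone_size:
        raise ValueError("namespace size must be a multiple of the zone size")
    return [Zone(start, zone_size) for start in range(0, namespace_blocks, zone_size)]
```

For example, `make_zones(1024, 256)` yields four zones; in the second zone a write at LBA 256 succeeds, but a following write at LBA 300 is rejected because the write pointer has moved to 356. Since the drive never has to relocate data within a zone, it needs less over-provisioning and mapping DRAM, which is where the benefits listed above come from.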

Another interesting use case is the SD™ and microSD™ Express card, which marries the SD and microSD card formats with the PCIe and NVMe interfaces. This is an example of the capabilities of the next generation of high-performance mobile computing.

Lastly, the NVMe protocol is not limited to connecting flash drives; it can also be used as a networking protocol, known as NVMe over Fabrics (NVMe-oF). This networking protocol enables a high-performance storage networking fabric with a common framework for a variety of transports, such as RDMA, Fibre Channel, and TCP.

Why is NVMe Important for your Business?

Enterprise systems are generally data-starved. The exponential rise in data and the demands of new applications can bog down SSDs. Even high-performance SSDs connected through legacy storage protocols can experience lower performance, higher latencies, and poor quality of service when confronted with some of the new challenges of Fast Data. NVMe’s unique features help avoid bottlenecks in everything from traditional scale-up database applications to emerging Edge computing architectures, and let systems scale to meet new data demands.

Designed for high-performance, non-volatile storage media, NVMe is the only protocol that stands out in highly demanding, compute-intensive enterprise, cloud and edge data ecosystems.

I hope this blog has helped you understand what NVMe is, and why it’s so important. You can continue reading my next blog that walks through some interesting NVMe features for edge and cloud data centers. Or, see our range of NVMe SSDs with low latency and maximum throughput.