Last year, scientists at the U.S. National Institute of Standards and Technology (NIST) Hardware for AI program, their University of Maryland colleagues, and collaborators from Western Digital built a neural network that aced a wine-tasting test. A better-than-human sommelier wasn’t the project’s goal, though; its goal was to explore new ways of addressing AI’s power consumption challenges.

A thirst for energy

AI is having a moment, leaving the turf of data science and dazzling the world with large language models (LLMs) like ChatGPT (and its many companions) and AI image generators. But it’s not all rosy in the generative-AI frenzy. Aside from hallucinations, ethical concerns, and serious risks as AI becomes more sophisticated and autonomous, AI also has a power problem.

It’s unclear exactly how much energy is needed to run the world’s AI models. One data scientist who tried to tackle the question estimated that ChatGPT’s electricity consumption was between 1.1 million and 23 million kWh in January 2023 alone. That’s a wide range, but it’s safe to say AI requires an immense amount of energy. Incorporating large language models into search engines could mean up to a fivefold increase in computing power. Some even warn that machine learning is on track to consume all the energy being supplied.

Brian Hoskins, a physicist in NIST’s Alternative Computing Group and advisor in the CHIPS R&D Office helping the U.S. implement the CHIPS and Science Act, explained that one of the challenges is simply that, so far, the larger deep neural nets grow, the better they perform.

“If you just keep throwing more and more compute power and resources into these networks and scale their parameters, they just keep doing better,” said Hoskins. “But we’re burning up more and more energy. And if you ask the question of how big can we make these networks? How many resources can we invest in them? You realize we really start to run into the physical limits.”

The von Neumann bottleneck

Hoskins and his colleague Jabez McClelland, a NIST physicist and leader of the Alternative Computing Group, believe it’s time to rethink computing architecture. “You know, we’ve been doing computers in one way ever since von Neumann came up with this model,” said McClelland.

McClelland is referring to John von Neumann, the prodigy who, among many other contributions, helped pioneer the modern computer. Almost all modern computing has relied on the von Neumann architecture, but McClelland explained that neural nets expose its drawbacks.

“In this [von Neumann] architecture, you have memory over here, and you have the processor over there, and for every computation, you have to send the data between them,” he said. “That involves driving a current through [lots of wires]. The problem is that every time you send a current, you’re expending energy.”

A large language model like GPT can have hundreds of billions or even trillions of parameters. Information is constantly cycled back and forth, moving through fleets of GPUs and consuming energy with every step.
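To get a feel for the scale of that traffic, here is a rough, hypothetical estimate. It assumes a 175-billion-parameter model (one size in the “hundreds of billions” range) stored at 2 bytes per parameter, with every weight fetched from memory once per generated token. Real systems batch requests and cache data, so treat this as an order-of-magnitude illustration, not a measurement of any particular model.

```python
# Back-of-the-envelope sketch (hypothetical numbers): bytes of weights that must
# travel from memory to the processor to generate one token, assuming each
# weight is read once per token and nothing stays cached near the processor.

def weight_bytes_per_token(num_parameters: int, bytes_per_parameter: int) -> int:
    """Bytes of weights streamed across the memory/processor boundary per token."""
    return num_parameters * bytes_per_parameter

# Assumed example: 175 billion parameters stored in a 16-bit (2-byte) format.
params = 175_000_000_000
moved = weight_bytes_per_token(params, bytes_per_parameter=2)
print(f"{moved / 1e9:.0f} GB of weights moved per token")  # 350 GB of weights moved per token
```

Every one of those bytes has to be driven through wires between memory and processor, which is exactly the energy cost McClelland describes.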

“We’re looking for ways to reimagine computing so that you can connect things in different ways,” said McClelland.

Their inspiration? The human brain. “Our brains are doing all this processing, and they can do so much more than a computer does and with such small amounts of power,” he said.

McClelland explained that one of the reasons the brain is so efficient is that, unlike the von Neumann architecture, processing and memory happen in the same place. Now, Hoskins and McClelland needed to find a technology that could do that, too.

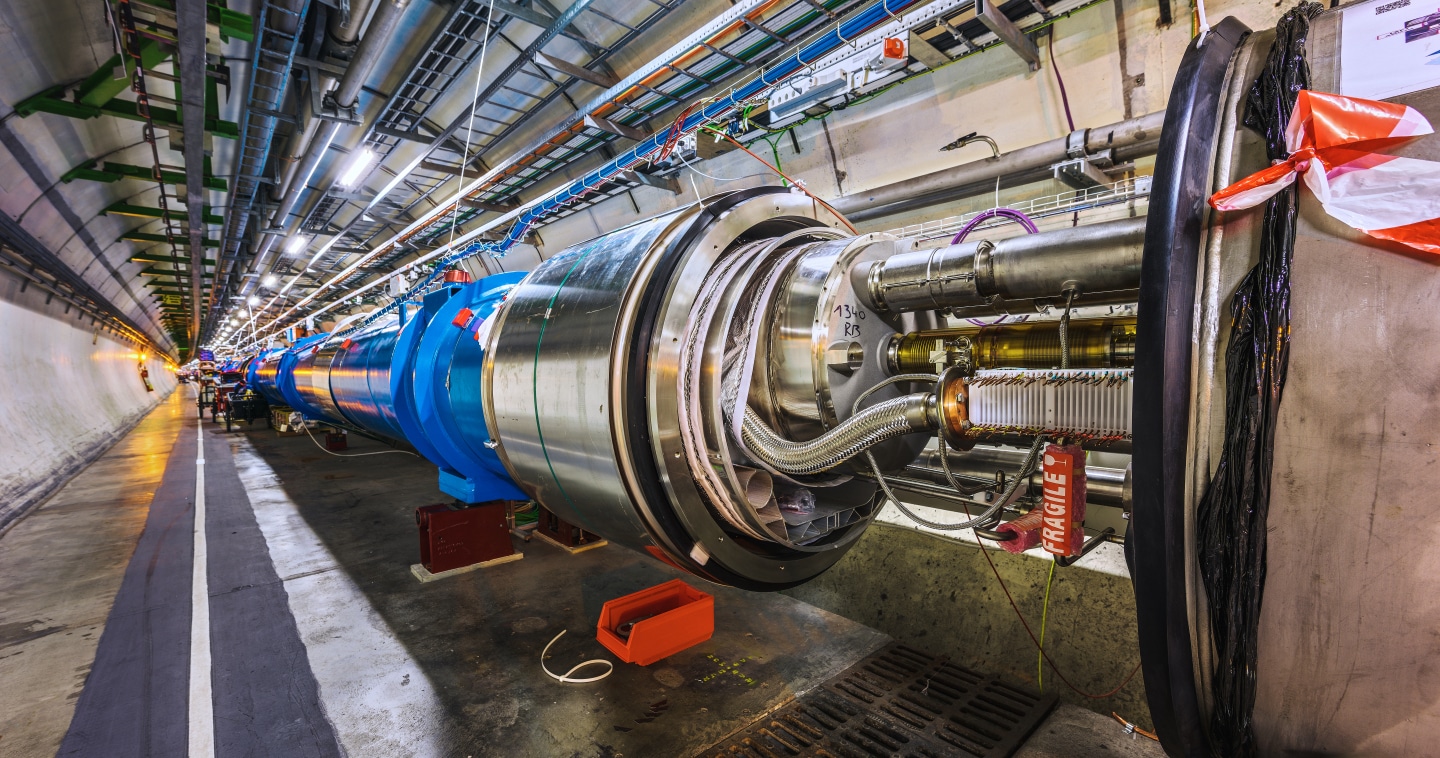

Hello, Magnetic Tunnel Junction

Hoskins and McClelland arrived at the idea of building a neural net array from Magnetic Tunnel Junction (MTJ) devices through NIST’s research collaborations with Western Digital. The company has used MTJ technology in hard drive read heads for many years, and it’s advancing the technology in Magnetic Random Access Memory (MRAM), one of several next-generation memory technologies.

One of the experts involved in the project is Patrick Braganca, a technologist in research and development at Western Digital whose career has focused on advancing and fabricating nanomagnetic devices, particularly MTJs.

“MTJs take advantage of a quantum mechanics property called spin, which you can think of like a North Pole and a South Pole of an electron,” he explained.

MTJs are built from ferromagnetic materials, in which the spins of atoms’ unpaired electrons align with those of neighboring electrons. An MTJ sandwiches a thin insulating layer between two ferromagnetic layers. One layer has a fixed, predetermined spin direction, while the other can be flipped by applying a magnetic field.

Then comes the wonderfully weird quantum mechanics part: when a current is sent through the sandwich, electrons can pass through the insulating barrier, much as if a baseball passed through a brick wall. This phenomenon is called tunneling (hence the T in MTJ). Combined with the properties of the ferromagnetic layers, it means more electrons flow through the sandwich when the spins of the two layers are aligned than when they are anti-aligned, so the aligned state carries a larger current.

“MTJs are like a faucet that you can open and close with a magnetic field,” said Braganca. “The resistance effectively tells you how much current gets through [a lot or a little], and we can translate that to a 1 or a 0.”
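The “faucet” can be sketched in a few lines: two resistance states, a small read voltage, Ohm’s law to get the current, and a threshold to turn that current into a bit. The resistance values and read voltage below are hypothetical, and mapping the aligned (low-resistance) state to 1 is simply a convention chosen for this sketch. The tunnel magnetoresistance (TMR) ratio, the relative resistance change between the two states, measures how far the faucet opens.

```python
# Minimal sketch of reading an MTJ as a bit. All values are hypothetical and
# chosen only to illustrate the contrast between the two magnetic states.

R_PARALLEL = 2_000.0      # ohms: layers aligned, low resistance, large current
R_ANTIPARALLEL = 4_000.0  # ohms: layers anti-aligned, high resistance, small current
READ_VOLTAGE = 0.1        # volts: a small hypothetical read voltage

def read_current(resistance: float, voltage: float = READ_VOLTAGE) -> float:
    """Ohm's law: I = V / R."""
    return voltage / resistance

def read_bit(resistance: float) -> int:
    """Compare the current to a threshold halfway between the two states."""
    threshold = (read_current(R_PARALLEL) + read_current(R_ANTIPARALLEL)) / 2
    return 1 if read_current(resistance) > threshold else 0  # large current -> 1

# TMR ratio: how much the resistance changes between the two states.
tmr = (R_ANTIPARALLEL - R_PARALLEL) / R_PARALLEL
print(read_bit(R_PARALLEL), read_bit(R_ANTIPARALLEL), f"TMR = {tmr:.0%}")  # 1 0 TMR = 100%
```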

For Hoskins and the team, MTJs offered a balance between known computing processes and that X-factor they were looking for.

“Most digital computing is bitwise, meaning it works with individual ones and zeros,” said Hoskins. “Magnetic Tunnel Junctions plug very well into our existing understanding of how computers currently work. By using the very natural property of MTJ physics and Ohm’s Law, which multiplies a voltage by conductance and gives you a current, we’ve turned memory elements into multipliers; multipliers that can store the number they’re multiplying forever.”
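One common way to picture “memory elements as multipliers” is an idealized crossbar, sketched below under stated assumptions. Each device at row i and column j has a conductance G[i][j]; applying voltage V[i] to row i drives a current V[i] × G[i][j] through that device (Ohm’s law), and the currents meeting on column j add up (Kirchhoff’s current law). The result is a vector-matrix multiplication performed where the weights are stored. This sketch treats conductances as arbitrary positive numbers and ignores wire resistance and device variability; a real MTJ has only two conductance states, so how the team actually encodes weights is not something this code claims to reproduce.

```python
# Idealized crossbar sketch: the stored conductances are the "numbers being
# multiplied," and the column wires sum the products.

def crossbar_currents(voltages: list[float], conductances: list[list[float]]) -> list[float]:
    """Return column currents I[j] = sum over i of V[i] * G[i][j]."""
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for v, row in zip(voltages, conductances):
        for j, g in enumerate(row):
            currents[j] += v * g  # each device multiplies; the shared column wire sums
    return currents

# Hypothetical 2 x 2 example: voltages in volts, conductances in siemens.
currents = crossbar_currents([0.1, 0.2], [[1e-3, 2e-3], [3e-3, 4e-3]])
print(currents)  # approximately [7e-4, 1e-3] amps
```

No data shuttles between a separate memory and processor here: the physics of the array does the multiply-and-add in place.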

In other words, Hoskins and team saw a way for MTJs to merge computing and memory into one. But they needed to prove it actually worked.

The math of wine tasting

To test their mathematical theory, Hoskins and team developed 300 solutions and began experimenting with a 15 x 15 MTJ array, which limited the size of the neural network to 15 inputs or fewer.

“We decided to use a publicly available wine database,” said Hoskins. “Each wine had 13 attributes, such as acidity, alcohol concentration, color intensity, etc., and each was given a numerical value that we could train the system with.”

While connoisseurs may scoff at the idea of numerical wine tasting, our brains do something very similar. The synapses in our brains’ neural networks process a wine’s properties and weigh them to conclude whether it is lush, briary, complex, or just aggressively flamboyant.
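The “weighing” can be illustrated with a hypothetical single-layer classifier: each wine class gets one weight per attribute, a wine’s score for a class is the weighted sum of its 13 attributes, and the prediction is the class with the highest score. On an MTJ array, each weighted sum would correspond to one column current. The three classes follow the public UCI Wine dataset, which matches the article’s 178 wines and 13 attributes; the attribute values and the ±1 weights below are made up, and this is not the team’s trained network.

```python
# Hypothetical single-layer "palate," for illustration only.
NUM_ATTRIBUTES = 13    # acidity, alcohol concentration, color intensity, ...
MAX_ARRAY_INPUTS = 15  # a 15 x 15 array allows at most 15 inputs

def predict(attributes, weights):
    """Return the index of the class whose weighted sum of attributes is largest."""
    if len(attributes) > MAX_ARRAY_INPUTS:
        raise ValueError("too many inputs for a 15 x 15 array")
    # One weighted sum per class; on an MTJ array each sum would be a column current.
    scores = [sum(a * w for a, w in zip(attributes, class_weights))
              for class_weights in weights]
    return max(range(len(scores)), key=lambda k: scores[k])

# Made-up, normalized attribute values for one wine (only the first three nonzero).
wine = [0.9, 0.1, 0.4] + [0.0] * (NUM_ATTRIBUTES - 3)
# Made-up binary (+1/-1) weights for three hypothetical wine classes.
weights = [[1 if i % 3 == k else -1 for i in range(NUM_ATTRIBUTES)] for k in range(3)]
print(predict(wine, weights))  # 0
```

Binary weights are used here only because they map naturally onto the two MTJ states; they are an assumption of this sketch.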

The database the team leveraged included 178 wines. One hundred and forty-eight wines were used to train the AI “palate,” and on the 30 wines the AI hadn’t seen before, the system passed with 95.3% accuracy.
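The split is consistent: 148 training wines plus 30 test wines gives 178. One detail is worth noticing, though. A single pass over 30 test wines can only score a whole number of wines out of 30, so 95.3% is not a possible single-run score. It most plausibly reflects an average over several runs or devices; the article does not say which. The quick check below lists the nearest achievable single-run scores.

```python
# Which single-run accuracies are possible with 30 test wines?
from fractions import Fraction

TRAIN_WINES, TEST_WINES = 148, 30
assert TRAIN_WINES + TEST_WINES == 178  # matches the database size

possible = [Fraction(k, TEST_WINES) for k in range(TEST_WINES + 1)]
reported = Fraction(953, 1000)  # 95.3%
nearest = sorted(possible, key=lambda p: abs(p - reported))[:2]
print([f"{float(p):.1%}" for p in nearest])  # ['96.7%', '93.3%']
```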

“Even the best AI will never get you 100% results,” said Hoskins. “The fact that we got 95% was telling us, yeah, this is working. It’s a viable solution, with potential for scale and improvement.”

The experiments also proved something Hoskins is passionate about: the need for experimentation. “I like to say that thinking is the enemy of science. There’s no substitute for experiment because whenever we go in the lab, and we try and do something that’s never been done before, what we’re going to find is that our expectations are not met, that there are things that we don’t know about,” he said.

While building the array, the team ran into two effects they hadn’t anticipated: each nanodevice can vary somewhat from its neighbors, and the tiny wires connecting the devices have differing resistances.

“We solved some of this through mathematical models; but it also comes back to why we need the technical and engineering expertise of an organization like Western Digital to make it work and be successful,” said Hoskins.
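To see why device-to-device variability matters, here is a hypothetical simulation of a single crossbar column with 15 inputs (matching a 15 × 15 array). Each device’s conductance is scaled by a random factor with a 5% relative spread; that spread, the voltages, and the conductances are assumptions for illustration, not figures from the team, and wire resistance is not modeled. It does not reproduce the team’s mathematical models; it only shows that the same inputs no longer give the same output.

```python
# Hypothetical sketch: device-to-device variability perturbs a crossbar column's output.
import random

def column_current(voltages, nominal_g, spread, rng):
    """I = sum_i V[i] * G[i], with each G[i] scaled by a random factor ~ N(1, spread)."""
    return sum(v * g * rng.gauss(1.0, spread) for v, g in zip(voltages, nominal_g))

rng = random.Random(0)     # fixed seed so the sketch is repeatable
voltages = [0.1] * 15      # volts, one per input row
nominal_g = [1e-3] * 15    # hypothetical nominal conductances (siemens)

ideal = column_current(voltages, nominal_g, spread=0.0, rng=rng)
noisy = [column_current(voltages, nominal_g, spread=0.05, rng=rng) for _ in range(1000)]
mean = sum(noisy) / len(noisy)
std = (sum((x - mean) ** 2 for x in noisy) / len(noisy)) ** 0.5
print(f"ideal {ideal:.3e} A; noisy mean {mean:.3e} A, std {std:.1e} A")
```

Averaged over many trials the output stays close to the ideal value, but any single readout can drift by around a percent, enough to flip a close classification decision.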

What’s next?

Following the experiment’s success, the teams are now building an improved array with thousands of MTJ devices. It’s too early to share details, but one can expect an application more complex than a virtual wine steward.

Hoskins and McClelland see large MTJ arrays as a potential stepping stone to saving energy in large computational models. They also see MTJ arrays opening new computing possibilities at the edge: in tiny sensors, in battery-operated devices, and even in cars, where the less energy an electric vehicle spends on computing, the more miles it can travel.

As the tech industry contends with AI’s potential rewards and risks, curbing energy consumption will remain an existential matter. And in this age of AI, where technology permeates nearly every part of our lives, it’s interesting that scientists and technologists like Hoskins, McClelland, and Braganca are turning to the natural world for inspiration.

“A computer can play Go and even beat humans,” said McClelland. “But it will take a computer something like 100 kilowatts to do so while our brains do it for just 20 watts.”

“The brain is an obvious place to look for a better way to compute AI.”

—

What does brain-like computing look like? Learn more about neuromorphic computing in our upcoming articles.