The “Invisible” Technology Behind AI

You’d be hard pressed to find a topic that’s hotter in the world of technology than artificial intelligence, or AI. Virtually every company in the tech world (and many outside of it!) seems to be touting the addition of AI-based features to their new devices, applications, or services. Of course, it’s easy to understand why. AI promises to bring a new level of “smarts” to our devices, helping them better understand the context around them, provide new types of insights, and even automate some of the mundane tasks that we all must do on a regular basis.

At the same time, AI has also received a lot of attention because of the concerns that some people have raised about the potential downsides of the technology. Visions of autonomous machines run amok, a la science fiction movies, can’t help but give you a bit of the willies.

Technology Behind AI Bringing Data to Life

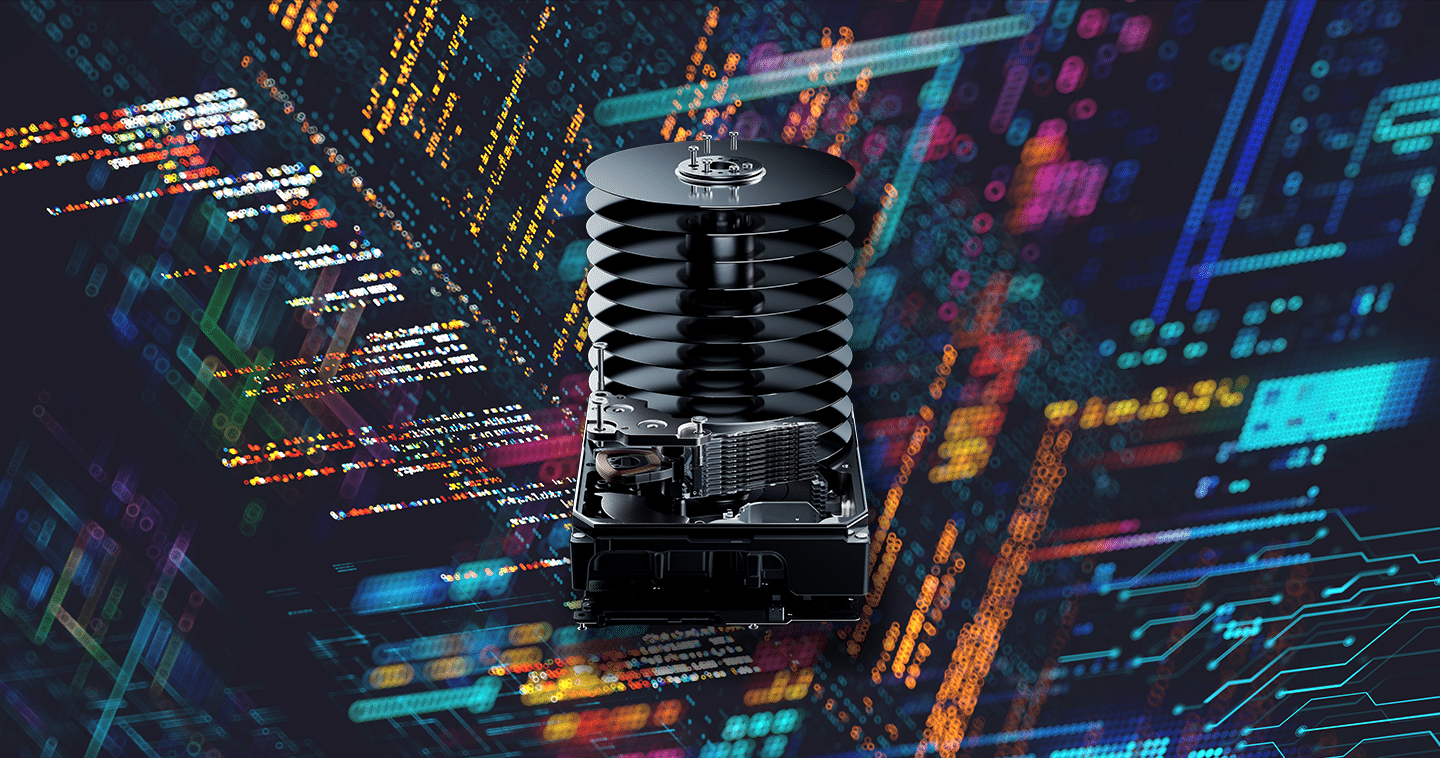

Regardless of where you sit on the spectrum of viewpoints towards AI, however, there’s no question that it’s a force to be reckoned with in the here and now. It’s also important to recognize that despite the seemingly “invisible” nature of the technology behind AI, it’s going to take a lot of storage, computing and hardware to bring data to life.

AI Demands Significant Resources for the Magic to Happen

From portable devices to edge computing nodes to cloud-based data centers, AI demands a tremendous amount of resources to allow its magic to happen. In fact, AI and the related area of machine learning (ML) are probably some of the most demanding applications now being run by consumers, businesses and their technology providers. Large distributed computing architectures are necessary to handle everything from the initial neural network model training that sits at the heart of most AI applications, through the inferencing tasks that trigger the actions suggested by the AI models. The training is almost exclusively now done in large cloud-based data centers, while the inferencing tasks (which detect the data or patterns that trigger an action) can run on devices, in the cloud, or on a combination of the two.
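To make the split between training and inferencing concrete, here is a minimal sketch in Python. It is a toy, not how production systems are built: the function names `train` and `infer`, the tiny data set, and the single-weight model are all assumptions made for illustration. The point is only that training loops over the whole data set many times, while inferencing is one cheap calculation with the finished model.

```python
# Hypothetical toy example: names, data, and model are illustrative only.

def train(samples, epochs=50, lr=0.1):
    """Training: repeated passes over the full data set to fit the weights.
    This is the expensive step that typically runs in a data center."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        for x, label in samples:
            pred = 1 if w * x + b > 0 else 0
            err = label - pred          # 0 when the guess was right
            w += lr * err * x           # nudge the weight toward the label
            b += lr * err
    return w, b


def infer(model, x):
    """Inferencing: a single cheap calculation using the trained model.
    This is the step that can run on a device or in the cloud."""
    w, b = model
    return 1 if w * x + b > 0 else 0


# Tiny made-up data set: negative inputs are class 0, positive are class 1.
samples = [(-2.0, 0), (-1.0, 0), (1.0, 1), (2.0, 1)]
model = train(samples)

if __name__ == "__main__":
    print(infer(model, 3.0), infer(model, -3.0))
```

Even in this toy, training touches every sample on every pass, while each call to `infer` does one multiplication and one comparison. Scale the data set and the model up by many orders of magnitude and the resource gap described above follows.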

Compute and Storage in the Data Center

Throughout all aspects of the AI model creation and usage process, there are tremendous amounts of computing power, enormous data sets, and, of course, significant amounts of storage to hold that data. In fact, having access to huge amounts of data and being able to analyze it is an essential part of how AI algorithms are built, maintained and updated. This is one of the key reasons why the machine learning/AI training process occurs in large data centers: these applications need access to the kinds of massive computing and storage that these facilities offer.

These days, however, it goes beyond the amount of storage and processing power these tasks require. For AI to be effective, the technology behind AI needs to enable real-time processes, which means that all the components of the infrastructure behind it need to act or react very quickly. For the storage elements, this makes a strong case for using fast flash-based storage for the critical tasks. In fact, the storage requirements for AI training can be so demanding for some applications that not even all types of flash storage can handle them; only the variants that are optimized for real-time analytics can.

Fast Data at the Edge

AI’s demands on storage and computing also extend beyond data centers. As mentioned earlier, much of the magical impact of AI occurs when a device senses a pattern of data (anything from an image, to a voice, to a video feed, to a set of data) and invokes a response that the trained AI model suggests is the appropriate action to take based on the input (inferencing). For example, a digital assistant running on a smartphone might be tracking real-time traffic updates that have an impact on when someone needs to leave to arrive at a meeting on time and, based on the information, send a notification to that effect. Or a smart speaker might detect the voice of a particular child in a given household and respond by playing back that child’s favorite music.

In these and millions of other potential cases, there are real-time data inputs coming into a device and the device’s local computing and storage are used to process that data and generate a reaction. At the moment, the reaction may be little more than sending along some data to the cloud, where a more sophisticated algorithm can process the results and send back the appropriate response. Over time, however, one of the key advancements in AI will be the ability to do more of the data processing necessary for inferencing on the local device. To achieve that will require even more computing and storage components on these endpoints that have the capability and speed necessary to meet AI applications’ demanding requirements.
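The device-first, cloud-when-needed pattern described above can be sketched in a few lines of Python. Everything here is a hypothetical stand-in: `local_model`, `cloud_model`, `respond`, the 0.9 confidence threshold, and the simple rules inside the two models are assumptions for illustration, and no real network call is made.

```python
# Hypothetical sketch: the "models" are simple rules standing in for
# trained networks, and the cloud call is simulated locally.

def local_model(reading):
    """Small on-device model: returns (label, confidence)."""
    if reading > 0.8:
        return "alert", 0.95
    if reading < 0.2:
        return "normal", 0.95
    return "unsure", 0.5    # ambiguous input the small model cannot settle


def cloud_model(reading):
    """Stand-in for a larger, more capable cloud-hosted model."""
    return "alert" if reading >= 0.5 else "normal"


def respond(reading, threshold=0.9):
    """Answer on the device when confident, otherwise defer to the cloud."""
    label, confidence = local_model(reading)
    if confidence >= threshold:
        return label, "device"
    return cloud_model(reading), "cloud"


if __name__ == "__main__":
    for reading in (0.9, 0.1, 0.6):
        print(reading, respond(reading))
```

As endpoints gain more computing and storage, the local model in a design like this can grow more capable, so fewer inputs fall below the threshold and need the round trip to the cloud.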

Looking ahead, there’s no question that AI is an extremely exciting new technology development that’s going to both extend the usefulness of our existing devices and bring about new kinds of devices and services that we can’t even conceive of yet. The technology behind AI brings data to life. By making our devices “smarter” about data, and more aware of the context or environment in which they find themselves, AI can bring a whole new level of capability that’s going to drive key technological innovations for decades to come.

Bob O’Donnell is the president and chief analyst of TECHnalysis Research, LLC, a market research firm that provides strategic consulting and market research services to the technology industry and professional financial community. You can follow him on Twitter @bobodtech.