Machine Learning is a concept that has existed for decades. Yet only recent advances in software, processing power, and massive, rich datasets have made it a reality. It’s no longer just an academic experiment but a fast-rising technology that every industry and business is looking to leverage. So how far along are we on the path to realization? Here is a look at seven top machine learning trends:

1. There’s No Going Back

Perhaps the most significant Machine Learning trend is that Machine Learning has moved from training models to applying them in the real world: using current datasets, accessing historical data, and even enriching them with external datasets. Machine Learning has matured, and production use cases are very real.[1]

2. We Are Often Stuck at Step #1 – Data Management

Everyone is eager to start training models, but many are finding out weeks or even months later that invalid data can propagate issues throughout the entire system.[2] Whether the result is outages or, ultimately, bad models, making Machine Learning work well requires a great deal of work on the initial preparation of data. Finding out where the data lives (likely on multiple storage systems, in diverse locations with varying characteristics), standardizing access, normalizing metadata, identifying dependencies, applying data validation techniques, and learning how to treat errors are the keys to making Machine Learning successful.
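To make the validation step more concrete, here is a minimal Python sketch that checks each incoming record against a small schema and sets failures aside instead of letting them flow into training. The schema, field names, and ranges are hypothetical and chosen only for illustration; production pipelines typically rely on a dedicated validation framework and on schemas derived from the data itself.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Tuple

# Hypothetical schema: field name -> (expected type, (min, max) bounds or None).
# A bound of None on either side means "unbounded" on that side.
SCHEMA = {
    "sensor_id": (str, None),
    "temperature_c": (float, (-50.0, 150.0)),
    "timestamp": (int, (0, None)),
}


@dataclass
class ValidationReport:
    valid: List[dict] = field(default_factory=list)
    # Each rejected entry is (record, list of error messages).
    rejected: List[Tuple[dict, List[str]]] = field(default_factory=list)


def validate_record(record: Dict[str, Any]) -> List[str]:
    """Return the problems found in one record; an empty list means it is valid."""
    errors: List[str] = []
    for name, (expected_type, bounds) in SCHEMA.items():
        value = record.get(name)
        if value is None:
            errors.append(f"missing field '{name}'")
            continue
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected_type):
            errors.append(f"'{name}' should be {expected_type.__name__}, got {type(value).__name__}")
            continue
        if bounds is not None:
            low, high = bounds
            if (low is not None and value < low) or (high is not None and value > high):
                errors.append(f"'{name}'={value} is outside {bounds}")
    return errors


def validate_batch(records: List[Dict[str, Any]]) -> ValidationReport:
    """Split a batch into valid records and rejected records with their reasons."""
    report = ValidationReport()
    for record in records:
        problems = validate_record(record)
        if problems:
            report.rejected.append((record, problems))
        else:
            report.valid.append(record)
    return report


# Usage with made-up sample records.
records = [
    {"sensor_id": "a1", "temperature_c": 21.5, "timestamp": 1541030400},
    {"sensor_id": "a2", "temperature_c": 999.0, "timestamp": 1541030460},
    {"sensor_id": "a3", "timestamp": 1541030520},
]
report = validate_batch(records)
print(len(report.valid), len(report.rejected))  # → 1 2
```

Keeping rejected records together with the reasons they failed is one simple way to treat errors deliberately: the rejects can be inspected, repaired, or counted over time to spot upstream problems before they reach a model.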

3. The World Looks Different Depending on Where You Are

If we look at the global picture, we see different countries at different points on their Machine Learning adoption journey, facing very different challenges. Europe started early and has moved beyond testing to predictive, real-world use cases. Yet its data privacy laws require much more governance, and more processes to cleanse the data. In the US, private companies are at the forefront of driving innovation, and we see commercial companies collecting data focused on profit-making areas and industries. Then there is China, whose government has set the goal of becoming “the world’s primary AI innovation center”.[3] Although China started its Machine Learning and AI journey much later, the benefit of being late to the game is that its infrastructure is new and well funded, and data can be consolidated from the start. It’s also a country awash with data and mobile commerce.[4]

4. Data Lineage Needs Version Control

In Machine Learning, every step is iterative. Data scientists spend so much time tuning their algorithms that it’s critical to be able to reproduce the best outcome. Source code version control systems make it easy to go back and look at source code, or to share it between team members. The test dataset you used, however, is often very hard to replicate. Datasets can grow or change rapidly, and what you access today may not be the same as what your colleague worked with yesterday. So how do we create lineage? Object storage, whether on premises or in the cloud, typically has a feature called versioning. Versioning keeps archived, timestamped versions of objects, any of which can be restored at any time. With its scalability, rich metadata, and versioning, object storage offers a more complete solution for the bulk of the data.
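The idea of pinning a model to the exact data it was trained on can be illustrated with a toy, in-memory model of a versioned object store, written in Python. This is not the API of any particular product: the class, its methods, and the random version IDs are assumptions made for illustration, although S3-compatible stores with versioning enabled similarly keep prior versions and return a version ID for each write. A training run records a manifest of (object key, version ID) pairs, which is enough to fetch the same dataset again later, even after it has changed.

```python
import time
import uuid
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class ObjectVersion:
    version_id: str   # random ID in this sketch; real object stores assign their own format
    timestamp: float  # seconds since the epoch when this version was written
    data: bytes


class VersionedStore:
    """Toy in-memory model of a versioned object store: a write never destroys older versions."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[ObjectVersion]] = {}

    def put(self, key: str, data: bytes) -> str:
        version = ObjectVersion(uuid.uuid4().hex, time.time(), data)
        self._versions.setdefault(key, []).append(version)
        return version.version_id

    def get(self, key: str, version_id: Optional[str] = None) -> bytes:
        history = self._versions[key]
        if version_id is None:
            return history[-1].data  # no version requested: return the latest
        for version in history:
            if version.version_id == version_id:
                return version.data
        raise KeyError(f"{key!r} has no version {version_id!r}")

    def versions(self, key: str) -> List[Tuple[str, float]]:
        """List (version_id, timestamp) pairs for a key, oldest first."""
        return [(v.version_id, v.timestamp) for v in self._versions[key]]


# Usage: pin the exact inputs of a training run in a (hypothetical) lineage manifest.
store = VersionedStore()
v1 = store.put("train/labels.csv", b"id,label\n1,cat\n")
manifest = {"train/labels.csv": v1}  # saved alongside the trained model
store.put("train/labels.csv", b"id,label\n1,dog\n")  # the dataset changes later

latest = store.get("train/labels.csv")
pinned = {key: store.get(key, vid) for key, vid in manifest.items()}
print(latest)                      # b'id,label\n1,dog\n'
print(pinned["train/labels.csv"])  # b'id,label\n1,cat\n'
```

The manifest plays the same role for data that a commit hash plays for source code: it is small, easy to share with colleagues, and sufficient to rebuild the exact training set.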

5. From Data Lakes to Data Hubs

We have to accept the fact that total data consolidation won’t work. Data has simply become too large and dispersed to move. Instead, organizations have data in multiple geographic locations, both on premises and in the cloud, on different systems and devices. Rather than trying to consolidate it all in one place, some organizations are creating a hub that draws on remote sources. As a result, software that provides governance, authentication services, and unified access to data is key to these advances.
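A data hub can be pictured as a catalog plus an access check sitting in front of data that stays where it is. The Python sketch below is a hypothetical illustration of that shape, not a description of any specific product: the `DataHub` class, its methods, and the dictionary-backed “sources” standing in for on-premises and cloud storage are all assumptions.

```python
from typing import Callable, Dict, Iterable, Set, Tuple

# A reader takes a path within one source and returns the bytes stored there.
Reader = Callable[[str], bytes]


class DataHub:
    """Minimal sketch of a data hub: one namespace over several sources, with an access check."""

    def __init__(self) -> None:
        self._sources: Dict[str, Reader] = {}
        self._catalog: Dict[str, Tuple[str, str]] = {}  # logical name -> (source, path)
        self._acl: Dict[str, Set[str]] = {}             # logical name -> users allowed to read

    def register_source(self, name: str, reader: Reader) -> None:
        self._sources[name] = reader

    def publish(self, logical_name: str, source: str, path: str,
                allowed_users: Iterable[str]) -> None:
        if source not in self._sources:
            raise ValueError(f"unknown source '{source}'")
        self._catalog[logical_name] = (source, path)
        self._acl[logical_name] = set(allowed_users)

    def read(self, user: str, logical_name: str) -> bytes:
        # Governance first: unknown names and unauthorized users are both refused.
        if user not in self._acl.get(logical_name, set()):
            raise PermissionError(f"{user} may not read {logical_name}")
        source, path = self._catalog[logical_name]
        # The data is not copied anywhere; the hub routes the request to the owning source.
        return self._sources[source](path)


# Usage: two made-up sources standing in for on-premises and cloud storage.
on_prem = {"/archive/sensors.csv": b"t,v\n0,1.5\n"}
cloud = {"bucket/clicks.json": b'[{"page": "home"}]'}

hub = DataHub()
hub.register_source("on_prem", on_prem.__getitem__)
hub.register_source("cloud", cloud.__getitem__)
hub.publish("sensors", "on_prem", "/archive/sensors.csv", allowed_users={"alice"})
hub.publish("clicks", "cloud", "bucket/clicks.json", allowed_users={"alice", "bob"})

print(hub.read("bob", "clicks"))  # b'[{"page": "home"}]'
```

In a real deployment the readers would be storage clients and the access lists would come from an authentication service, but the design choice is the same: requests move to the data, rather than the data moving into one central lake.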

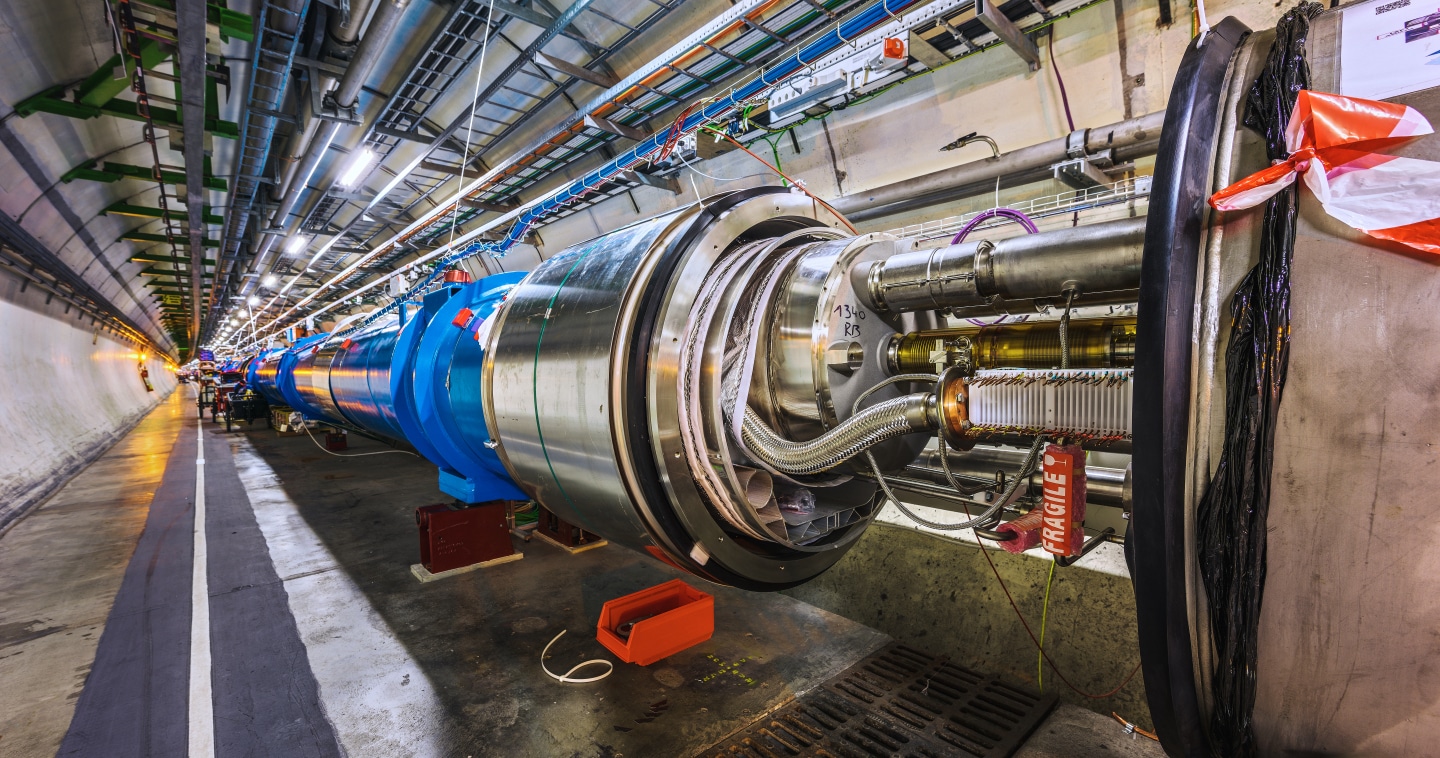

6. Computing and Memory Need to Scale Even Further, in New Ways

The rise of Machine Learning came about by overcoming old hardware limitations, and this trend will continue in new areas. Whether it’s the move towards multi-threaded GPUs, open source computing based on the RISC-V ISA,[5] or new solutions that augment DRAM, such as memory extension drives, we’ll see new ways to train models and use data faster.

7. More Data is Needed

With more data, and more diverse data, Machine Learning can deliver better results. We expect to see many organizations expanding their datasets by purchasing data in new ways. This will allow more businesses to use broader sets of data, and to monetize their own IoT data. Based on initial projections, revenue from blockchain-enabled IoT data marketplaces could reach $4.4 billion by 2030. The market value of the data being transacted via these exchanges could rise to $3.6 trillion by 2030, by which time more than 1 million organizations would be monetizing their IoT data assets.[6] Read more about the Dawn of the Data Marketplace.

Big Data, Fast Data at SuperComputing

If you’re headed to SuperComputing 2018, make sure to join us at booth #3901 to see how our big data and fast data solutions are accelerating innovation. Join our speaking sessions, in-booth theater presentations, and demos, including:

- Petabyte-scale analytics – see how to easily scale Machine Learning and Data Lakes with the ActiveScale™ object storage system.

- Composable Fabric-Attached Storage – see our high-performance virtual DAS with a powerful ISV ecosystem.

- Get to know our high-capacity, high-performance devices for HPC, with industry-leading power efficiency. Scaling performance and capacity has never been more reliable or cost-effective.

We’ll also join our partners Globus and iRODS at the event to show an end-to-end data hub solution leveraging object storage versioning at petabyte scale.

Forward-Looking Statements

Certain blog and other posts on this website may contain forward-looking statements, including statements relating to expectations for our product portfolio, the market for our products, product development efforts, and the capacities, capabilities and applications of our products. These forward-looking statements are subject to risks and uncertainties that could cause actual results to differ materially from those expressed in the forward-looking statements, including development challenges or delays, supply chain and logistics issues, changes in markets, demand, global economic conditions and other risks and uncertainties listed in Western Digital Corporation’s most recent quarterly and annual reports filed with the Securities and Exchange Commission, to which your attention is directed. Readers are cautioned not to place undue reliance on these forward-looking statements and we undertake no obligation to update these forward-looking statements to reflect subsequent events or circumstances.

[1] https://www.digitalistmag.com/digital-economy/2018/05/09/machine-learning-in-real-world-06166003

[2] https://ai.google/research/pubs/pub46178

[3] http://www.sciencemag.org/news/2018/02/china-s-massive-investment-artificial-intelligence-has-insidious-downside

[4] https://mobilepaymentconference.com/why-china-leads-the-world-in-mobile-payments/

[6] https://datamakespossible.westerndigital.com/value-of-data-2018/dawn-of-data-marketplace/